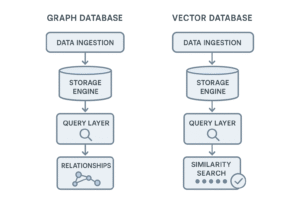

In modern data engineering and AI systems, the choice of database architecture matters a lot. Two of the fastest-growing database types are graph databases and vector databases. While they might look similar at first glance, their architectures, use cases, strengths and limitations differ significantly. This guide walks you through everything you need: what each database type is, how they work under the hood, the key differences, when to use which in a U.S. context, code examples, pros & cons, and how to choose for your project.

1. What is a Graph Database?

A graph database stores and manages data in a graph structure: entities are represented as nodes (also called vertices) and relationships between them are represented as edges. Properties or attributes can attach to nodes or edges.

Because relationships are stored directly, graph databases excel at queries that traverse connections: for example “friends of friends”, “all transactions connected to this account”, “supply-chain dependencies”, “knowledge graph reasoning”. They avoid heavy JOIN operations that relational databases require.

Example: In a social network, a user node might be connected via “follows” edges to other user nodes, and those in turn to posts, tags, etc. A graph database excels at queries like “find all users who are two steps away and liked the same tag”.
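
To make the "two steps away and liked the same tag" query concrete, here is a minimal in-memory sketch in plain Python. The user names, the `follows` and `liked_tags` dictionaries and the `two_hops_same_tag` helper are all hypothetical; a real graph database would run the equivalent traversal through its own query language and storage engine.

```python
# Toy social graph: "follows" edges between users and "liked" edges to tags.
# All names and data here are made up for illustration.
follows = {
    "alice": {"bob", "carol"},
    "bob": {"dave"},
    "carol": {"dave", "erin"},
    "dave": set(),
    "erin": set(),
}
liked_tags = {
    "alice": {"databases"},
    "bob": {"cooking"},
    "carol": {"databases"},
    "dave": {"databases", "python"},
    "erin": {"travel"},
}


def two_hops_same_tag(user: str, tag: str) -> set:
    """Return users exactly two 'follows' steps from `user` who liked `tag`."""
    direct = follows.get(user, set())
    two_hop = set()
    for friend in direct:
        two_hop |= follows.get(friend, set())
    # Drop the user and their direct follows so only second-degree users remain.
    two_hop -= direct | {user}
    return {u for u in two_hop if tag in liked_tags.get(u, set())}


print(two_hops_same_tag("alice", "databases"))  # {'dave'}
```

Each hop is a direct lookup of a node's neighbors, which is the access pattern a graph database is built around.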

2. What is a Vector Database?

A vector database is optimized to store and search high-dimensional vectors (embeddings) and perform similarity search, for example “find items whose embeddings are closest to this query embedding”.

These embeddings are often produced by machine learning models (text embeddings, image embeddings, audio embeddings). The vector database allows fast exact or approximate nearest-neighbor (ANN) search over them.

Example: You embed a sentence via an LLM, store the embedding, and then query with a new sentence embedding to find the most semantically similar stored items.
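
The store-then-query flow can be sketched with brute-force cosine similarity. The three-dimensional vectors below are invented stand-ins for real model embeddings, and `store`, `cosine_similarity` and `most_similar` are hypothetical names; a vector database would replace the linear scan with an index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings; real models emit hundreds or thousands of dimensions.
store = {
    "graph databases store nodes and edges": [0.9, 0.1, 0.0],
    "vector databases index embeddings": [0.1, 0.9, 0.1],
    "how to bake sourdough bread": [0.0, 0.1, 0.9],
}


def most_similar(query_vec, k=2):
    """Return the k stored texts whose embeddings are closest to query_vec."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]


query = [0.2, 0.8, 0.0]  # stand-in for the embedding of a new sentence
print(most_similar(query, k=1))  # ['vector databases index embeddings']
```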

3. Key Differences: Data Model, Querying & Performance

Data Model

Here’s a comparison:

| Aspect | Graph Database | Vector Database |
|---|---|---|
| Structure | Nodes + edges (relationships first) | High-dimensional vectors (feature space) |
| Query style | Traversal, path queries, pattern matching | Similarity/nearest-neighbor search, embedding comparison |
| Use case focus | Complex relationships & connections | Semantic similarity, unstructured data retrieval |
| Ideal data type | Highly connected or networked data | Text, images, audio, embeddings |

Performance and Scalability

Vector databases are optimized for large-scale embedding search. An exact nearest-neighbor search compares the query against every stored vector, so at scale these systems rely on ANN indexing techniques (for example HNSW or IVF), sometimes with GPU acceleration, trading a small amount of recall for much lower latency. Graph databases scale differently: they handle relationship queries very well, but extremely large or highly connected graphs can become performance bottlenecks if the data model and indexes are not designed carefully.
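
To show what an ANN index buys you, here is a simplified sketch in the spirit of an inverted-file (IVF) index: vectors are grouped by their nearest centroid, and a query only scans the one bucket it falls into. The centroids are hard-coded rather than learned, and every name and data point is hypothetical, so treat this as an illustration of the idea and not as how any particular product implements it.

```python
import math


def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_centroid(vec, centroids):
    """Index of the centroid closest to vec."""
    return min(range(len(centroids)), key=lambda i: dist(vec, centroids[i]))


def build_index(vectors, centroids):
    """Assign every vector id to the bucket of its nearest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for vid, vec in vectors.items():
        buckets[nearest_centroid(vec, centroids)].append(vid)
    return buckets


def exact_search(query, vectors):
    """Scan every vector: always correct, cost grows with the collection."""
    return min(vectors, key=lambda vid: dist(query, vectors[vid]))


def ann_search(query, vectors, centroids, buckets):
    """Scan only the query's bucket: cheaper, but can miss a neighbor
    that sits just across a bucket boundary."""
    candidates = buckets[nearest_centroid(query, centroids)]
    if not candidates:
        return None
    return min(candidates, key=lambda vid: dist(query, vectors[vid]))


vectors = {
    "a": [0.0, 0.1], "b": [0.2, 0.0], "c": [0.1, 0.3],
    "d": [5.0, 5.1], "e": [5.2, 4.9], "f": [4.8, 5.0],
}
centroids = [[0.0, 0.0], [5.0, 5.0]]  # assumed fixed; real indexes learn these
buckets = build_index(vectors, centroids)

query = [4.9, 5.2]
print(exact_search(query, vectors), ann_search(query, vectors, centroids, buckets))
```

Here the approximate search inspects three vectors instead of six and still returns the same result as the exact scan. Production indexes usually probe several buckets to recover recall near boundaries.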

4. When to Use Which: Use Cases

Use Cases for Graph Databases

- Social networks, friend recommendations

- Knowledge graphs and semantic reasoning

- Fraud detection, anti-money-laundering (AML) networks

- Supply chain, logistics, network routing
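
As a rough illustration of the fraud-detection case, the sketch below finds every account reachable from a flagged one with a breadth-first traversal. The account ids, the `links` list and the helper names are hypothetical; in practice the edges might represent shared devices, addresses or transfers.

```python
from collections import deque

# Hypothetical edges: two accounts are linked if they share a device,
# an address, or a direct transfer.
links = [
    ("acct_1", "acct_2"),
    ("acct_2", "acct_3"),
    ("acct_4", "acct_5"),
]


def build_adjacency(edges):
    """Turn an undirected edge list into a neighbor lookup."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def connected_accounts(start, adjacency, max_hops=None):
    """Breadth-first search for accounts reachable from `start`,
    optionally limited to `max_hops` edges."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        if max_hops is not None and hops >= max_hops:
            continue
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, hops + 1))
    seen.discard(start)
    return seen


adjacency = build_adjacency(links)
print(sorted(connected_accounts("acct_1", adjacency)))  # ['acct_2', 'acct_3']
```

Capping `max_hops` keeps the traversal bounded on dense graphs, where an unbounded walk can reach a large share of all accounts.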

Use Cases for Vector Databases

- Semantic search on text or images (RAG systems)

- Recommendation engines based on embeddings

- Anomaly detection via embedding clustering

- Large Language Model (LLM) retrieval + similarity search pipelines
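
For the anomaly-detection item, a deliberately simplified sketch: instead of full clustering, it treats all embeddings as one cluster and flags points that sit far from the centroid. The event names, two-dimensional vectors and threshold are invented for illustration, and a real pipeline would likely use a proper clustering or density method.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def find_anomalies(embeddings, threshold):
    """Return the names whose embedding lies farther than `threshold`
    from the centroid of all embeddings."""
    center = centroid(list(embeddings.values()))
    return [name for name, vec in embeddings.items()
            if euclidean(vec, center) > threshold]


# Hypothetical embeddings of login events; login_4 is the odd one out.
embeddings = {
    "login_1": [1.0, 1.0],
    "login_2": [1.1, 0.9],
    "login_3": [0.9, 1.1],
    "login_4": [4.0, 4.0],
}
print(find_anomalies(embeddings, threshold=2.0))  # ['login_4']
```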

Hybrid Use Cases

Increasingly, systems combine both: e.g., use a vector database to retrieve semantically similar items and then a graph database to explore relationships between retrieved items. This hybrid approach is gaining traction in U.S. enterprise AI architectures.

5. Code Examples & Setup Snippets

Here are simplified code snippets to illustrate both types.

Graph Database Example (Neo4j + Python)

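```python
# Install the driver first (shell): pip install neo4j
from neo4j import GraphDatabase

# Connection details for a local Neo4j instance; replace the credentials with your own.
uri = "bolt://localhost:7687"
driver = GraphDatabase.driver(uri, auth=("neo4j", "password"))


def create_person(tx, name):
    # MERGE avoids duplicate Person nodes if the script runs more than once.
    tx.run("MERGE (p:Person {name: $name})", name=name)


def create_friendship(tx, name1, name2):
    # Match both people, then link them with a FRIEND relationship.
    tx.run(
        """
        MATCH (a:Person {name: $name1}), (b:Person {name: $name2})
        MERGE (a)-[:FRIEND]->(b)
        """,
        name1=name1,
        name2=name2,
    )


with driver.session() as session:
    # execute_write is the driver 5.x name for the deprecated write_transaction.
    session.execute_write(create_person, "Alice")
    session.execute_write(create_person, "Bob")
    session.execute_write(create_friendship, "Alice", "Bob")

driver.close()
print("Done")
```

Vector Database Example (Weaviate + Python)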

```python
# Install the client (shell): pip install "weaviate-client<4"
# This snippet uses the v3 client API (weaviate.Client); the v4 client has a different interface.
import weaviate

client = weaviate.Client("http://localhost:8080")

# Create the schema. The text2vec-transformers module must be enabled on the server.
schema = {
    "classes": [
        {
            "class": "Document",
            "vectorizer": "text2vec-transformers",
            "properties": [
                {"name": "content", "dataType": ["text"]}
            ],
        }
    ]
}
client.schema.create(schema)

# Add data; the configured vectorizer generates the embedding on insert.
client.data_object.create(
    {"content": "This is a sample document about AI databases."},
    class_name="Document",
)

# Perform a similarity search
result = (
    client.query.get("Document", ["content"])
    .with_near_text({"concepts": ["graph vs vector database"]})
    .with_limit(5)
    .do()
)
print(result)
```

Copy and paste the code blocks above as needed. These are basic setups that you can expand for production use.

6. Pros & Cons of Each

Graph Database: Pros

- Direct modelling of relationships and connections.

- Efficient graph traversal and multi-hop relationship queries.

- Flexible schema: nodes and edges can evolve without rigid tables.

Graph Database: Cons

- Performance can degrade with extremely large or dense graphs.

- Requires thoughtful schema and indexing design for traversal efficiency.

- Less suited for high-dimensional similarity search tasks.

Vector Database: Pros

- Optimized for similarity search over embeddings, which makes them ideal for AI and unstructured data.

- Handles large volumes of unstructured data (text, images, audio) efficiently.

Vector Database: Cons

- Limited relational reasoning: embeddings capture similarity, not explicit relationships.

- High-dimensional vectors require significant storage and compute resources; indexing can be complex.

7. Hybrid Architectures: Combining Graph + Vector

As enterprise AI in the U.S. scales, many solutions use a hybrid approach: use a vector database for fast semantic retrieval, then a graph database to explore relationships between retrieved results. This gives the best of both worlds: semantic similarity plus relational reasoning.

For example, you might embed millions of documents into a vector store, query it to retrieve the top 10 similar docs, then load those into a graph database to trace how each document is connected via citations, authorship, topics, etc.
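
The retrieve-then-traverse flow can be sketched end to end in plain Python. Everything here is a hypothetical stand-in: `doc_vectors` plays the role of the vector store, `cites` plays the role of the graph, and the two-dimensional embeddings are invented. In a real system each step would be a call to the respective database.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Stand-in for the vector store: document id -> embedding.
doc_vectors = {
    "doc_a": [0.9, 0.1],
    "doc_b": [0.8, 0.3],
    "doc_c": [0.1, 0.9],
    "doc_d": [0.2, 0.8],
}

# Stand-in for the graph: document id -> documents it cites.
cites = {
    "doc_a": {"doc_c"},
    "doc_b": {"doc_a"},
    "doc_c": set(),
    "doc_d": {"doc_c"},
}


def retrieve(query_vec, k):
    """Step 1 (vector side): top-k documents by cosine similarity."""
    ranked = sorted(doc_vectors,
                    key=lambda d: cosine(query_vec, doc_vectors[d]),
                    reverse=True)
    return ranked[:k]


def expand(doc_ids):
    """Step 2 (graph side): follow citation edges from each retrieved document."""
    return {d: sorted(cites.get(d, set())) for d in doc_ids}


top = retrieve([1.0, 0.2], k=2)
print(top)          # ['doc_a', 'doc_b']
print(expand(top))  # {'doc_a': ['doc_c'], 'doc_b': ['doc_a']}
```

Note that the graph step surfaces `doc_c`, which the similarity search alone ranked last. That is the kind of related-but-dissimilar result the hybrid design is meant to recover.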

8. How to Choose for Your U.S. Project

Here are some guiding questions:

- What is the nature of your data? If you have rich inter-entity relationships (social graph, supply chain, network links), lean toward a graph database. If you have unstructured data represented as embeddings (text, images, user behaviour), lean toward a vector database.

- What kind of queries dominate? Relationship traversal and pattern matching → graph database. Nearest neighbour/similarity search → vector database.

- What are your performance and scalability requirements? Estimate how many embeddings you will index and how deep your graph traversals will go under the expected load, since both drive index size, memory and latency.

- Will you benefit from a hybrid approach? Many U.S. AI systems integrate both—so plan the architecture accordingly.

Also consider tooling, ecosystem and cloud availability: for U.S. deployments, platforms like AWS, GCP, Azure have managed graph & vector solutions—so choose based on cost, latency, region (us-east, us-west, etc.).

9. Frequently Asked Questions (FAQ)

Q: Can I store embeddings in a graph database?

Yes—some graph DBs now support vector properties on nodes. But they typically don’t match a dedicated vector DB’s speed for high-dimensional similarity search.

Q: Can I do relationship queries in a vector database?

Not directly. Vector DBs are optimized for embeddings and nearest neighbour search, not relationship traversals. For that you’d use a graph DB or hybrid setup.

Q: Are there managed services in the U.S. for each?

Yes. For graph databases: Amazon Neptune, Neo4j Aura (cloud), TigerGraph Cloud. For vector DBs: Pinecone, Weaviate Cloud, Zilliz Cloud (managed Milvus), Qdrant Cloud, all with U.S. regions.

Q: Which is better for AI and LLM retrieval-augmented generation (RAG)?

For the retrieval step, a vector DB is usually the better fit because it excels at embedding similarity search. You can add a graph DB layer for reasoning or relationship exploration, which results in a hybrid architecture.

10. Conclusion

Graph databases and vector databases serve different but complementary roles in modern data systems. Graph databases shine when you need to model and traverse relationships. Vector databases shine when you need to handle large volumes of unstructured data and similarity search. In many U.S.-based AI systems, the best design is hybrid—leveraging embeddings for retrieval and graphs for reasoning. Choose based on your data, queries and ecosystem, and you’ll be well-positioned for scalable, intelligent data architecture.