Kafka vs Webhooks: Complete Guide for Developers (2025)

When building modern applications, developers face a common challenge: how do different parts of a system talk to each other in real time? This is where the choice between Kafka and webhooks becomes an important decision. Both technologies help applications communicate and share data, but they work very differently. Think of it like choosing between sending text messages (webhooks) and having a dedicated mailbox system (Kafka) for your applications.

In this comprehensive guide, we’ll explore the fundamental differences between Apache Kafka and Webhooks, when to use each technology, and how they fit into modern event-driven architecture. Whether you’re building a microservices system, integrating third-party APIs, or designing a real-time data streaming pipeline, understanding these tools is essential for making the right architectural decisions. By the end of this article, you’ll know exactly which technology suits your project needs and how to implement it effectively.


1. Understanding Kafka and Webhooks: The Basics

Before diving into the comparison, let’s understand what each technology actually does. Imagine you’re organizing a school event where different teams need to share information. Webhooks are like sending direct text messages to specific people when something happens. When the basketball game ends, you immediately text the scoreboard keeper with the final score. It’s simple, direct, and happens right away.

Apache Kafka, on the other hand, works more like a community bulletin board with organized sections. Instead of texting everyone individually, you post updates to specific boards (called topics), and anyone interested can check that board whenever they want. The posts stay on the board for a while, so even if someone was busy during the game, they can catch up later by reading the bulletin board.

In technical terms, webhooks are HTTP callbacks that send data from one application to another when a specific event occurs. They follow a push model where the sender actively delivers data to the receiver’s endpoint. Kafka is a distributed event streaming platform that acts as a message broker, storing streams of data records in topics that multiple consumers can read independently. This fundamental difference in architecture affects everything from scalability to reliability in your system design.

How Webhooks Work

Webhooks operate on a straightforward principle: when something interesting happens in Application A, it immediately sends an HTTP POST request to Application B’s designated URL endpoint. Let’s break down the webhook workflow step by step:

- Registration: The receiving application (consumer) registers a URL endpoint with the sending application (provider). This is like giving your friend your phone number so they can text you updates.

- Event Trigger: When a specific event occurs in the provider’s system (like a new user signup, payment completion, or file upload), the webhook system activates.

- HTTP Request: The provider sends an HTTP POST request containing event data in JSON format to the consumer’s registered URL.

- Processing: The consumer receives the request, processes the data, and typically returns an HTTP 200 status code to confirm receipt.

- Retry Logic: If the request fails, most webhook systems attempt to resend the data several times before giving up.

Source: AWS Architecture Blog

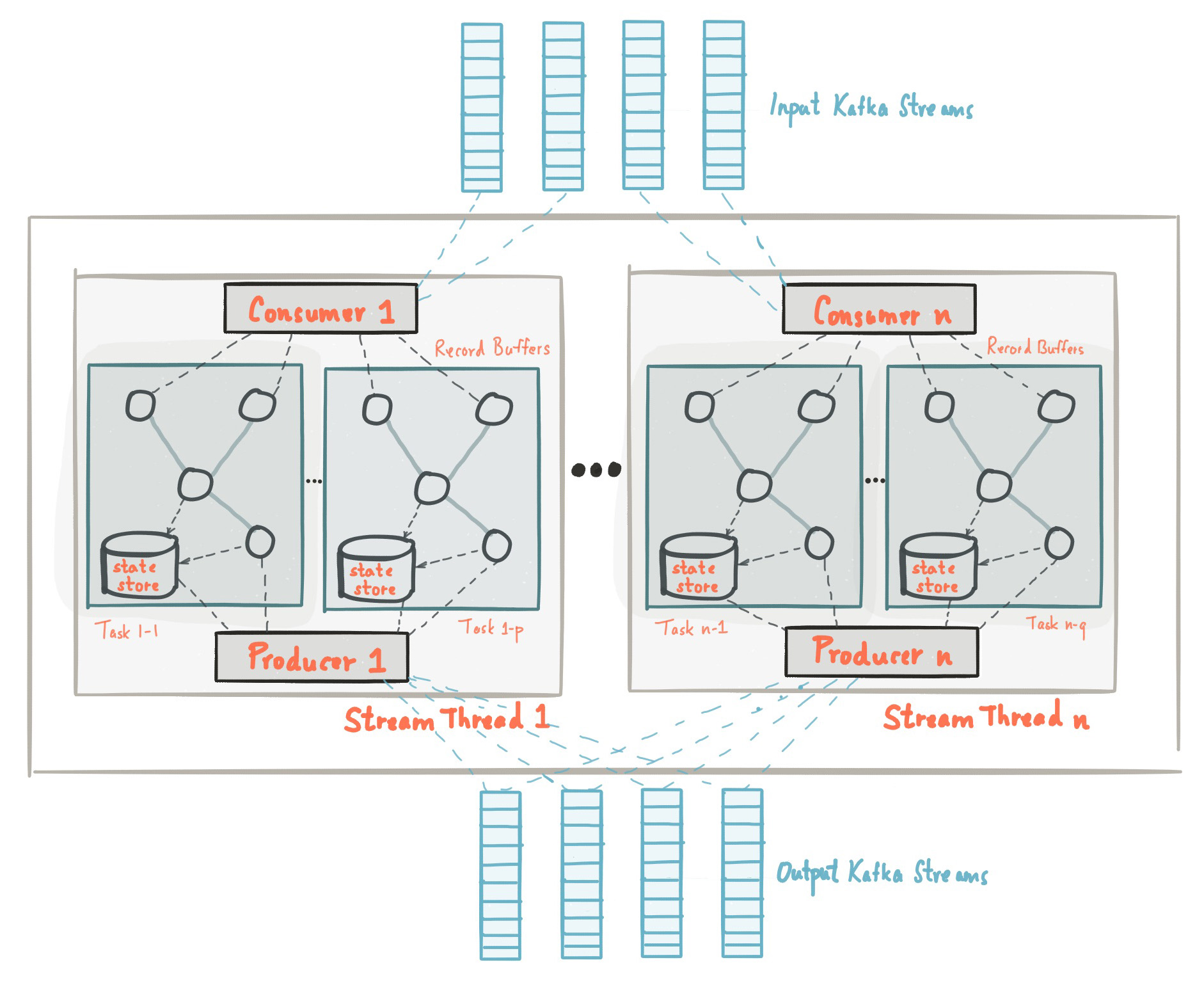
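The workflow above can be sketched from the provider's side. This is a minimal sketch under stated assumptions, not any particular provider's implementation: `registerEndpoint`, `deliverEvent`, the four-attempt limit, and the one-second backoff base are all hypothetical, and the HTTP call is injected as a `send` function so the example runs without a network.

```javascript
// Registered consumer endpoints, keyed by event type (step 1: registration).
const subscriptions = new Map();

function registerEndpoint(eventType, url) {
  const urls = subscriptions.get(eventType) ?? [];
  subscriptions.set(eventType, [...urls, url]);
}

// Delay before the next try after failed attempt `attempt` (1-based):
// base, 2x base, 4x base, ... (hypothetical schedule)
function backoffMs(attempt, baseMs = 1000) {
  return baseMs * 2 ** (attempt - 1);
}

// Steps 2-5: POST the event to every registered URL, retrying failures.
// `send(url, body)` must resolve to an HTTP status code; `sleep(ms)` waits.
async function deliverEvent(eventType, data, { send, sleep, maxAttempts = 4 }) {
  const body = JSON.stringify({ event: eventType, data });
  const results = [];
  for (const url of subscriptions.get(eventType) ?? []) {
    let delivered = false;
    let attempts = 0;
    while (!delivered && attempts < maxAttempts) {
      attempts += 1;
      try {
        const status = await send(url, body);
        delivered = status >= 200 && status < 300; // any 2xx confirms receipt
      } catch {
        delivered = false; // network error: treat like a failed delivery
      }
      if (!delivered && attempts < maxAttempts) {
        await sleep(backoffMs(attempts));
      }
    }
    results.push({ url, delivered, attempts });
  }
  return results;
}
```

In a real sender, `send` could wrap the global `fetch` available in Node 18+ and return `response.status`, and `sleep` could wrap `setTimeout` in a promise. Injecting both keeps the retry logic easy to test.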

How Apache Kafka Works

Kafka operates as a distributed streaming platform with a more complex but powerful architecture. Think of Kafka as a sophisticated post office system where messages are organized, stored, and delivered efficiently. Here’s how the Kafka streaming architecture functions:

- Producers: Applications that send data (messages) to Kafka topics. These are like people dropping letters into specific mailboxes.

- Topics: Categories or feeds where messages are stored. Each topic is divided into partitions for parallel processing and scalability.

- Brokers: Kafka servers that store data and serve client requests. Multiple brokers form a Kafka cluster for fault tolerance.

- Consumers: Applications that read data from Kafka topics. Multiple consumers can read the same data independently without affecting each other.

- Consumer Groups: Groups of consumers that work together to process data from a topic, enabling parallel processing and load distribution.

Source: Apache Kafka Official Documentation
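To make these pieces concrete, here is a toy in-memory model of a topic. It is only a sketch: the `Topic` class and its methods are hypothetical, nothing is persisted or replicated, and each consumer group is treated as a single reader (real Kafka divides a topic's partitions among the members of a group).

```javascript
// A toy, in-memory stand-in for a Kafka topic. Real brokers persist and
// replicate these logs; here each partition is just an array.
class Topic {
  constructor(name, partitionCount) {
    this.name = name;
    this.partitions = Array.from({ length: partitionCount }, () => []);
    this.groupOffsets = new Map(); // groupId -> next offset to read, per partition
  }

  // Producer side: append a record to one partition and return its offset.
  produce(partition, value) {
    const log = this.partitions[partition];
    log.push(value);
    return log.length - 1;
  }

  // Consumer side: return unread records for a group and advance its offsets.
  poll(groupId) {
    if (!this.groupOffsets.has(groupId)) {
      this.groupOffsets.set(groupId, this.partitions.map(() => 0));
    }
    const offsets = this.groupOffsets.get(groupId);
    const records = [];
    this.partitions.forEach((log, p) => {
      for (let o = offsets[p]; o < log.length; o += 1) {
        records.push({ partition: p, offset: o, value: log[o] });
      }
      offsets[p] = log.length;
    });
    return records;
  }
}

const orders = new Topic('orders', 2);
orders.produce(0, 'order-1');
orders.produce(1, 'order-2');

// Two groups read the same records without affecting each other.
console.log(orders.poll('inventory').length); // 2
console.log(orders.poll('analytics').length); // 2
console.log(orders.poll('inventory').length); // 0 (this group already read them)
```

The key point is that reading does not remove anything from the log. Each group only moves its own offsets forward.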

2. Key Differences Between Kafka and Webhooks

Understanding the Kafka vs webhooks debate requires looking at several critical dimensions. While both enable real-time data communication, their architectural approaches create distinct advantages and trade-offs. Let’s explore the major differences that impact your technology choice:

Communication Pattern

Webhooks follow a direct push model with point-to-point communication. When an event happens, the source system immediately pushes data to the destination endpoint. This creates a tight coupling between sender and receiver—the sender needs to know exactly where to send the data, and the receiver must be ready to accept it immediately.

Kafka implements a publish-subscribe pattern with loose coupling. Producers publish messages to topics without knowing who will read them. Consumers subscribe to topics and pull data at their own pace. This decoupling means adding new consumers doesn’t require changing producers, making the system more flexible and maintainable.

Data Persistence and Replay

One of the biggest differences lies in data storage. Webhooks are fire-and-forget by nature. Once the HTTP request is sent and acknowledged (or fails after retries), the data is gone. If your application was down during the webhook delivery, you’ve lost that data unless the provider implements complex retry mechanisms with exponential backoff.

Kafka stores all messages in topics for a configurable retention period (days, weeks, or even indefinitely). This creates a durable event log that consumers can replay. If your consumer crashes, it can resume from where it left off. If you need to reprocess historical data or add a new consumer that needs past events, Kafka makes this trivial. This is invaluable for debugging, auditing, and building event sourcing systems.
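Retention is a per-topic setting. As a small illustration, the helper below (a hypothetical `retentionConfig`, not part of any library) builds the name/value pairs for Kafka's topic-level `retention.ms` and `cleanup.policy` settings. How you apply them (CLI, admin client, or a managed console) depends on your setup.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Build topic-level retention settings. `days = null` means keep forever,
// which Kafka expresses as retention.ms = -1.
function retentionConfig(days) {
  const retentionMs = days === null ? -1 : days * MS_PER_DAY;
  return [
    { name: 'cleanup.policy', value: 'delete' },
    { name: 'retention.ms', value: String(retentionMs) },
  ];
}

console.log(retentionConfig(7));
// [ { name: 'cleanup.policy', value: 'delete' },
//   { name: 'retention.ms', value: '604800000' } ]
```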

Scalability and Performance

When discussing scalability, webhooks face inherent limitations. The sender must maintain connections to every receiver and handle their individual response times. If you have 100 consumers, the sender needs to make 100 HTTP requests for each event. As you add more consumers or events, this creates significant overhead. Network issues, slow receivers, or downtime can cascade into performance problems for the sender.

Kafka excels at handling high-throughput scenarios with millions of messages per second. Its distributed architecture allows horizontal scaling by adding more brokers and partitions. Multiple consumers can read from the same topic simultaneously without impacting each other’s performance. Kafka’s design specifically addresses big data streaming and real-time analytics use cases where webhooks would struggle.
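The fan-out difference is easy to see with a little arithmetic. The sketch below uses made-up numbers and ignores retries and batching; the function names are hypothetical.

```javascript
// Outbound deliveries the sender must make per second with webhooks.
function webhookRequestsPerSecond(eventsPerSecond, consumers) {
  return eventsPerSecond * consumers; // one HTTP POST per event per consumer
}

// Writes the producer must make per second when publishing to a Kafka topic.
function kafkaWritesPerSecond(eventsPerSecond) {
  return eventsPerSecond; // consumers pull on their own; the producer writes once
}

console.log(webhookRequestsPerSecond(500, 100)); // 50000
console.log(kafkaWritesPerSecond(500)); // 500
```

With Kafka the read load still exists, but it lands on the brokers and the consumers rather than on the application producing the events.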

Failure Handling and Reliability

With webhooks, failure handling depends entirely on the sender implementing retry logic. Many webhook providers retry failed deliveries a handful of times with exponential backoff (the count and schedule vary by provider), but once retries are exhausted the event is dropped unless the provider offers some form of manual redelivery. The receiver has no control over retry behavior. If your endpoint is down for maintenance longer than the retry window, you might miss critical events unless you run a highly available receiving tier that queues events for later processing.

Kafka provides configurable delivery guarantees. It supports at-most-once, at-least-once, and exactly-once semantics depending on how producers and consumers are configured. Messages are replicated across multiple brokers for fault tolerance, and a consumer that fails can resume from its last committed offset. With a replication factor above one and producers that wait for acknowledgment from all in-sync replicas (acks=all), a single broker failure does not lose acknowledged messages.
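The difference between at-least-once and at-most-once comes down to whether a consumer commits its offset after or before processing a record. The simulation below is a sketch with a hypothetical `runWithCrash` helper and a single simulated crash; it does not use a real Kafka client, and exactly-once (which in Kafka relies on idempotent producers and transactions) is not modelled.

```javascript
// Consume `log` from the last committed offset, crashing once at `crashAt`
// in the gap between processing and committing.
// 'at-least-once': process, then commit. 'at-most-once': commit, then process.
function runWithCrash(log, mode, crashAt) {
  const processed = [];
  let committed = 0;
  let crashed = false;

  while (committed < log.length) {
    // (Re)start: resume from the last committed offset.
    for (let offset = committed; offset < log.length; offset += 1) {
      const crashNow = offset === crashAt && !crashed;
      if (mode === 'at-most-once') {
        committed = offset + 1; // commit first
        if (crashNow) { crashed = true; break; } // record is never processed
        processed.push(log[offset]);
      } else {
        processed.push(log[offset]); // process first
        if (crashNow) { crashed = true; break; } // record will be redelivered
        committed = offset + 1;
      }
    }
  }
  return processed;
}

console.log(runWithCrash(['a', 'b', 'c'], 'at-least-once', 1)); // [ 'a', 'b', 'b', 'c' ]
console.log(runWithCrash(['a', 'b', 'c'], 'at-most-once', 1));  // [ 'a', 'c' ]
```

At-least-once never loses `b` but delivers it twice, which is why consumers should be idempotent. At-most-once never duplicates but silently drops `b`.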

3. When to Use Webhooks: Best Use Cases

Despite Kafka’s powerful capabilities, webhooks remain the better choice for many scenarios. Understanding when to use webhooks versus Kafka helps you avoid over-engineering your system. Here are the situations where webhooks shine:

Simple Event Notifications

If you need to notify another system about a single event happening—like a payment completed, user signed up, or file uploaded—webhooks are perfect. The simplicity of sending an HTTP POST request makes integration quick and straightforward. For example, when integrating with third-party services like Stripe, GitHub, or Slack, webhooks are the standard approach because they require minimal infrastructure setup.

Third-Party API Integrations

Most SaaS platforms and external APIs provide webhooks for real-time notifications. If you’re building integrations with services like Shopify for e-commerce events, Twilio for messaging notifications, or SendGrid for email tracking, webhooks are usually the only push-based option; the alternative is polling their APIs. These providers send webhooks because a receiving endpoint is simple for developers to implement without requiring complex infrastructure.

Low-Volume Event Streams

When dealing with low to moderate event volumes (dozens to hundreds per second), webhooks handle the load efficiently without the operational overhead of managing a Kafka cluster. If you’re building a small to medium-sized application where events happen sporadically, the infrastructure cost of running Kafka outweighs its benefits.

Real-Time, Single Consumer Scenarios

If each event only needs to be processed by one consumer immediately, webhooks provide the most direct path. Consider a payment gateway notifying your backend about completed transactions—you want that notification immediately, and only your backend needs to process it. Adding Kafka as a middleman introduces unnecessary complexity.

    // Simple Express.js webhook endpoint
    const express = require('express');

    const app = express();
    app.use(express.json());

    // Webhook endpoint for payment notifications
    app.post('/webhooks/payment', async (req, res) => {
      try {
        const { event, data } = req.body;

        if (event === 'payment.completed') {
          // Process payment completion
          await processPayment(data.payment_id, data.amount);
          console.log(`Payment ${data.payment_id} processed successfully`);
        }

        // Return 200 to acknowledge receipt
        res.status(200).json({ received: true });
      } catch (error) {
        console.error('Webhook processing error:', error);
        res.status(500).json({ error: 'Processing failed' });
      }
    });

    async function processPayment(paymentId, amount) {
      // Update database, send confirmation email, etc.
      // Business logic here
    }

    app.listen(3000, () => {
      console.log('Webhook server running on port 3000');
    });

4. When to Use Kafka: Best Use Cases

Apache Kafka becomes essential when your system needs to handle complex data streaming scenarios. The Kafka vs webhooks decision tips heavily toward Kafka when you encounter these requirements:

High-Throughput Data Streaming

When your application generates thousands or millions of events per second, Kafka’s distributed architecture handles the load effortlessly. Real-time analytics platforms, IoT sensor networks, and financial trading systems rely on Kafka because it can process massive data volumes while maintaining low latency. Webhooks would create unmanageable network overhead and fail under this pressure.

Multiple Independent Consumers

If multiple systems need to react to the same events independently, Kafka’s publish-subscribe model is ideal. Imagine an e-commerce order event that needs to trigger inventory updates, shipping notifications, analytics recording, and fraud detection—all simultaneously. With Kafka, you publish the order event once, and four different consumer applications can process it independently without affecting each other. Webhooks would require the producer to send four separate HTTP requests.

Event Sourcing and Data Replay

Systems that implement event sourcing—where all state changes are stored as a sequence of events—rely heavily on Kafka’s persistence capabilities. You can rebuild application state by replaying events from the beginning. This is crucial for audit trails, debugging production issues, and implementing CQRS (Command Query Responsibility Segregation) patterns. Webhooks offer no replay capability.
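Rebuilding state by replay is just a fold over the event log. Here is a minimal sketch with hypothetical event shapes and an in-memory array standing in for the Kafka topic:

```javascript
// Apply one event to the current state and return the new state.
function applyEvent(state, event) {
  switch (event.type) {
    case 'order.created':
      return { ...state, [event.orderId]: { status: 'created', amount: event.amount } };
    case 'order.paid':
      return { ...state, [event.orderId]: { ...state[event.orderId], status: 'paid' } };
    case 'order.cancelled':
      return { ...state, [event.orderId]: { ...state[event.orderId], status: 'cancelled' } };
    default:
      return state; // ignore event types this projection does not know
  }
}

// Rebuild state from scratch by replaying the log from the first event.
// `upTo` limits the replay to the first N events.
function replay(events, upTo = events.length) {
  return events.slice(0, upTo).reduce(applyEvent, {});
}

const log = [
  { type: 'order.created', orderId: 'o1', amount: 40 },
  { type: 'order.created', orderId: 'o2', amount: 15 },
  { type: 'order.paid', orderId: 'o1' },
  { type: 'order.cancelled', orderId: 'o2' },
];

console.log(replay(log));    // current state: o1 is paid, o2 is cancelled
console.log(replay(log, 2)); // state after the second event: both orders 'created'
```

Replaying only a prefix of the log is what makes point-in-time debugging and audits possible.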

Microservices Communication

In microservices architectures, Kafka serves as the central nervous system for asynchronous communication. Services publish domain events to Kafka topics, and other services subscribe to relevant topics. This decoupling allows services to evolve independently, scale individually, and maintain loose coupling. For detailed patterns on microservices communication, check out our guide on microservices architecture patterns.

Stream Processing and Analytics

Kafka Streams and ksqlDB (the successor to KSQL) enable real-time stream processing directly on Kafka data. You can filter, transform, aggregate, and join streams in real time without moving data to external systems. This is powerful for building real-time dashboards, detecting anomalies, or calculating running statistics. Webhooks provide no built-in stream processing capabilities.

    // Kafka producer example using Node.js (kafkajs library)
    const { Kafka } = require('kafkajs');

    const kafka = new Kafka({
      clientId: 'my-app',
      brokers: ['localhost:9092']
    });

    const producer = kafka.producer();

    // Connect once at startup and reuse the connection; connecting and
    // disconnecting around every message adds latency to each send.
    async function startProducer() {
      await producer.connect();
    }

    async function sendOrderEvent(order) {
      try {
        await producer.send({
          topic: 'orders',
          messages: [
            {
              // Keys must be strings or Buffers. The same key always maps to
              // the same partition, which keeps one order's events in sequence.
              key: String(order.orderId),
              value: JSON.stringify({
                orderId: order.orderId,
                customerId: order.customerId,
                amount: order.amount,
                items: order.items,
                timestamp: Date.now()
              }),
              headers: {
                'event-type': 'order.created'
              }
            }
          ]
        });
        console.log('Order event published to Kafka');
      } catch (error) {
        console.error('Failed to publish event:', error);
        throw error; // let the caller decide whether to retry or fail the request
      }
    }

    // Call on shutdown (for example from a SIGTERM handler)
    async function stopProducer() {
      await producer.disconnect();
    }

    // Multiple consumers can now process this order independently
    // - Inventory service reads from 'orders' topic
    // - Analytics service reads from 'orders' topic
    // - Notification service reads from 'orders' topic
    // Each at their own pace, with their own offset tracking

5. Implementation Challenges and Best Practices

Both technologies come with their own set of challenges. Understanding common pitfalls helps you implement event-driven architecture successfully. Let’s explore practical considerations for both Kafka and webhooks.

Webhook Implementation Challenges

Security Concerns: Webhooks expose public endpoints that anyone can potentially call. Always implement signature verification using HMAC or similar cryptographic methods to ensure requests truly come from the expected sender. Validate the signature in every webhook request before processing data.
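Here is a minimal sketch of both halves of HMAC verification, using only Node’s built-in `crypto` module. The function names, the hex encoding, and the shared secret are assumptions for illustration; real providers each define their own header name and signing scheme, so check their documentation.

```javascript
const crypto = require('node:crypto');

// Sender side: sign the exact bytes of the request body.
function signPayload(rawBody, secret) {
  return crypto.createHmac('sha256', secret).update(rawBody).digest('hex');
}

// Receiver side: recompute the signature and compare in constant time.
function isValidSignature(rawBody, receivedSignature, secret) {
  if (typeof receivedSignature !== 'string') return false;
  const expected = Buffer.from(signPayload(rawBody, secret), 'hex');
  const received = Buffer.from(receivedSignature, 'hex');
  // timingSafeEqual throws if lengths differ, so check that first.
  return expected.length === received.length &&
    crypto.timingSafeEqual(expected, received);
}

const secret = 'shared-secret'; // hypothetical; load from configuration in practice
const body = JSON.stringify({ event: 'payment.completed', data: { payment_id: 'p_1' } });
const signature = signPayload(body, secret);

console.log(isValidSignature(body, signature, secret));         // true
console.log(isValidSignature(body + ' ', signature, secret));   // false (body tampered)
console.log(isValidSignature(body, signature, 'wrong-secret')); // false
```

Note that the signature covers the raw request body, so verify it before any JSON parsing or re-serialization changes the bytes.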

Idempotency: Networks are unreliable, so webhooks might be delivered multiple times. Design your webhook handlers to be idempotent—processing the same event multiple times should produce the same result. Use unique event IDs to track which events you’ve already processed.
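A simple way to get idempotency is to remember which event IDs have already been applied. The sketch below assumes a hypothetical event shape and keeps the IDs in memory; in production that record would live in a database or cache shared by all instances.

```javascript
// IDs of events already handled. A Set is enough to show the idea.
const processedEventIds = new Set();
const balances = new Map();

// Hypothetical event shape: { id, type, data: { account, amount } }.
function handleEvent(event) {
  if (processedEventIds.has(event.id)) {
    return 'duplicate'; // already applied: acknowledge without side effects
  }
  const current = balances.get(event.data.account) ?? 0;
  balances.set(event.data.account, current + event.data.amount);
  processedEventIds.add(event.id);
  return 'processed';
}

const event = {
  id: 'evt_1',
  type: 'payment.completed',
  data: { account: 'acct_1', amount: 25 },
};

console.log(handleEvent(event));     // processed
console.log(handleEvent(event));     // duplicate
console.log(balances.get('acct_1')); // 25
```

A duplicate still gets a success response, so the sender stops retrying, but the balance is only credited once.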

Timeout and Retry Handling: Webhook senders expect quick responses (typically within 5-10 seconds, though the exact limit varies by provider). If your processing takes longer, immediately acknowledge receipt with HTTP 200 and process asynchronously. Otherwise, the sender will time out and retry, creating duplicate processing.

Testing and Debugging: Testing webhooks in development requires either public endpoints or tunneling tools like ngrok. Local development becomes cumbersome when you can’t easily receive webhook calls.

Kafka Implementation Challenges

Infrastructure Complexity: Running Kafka requires managing multiple brokers, a metadata quorum (KRaft, or ZooKeeper on clusters older than Kafka 4.0, which removed ZooKeeper support), monitoring tools, and ensuring high availability. The operational overhead is significant compared to webhooks. Consider managed services like Confluent Cloud, AWS MSK, or Azure Event Hubs (which exposes a Kafka-compatible endpoint) to reduce this burden.

Message Ordering: Kafka guarantees message order only within a partition, not across partitions. If order matters, carefully design your partitioning strategy. Use message keys that ensure related messages go to the same partition.
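The idea behind key-based partitioning can be shown in a few lines. This is a sketch only: Kafka’s Java client hashes keys with murmur2, while the simple string hash here is a stand-in chosen for readability, and `partitionFor` is a hypothetical name.

```javascript
// Map a message key to a partition with a deterministic hash.
function partitionFor(key, partitionCount) {
  let hash = 0;
  for (const ch of String(key)) {
    hash = (hash * 31 + ch.codePointAt(0)) >>> 0; // keep it an unsigned 32-bit int
  }
  return hash % partitionCount;
}

const events = [
  { key: 'order-42', type: 'order.created' },
  { key: 'order-7', type: 'order.created' },
  { key: 'order-42', type: 'order.paid' },
];

// Both 'order-42' events print the same partition number.
for (const e of events) {
  console.log(e.key, e.type, '-> partition', partitionFor(e.key, 6));
}
```

Because the mapping depends on the partition count, adding partitions later changes where keys land, so ordering guarantees only hold for events written under the same partition count.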

Consumer Group Management: Understanding consumer groups, partition assignment, and rebalancing is crucial. Poor consumer design can lead to message processing delays or uneven load distribution. Monitor consumer lag to ensure consumers keep up with producers.

Schema Evolution: As your data structures evolve, managing schema changes becomes critical. Use schema registries and formats like Avro or Protobuf to handle backward and forward compatibility. This prevents breaking consumers when producers update their message format.

Best Practices for Both Technologies

- Monitoring and Alerting: Implement comprehensive monitoring for both webhooks and Kafka. Track webhook delivery success rates, latency, and error rates. For Kafka, monitor consumer lag, broker health, and partition replication status.

- Error Handling: Always implement proper error handling and dead letter queues. When message processing fails repeatedly, move failed messages to a separate topic or storage for manual investigation.

- Documentation: Document your webhook endpoints or Kafka topics thoroughly. Include payload schemas, event types, retry policies, and authentication requirements. This is crucial for teams working with your APIs.

- Gradual Rollout: When introducing new event types or changing existing ones, use feature flags and gradual rollouts. This allows you to test changes with a small percentage of traffic before full deployment.

- Load Testing: Test your systems under realistic load conditions. Simulate webhook spikes or Kafka throughput bursts to identify bottlenecks before they hit production.
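The error-handling practice above (retry, then park the message) might look like the sketch below. `processWithDeadLetter` and the three-attempt limit are hypothetical, and a plain array stands in for the dead letter queue; with Kafka that would typically be a separate topic.

```javascript
// Try `handler` up to `maxAttempts` times; park the message in a dead letter
// queue (with the last error) if every attempt fails.
function processWithDeadLetter(message, handler, deadLetterQueue, maxAttempts = 3) {
  let lastError;
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    try {
      handler(message);
      return { ok: true, attempts: attempt };
    } catch (error) {
      lastError = error;
    }
  }
  deadLetterQueue.push({ message, error: lastError.message, failedAt: Date.now() });
  return { ok: false, attempts: maxAttempts };
}

const dlq = [];
const handler = (msg) => {
  if (typeof msg.amount !== 'number') throw new Error('amount must be a number');
};

console.log(processWithDeadLetter({ id: 1, amount: 10 }, handler, dlq));    // { ok: true, attempts: 1 }
console.log(processWithDeadLetter({ id: 2, amount: 'ten' }, handler, dlq)); // { ok: false, attempts: 3 }
console.log(dlq.length); // 1
```

Storing the error alongside the message saves time later, since whoever investigates the queue can see why each message failed.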

6. Real-World Example: E-Commerce Order Processing

Let’s examine how an e-commerce platform might use both technologies together. This practical example demonstrates that Kafka vs webhooks isn’t always an either-or choice; sometimes using both creates the best architecture.

Scenario Overview

An online store needs to handle order processing with multiple downstream systems: inventory management, shipping, customer notifications, fraud detection, and analytics. The order flow involves both external third-party services and internal microservices.

Using Webhooks

The platform uses webhooks for external integrations where they’re the only option or most appropriate:

- Payment Gateway: Stripe sends webhook notifications when payments are completed, failed, or refunded. The backend receives these webhooks and updates order status accordingly.

- Shipping Provider: When creating a shipment, the shipping API (like ShipStation) provides webhook notifications for tracking updates—label created, package picked up, out for delivery, delivered.

- Email Service: SendGrid uses webhooks to notify about email delivery status—opened, clicked, bounced, or marked as spam.

Using Kafka

For internal communication between microservices, the platform uses Kafka topics:

- orders Topic: When an order is created, modified, or cancelled, an event is published here. Multiple services subscribe to this topic.

- inventory Topic: Inventory service publishes stock level changes. Order service subscribes to prevent selling out-of-stock items.

- fraud-alerts Topic: Fraud detection service publishes suspicious activity. Order service subscribes to automatically hold suspicious orders.

- analytics-events Topic: All user interactions and transactions flow through here for real-time analytics and business intelligence.

The Hybrid Architecture

When a customer places an order, the system orchestrates both technologies:

1. User submits order through the web application

2. Order service validates and creates the order in the database

3. Order service publishes “order.created” event to Kafka orders topic

4. Multiple consumers independently process the event:

   - Inventory service decrements stock levels

   - Fraud service runs fraud detection algorithms

   - Analytics service records the conversion for reporting

   - Notification service sends order confirmation email

5. Order service calls payment gateway API, which eventually sends a webhook back with payment status

6. When webhook confirms payment, order service publishes “order.paid” event to Kafka

7. Shipping service consumes “order.paid” event and creates shipment with shipping provider API

8. Shipping provider sends webhook notifications as package moves through delivery stages

9. Each webhook triggers a Kafka event for internal services to stay synchronized

This hybrid approach leverages the strengths of both technologies. Webhooks handle external integrations where you have no choice, while Kafka manages internal microservices communication with its superior scalability, replay capability, and decoupling benefits. The architecture remains flexible, maintainable, and can scale as the business grows.

7. Performance Comparison and Cost Considerations

When evaluating Kafka vs webhooks, performance characteristics and operational costs play a crucial role in decision-making. Let’s analyze the practical implications of each approach.

Performance Metrics

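Latency: For a single event, a webhook is one direct HTTP request with no broker in between, so it can have lower latency. End-to-end latency is usually 10-100 milliseconds, dominated by network conditions between sender and receiver. Kafka adds a hop through the broker plus batching and commit overhead, typically 5-50 milliseconds within the cluster. The two ranges are not directly comparable (one usually crosses the public internet, the other stays inside your network), so measure both in your own environment. Kafka’s overhead is offset by its ability to handle millions of messages per second.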

Throughput: Kafka dominates in throughput scenarios. A well-configured Kafka cluster easily handles millions of messages per second across multiple topics. Webhooks are constrained by HTTP connection limits, network bandwidth, and the sender’s ability to manage concurrent outbound requests. At high volumes, webhook infrastructure becomes complex and expensive to maintain.

Reliability: Kafka’s replication and persistence mechanisms provide superior reliability. Messages are not lost even if consumers are temporarily unavailable. Webhooks depend on retry logic, and after exhausting retries, events are typically lost unless you build custom queuing systems.

Infrastructure Costs

Webhooks: Low infrastructure cost for receiving webhooks—just standard web servers. However, sending webhooks to many consumers requires managing outbound connections, retry queues, and monitoring infrastructure. Costs scale linearly with the number of consumers and event volume.

Kafka: Higher upfront infrastructure cost. A production Kafka cluster typically runs at least 3 brokers for fault tolerance, plus KRaft controllers (or ZooKeeper on clusters older than Kafka 4.0). Managed services like Confluent Cloud or AWS MSK start around $200-500/month for small production setups, though pricing changes often, so check current rates. However, costs scale more efficiently at high volumes because adding a consumer adds no work for the producer; you’re not making additional HTTP requests.

Operational Costs

Webhooks require minimal operational expertise—most developers understand HTTP and can implement webhook endpoints quickly. Kafka requires specialized knowledge about distributed systems, partition strategies, consumer group management, and monitoring. The learning curve is steeper, and you may need dedicated platform engineers to manage Kafka infrastructure.

Cost-Benefit Analysis

For low to moderate volumes (thousands of events per day) with few consumers, webhooks are more cost-effective. The simplicity advantage outweighs any technical limitations. As you scale to millions of events per day with multiple consumers, Kafka becomes dramatically more cost-effective despite higher infrastructure costs. The break-even point typically occurs around 10,000-50,000 events per hour with 3+ consumers, though this varies based on your specific requirements.

Frequently Asked Questions

What is the main difference between Kafka and webhooks?

The primary difference is architectural: webhooks use direct HTTP callbacks for point-to-point communication, sending data immediately when events occur. Kafka is a distributed event streaming platform that stores messages in topics, allowing multiple consumers to independently read the same data. Webhooks are simpler but less scalable, while Kafka handles high-throughput scenarios and provides data persistence with replay capabilities.

When should I use webhooks instead of Kafka?

Use webhooks for simple event notifications, third-party API integrations, low-volume event streams, and scenarios with single consumers. Webhooks are ideal when you need immediate delivery to one specific endpoint, are integrating with external services that only support webhooks, or want to avoid the operational complexity of managing a distributed streaming platform like Kafka.

Can Kafka completely replace webhooks?

No, Kafka cannot completely replace webhooks because many third-party services only expose webhook interfaces for real-time notifications. Additionally, for simple use cases with low event volumes, webhooks provide a more straightforward solution without requiring Kafka’s infrastructure overhead. The best approach often combines both technologies: webhooks for external integrations and Kafka for internal microservices communication.

How should I use Kafka and webhooks in a microservices architecture?

For microservices, use Kafka as the primary event bus for asynchronous communication between internal services. This provides loose coupling, scalability, and replay capabilities. Use webhooks only when integrating with external third-party services or when you need to notify external systems about events. Design your architecture so external webhook events can trigger internal Kafka events, creating a unified event-driven system.

How do Kafka and webhooks compare on performance?

Webhooks can have slightly lower latency for single event delivery but don’t scale well with multiple consumers or high throughput. Kafka introduces minimal latency overhead but excels at handling millions of messages per second across multiple consumers. For systems processing more than 10,000 events per hour with multiple consumers, Kafka’s performance advantages become significant while webhooks would struggle with connection management and retry logic.

How do Kafka and webhooks differ in data persistence?

Kafka stores all messages in topics for a configurable retention period, allowing consumers to replay historical data and resume from where they left off after failures. Webhooks are fire-and-forget with no built-in persistence: once delivered or failed after retries, the data is gone. This makes Kafka essential for audit trails, event sourcing, and scenarios where you need to reprocess historical events or add new consumers that need past data.

What are the security considerations for each?

Because webhooks expose public endpoints, they need HMAC signature verification to authenticate requests and SSL/TLS encryption, plus IP allowlisting where the provider publishes its address ranges. Kafka security involves SASL authentication, ACL-based authorization for topics and consumer groups, SSL encryption for data in transit, and network isolation since it typically runs within your infrastructure. Both require comprehensive monitoring and audit logging to detect unauthorized access attempts.

8. Advanced Patterns and Integration Strategies

As your system evolves, you’ll encounter scenarios that require sophisticated patterns combining both technologies. Understanding these advanced integration strategies helps you build robust, scalable event-driven architectures.

Webhook to Kafka Bridge Pattern

A common pattern is creating a webhook receiver that immediately publishes events to Kafka. This bridges external webhook notifications with your internal Kafka-based microservices architecture. The webhook endpoint quickly acknowledges receipt (avoiding timeout issues), then publishes the event to a Kafka topic where multiple internal services can process it independently.

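    // Webhook to Kafka bridge implementation
    const express = require('express');
    const crypto = require('crypto');
    const { Kafka } = require('kafkajs');

    const app = express();

    const kafka = new Kafka({
      clientId: 'webhook-bridge',
      brokers: ['localhost:9092']
    });

    const producer = kafka.producer();

    // Verify webhook signature for security.
    // Stripe's header looks like "t=<timestamp>,v1=<hex signature>", and the
    // signature is an HMAC-SHA256 over "<timestamp>.<raw body>". In production,
    // prefer the official stripe library's webhooks.constructEvent(), which
    // also rejects old timestamps.
    function verifyWebhookSignature(payload, signatureHeader, secret) {
      if (!signatureHeader || !secret) return false;

      const parts = Object.fromEntries(
        signatureHeader.split(',').map((part) => part.split('='))
      );
      if (!parts.t || !parts.v1) return false;

      const digest = crypto
        .createHmac('sha256', secret)
        .update(`${parts.t}.${payload}`)
        .digest('hex');

      const expected = Buffer.from(digest);
      const received = Buffer.from(parts.v1);

      // timingSafeEqual throws when lengths differ, so compare lengths first
      return expected.length === received.length &&
        crypto.timingSafeEqual(expected, received);
    }

    app.post('/webhooks/stripe', express.raw({ type: 'application/json' }), async (req, res) => {
      const signature = req.headers['stripe-signature'];
      const webhookSecret = process.env.STRIPE_WEBHOOK_SECRET;

      try {
        // express.raw() leaves the body as a Buffer; keep the exact text for verification
        const rawBody = Buffer.isBuffer(req.body) ? req.body.toString('utf8') : '';

        // Verify webhook authenticity
        if (!verifyWebhookSignature(rawBody, signature, webhookSecret)) {
          return res.status(401).json({ error: 'Invalid signature' });
        }

        // Immediately acknowledge receipt to avoid timeout.
        // Trade-off: if the publish below fails, the sender will not retry.
        // Publish first and acknowledge afterwards if you would rather get a retry.
        res.status(200).json({ received: true });

        // Parse webhook data
        const event = JSON.parse(rawBody);

        // Publish to Kafka for internal processing
        await producer.send({
          topic: 'payment-events',
          messages: [{
            key: event.id,
            value: JSON.stringify({
              source: 'stripe-webhook',
              eventType: event.type,
              data: event.data,
              timestamp: Date.now()
            })
          }]
        });

        console.log(`Webhook event ${event.id} bridged to Kafka`);
      } catch (error) {
        console.error('Webhook bridge error:', error);
        // If the 200 already went out there is nothing more to send;
        // otherwise report the failure so the sender can retry
        if (!res.headersSent) {
          res.status(500).json({ error: 'Processing failed' });
        }
      }
    });

    // Start producer connection
    producer.connect().then(() => {
      app.listen(3000, () => {
        console.log('Webhook bridge running on port 3000');
      });
    });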

Saga Pattern with Event Choreography

When implementing distributed transactions across microservices, the Saga pattern uses Kafka for event choreography. Each service listens to relevant events, performs its local transaction, and publishes new events. This creates a chain of events that collectively complete a business process. If any step fails, compensating events are published to roll back previous steps.
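Here is a small choreography sketch with one compensating step. Everything in it is illustrative: a synchronous in-memory event bus stands in for Kafka topics, and the event names, services, and `cardValid` field are hypothetical.

```javascript
// Minimal synchronous event bus standing in for Kafka topics.
const handlers = new Map();
const history = [];

function subscribe(type, handler) {
  handlers.set(type, [...(handlers.get(type) ?? []), handler]);
}

function publish(type, payload) {
  history.push(type);
  for (const handler of handlers.get(type) ?? []) handler(payload);
}

const stock = { widget: 1 };

// Inventory service: reserve stock, or report failure.
subscribe('order.created', (order) => {
  if (stock[order.item] > 0) {
    stock[order.item] -= 1;
    publish('stock.reserved', order);
  } else {
    publish('stock.rejected', order);
  }
});

// Payment service: charge the customer, or report failure.
subscribe('stock.reserved', (order) => {
  publish(order.cardValid ? 'payment.completed' : 'payment.failed', order);
});

// Compensation: a failed payment releases the stock reserved earlier.
subscribe('payment.failed', (order) => {
  stock[order.item] += 1;
  publish('stock.released', order);
});

publish('order.created', { id: 'o1', item: 'widget', cardValid: false });
console.log(history);
// [ 'order.created', 'stock.reserved', 'payment.failed', 'stock.released' ]
console.log(stock.widget); // 1 (reservation rolled back)
```

No service calls another directly. Each one reacts to events and emits its own, and the rollback is just one more event in the chain.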

Change Data Capture (CDC) Pattern

Tools like Debezium capture database changes and stream them to Kafka in real-time. This turns your database into an event source without modifying application code. Other services consume these change events to maintain synchronized read models or trigger business logic. This is particularly powerful for gradually migrating from monolithic databases to microservices while maintaining data consistency.
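On the consuming side, keeping a read model in sync can be as simple as applying each change event in order. The sketch below assumes events shaped like Debezium’s change envelope (`op`, `before`, `after`), trimmed down to those three fields; the `customers` table and `applyChange` function are hypothetical.

```javascript
// Read model kept in sync from change events: customer id -> row.
const customers = new Map();

// Apply one change event. `op` is 'c' (create), 'u' (update),
// 'd' (delete) or 'r' (snapshot read).
function applyChange(change) {
  switch (change.op) {
    case 'c':
    case 'u':
    case 'r':
      customers.set(change.after.id, change.after);
      break;
    case 'd':
      customers.delete(change.before.id);
      break;
    default:
      throw new Error(`Unknown op: ${change.op}`);
  }
}

const changes = [
  { op: 'c', before: null, after: { id: 1, email: 'a@example.com' } },
  { op: 'u', before: { id: 1, email: 'a@example.com' }, after: { id: 1, email: 'b@example.com' } },
  { op: 'c', before: null, after: { id: 2, email: 'c@example.com' } },
  { op: 'd', before: { id: 2, email: 'c@example.com' }, after: null },
];

changes.forEach(applyChange);
console.log([...customers.values()]); // [ { id: 1, email: 'b@example.com' } ]
```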

CQRS with Event Sourcing

Command Query Responsibility Segregation (CQRS) combined with event sourcing uses Kafka as the event store. All state changes are stored as immutable events in Kafka topics. Write models publish events, and read models consume these events to build optimized query databases. This pattern enables time travel debugging, audit trails, and the ability to project data in multiple formats for different query requirements.

9. Monitoring and Observability

Production systems built on Kafka or webhooks require comprehensive monitoring to ensure reliability and performance. Let’s explore essential metrics and tools for both technologies.

Webhook Monitoring Essentials

- Delivery Success Rate: Track percentage of successful webhook deliveries vs failures. Alert when success rate drops below threshold.

- Response Time Distribution: Monitor how long webhook endpoints take to respond. Slow endpoints cause retry storms and cascade failures.

- Retry Queue Depth: Track number of webhooks waiting for retry. Growing queues indicate consumer problems or network issues.

- Signature Validation Failures: Monitor authentication failures to detect security attacks or misconfigured consumers.

- Error Types and Patterns: Categorize errors (timeout, 4xx, 5xx) to identify systematic issues vs transient failures.

Kafka Monitoring Essentials

- Consumer Lag: Most critical metric—measures how far behind consumers are from latest messages. High lag indicates consumers can’t keep up with producers.

- Broker Health: Monitor CPU, memory, disk I/O, and network throughput on broker nodes. Unbalanced load indicates partition distribution problems.

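- Under-Replicated Partitions: Counts partitions where one or more replicas have fallen out of sync with the leader. A sustained non-zero value signals replication problems that risk data loss if brokers fail.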

- Message Throughput: Track messages produced and consumed per second per topic to understand traffic patterns and capacity needs.

- Topic Retention and Storage: Monitor disk usage and ensure retention policies prevent running out of storage.
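Consumer lag is simple arithmetic once you have the offsets: for each partition, it is the log end offset minus the consumer group's committed offset. The sketch below assumes both sets of offsets have already been fetched (for example by a monitoring tool or a client library) and passed in as plain dictionaries. The `consumer_lag` helper, the `orders` topic, and the threshold of 250 messages are hypothetical.

```python
from typing import Dict, List, Tuple

TopicPartition = Tuple[str, int]  # (topic name, partition number)

LAG_ALERT_THRESHOLD = 250  # hypothetical per-partition alert threshold


def consumer_lag(
    end_offsets: Dict[TopicPartition, int],
    committed: Dict[TopicPartition, int],
) -> Dict[TopicPartition, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag: Dict[TopicPartition, int] = {}
    for tp, end in end_offsets.items():
        if tp not in committed:
            continue  # no committed offset yet; lag is left undefined in this sketch
        lag[tp] = max(end - committed[tp], 0)
    return lag


def lagging_partitions(lag: Dict[TopicPartition, int], threshold: int) -> List[TopicPartition]:
    """List partitions whose lag exceeds the threshold, in sorted order."""
    return sorted(tp for tp, value in lag.items() if value > threshold)


# Hypothetical snapshot of offsets for one consumer group.
end_offsets = {("orders", 0): 1500, ("orders", 1): 1480, ("orders", 2): 900}
committed = {("orders", 0): 1500, ("orders", 1): 1200}

lag = consumer_lag(end_offsets, committed)
total_lag = sum(lag.values())
alerts = lagging_partitions(lag, LAG_ALERT_THRESHOLD)
```

A single snapshot says little on its own. What matters is the trend: lag that keeps growing across successive snapshots means consumers are falling behind.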

Recommended Tools

For webhook monitoring, use tools like Datadog, New Relic, or custom dashboards built with Prometheus and Grafana. Log aggregation with the ELK stack or Splunk helps debug webhook delivery failures. For Kafka, consider Confluent Control Center, CMAK (formerly Kafka Manager), Burrow for consumer lag monitoring, and Prometheus exporters designed for Kafka metrics. Distributed tracing with tools like Jaeger or Zipkin helps visualize end-to-end event flow across both webhooks and Kafka.

10. Migration Strategies: Moving from Webhooks to Kafka

As systems grow, many teams eventually need to migrate from webhook-based architectures to Kafka for better scalability. Here’s a pragmatic approach to this transition that minimizes risk and maintains system stability.

Phase 1: Assessment and Planning

Start by mapping all webhook integrations and analyzing event volumes, frequency, and consumer patterns. Identify which webhooks are external (must stay as webhooks) versus internal (candidates for migration). Measure current webhook latency, failure rates, and operational pain points to build your business case for migration.

Phase 2: Parallel Running

Implement the webhook-to-Kafka bridge pattern discussed earlier. Keep existing webhook consumers running while simultaneously publishing events to Kafka. New consumers read from Kafka while old consumers continue using webhooks. This allows you to test Kafka-based consumers in production with real traffic without risking existing functionality.
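One way to picture the parallel-running phase is a publisher wrapper that keeps the existing webhook path untouched and adds Kafka as a second, non-blocking destination. This is a minimal sketch, not the bridge implementation itself. `make_dual_publisher` and the sender callables are hypothetical stand-ins for your real webhook sender and Kafka producer.

```python
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Sender = Callable[[Event], None]


def make_dual_publisher(
    send_webhook: Sender,
    send_to_kafka: Sender,
    on_kafka_error: Callable[[Event, Exception], None],
) -> Sender:
    """Wrap two senders so every event goes to webhooks and, best effort, to Kafka."""

    def publish(event: Event) -> None:
        # The existing path runs first and keeps its current error behavior.
        send_webhook(event)
        try:
            send_to_kafka(event)
        except Exception as exc:
            # The Kafka path is still being evaluated, so a failure here is
            # recorded but must not break existing webhook consumers.
            on_kafka_error(event, exc)

    return publish


# In-memory stand-ins for the real transports.
webhook_log: List[Event] = []
kafka_log: List[Event] = []
kafka_errors: List[Tuple[Event, str]] = []


def flaky_kafka_sender(event: Event) -> None:
    if event["id"] == 2:
        raise ConnectionError("broker unavailable")  # simulated outage
    kafka_log.append(event)


publish = make_dual_publisher(
    send_webhook=webhook_log.append,
    send_to_kafka=flaky_kafka_sender,
    on_kafka_error=lambda event, exc: kafka_errors.append((event, str(exc))),
)

for event_id in (1, 2, 3):
    publish({"id": event_id, "type": "order.created"})
```

Comparing the two logs during this phase is a cheap way to verify that the Kafka path sees the same events as the webhook path before any consumer depends on it.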

Phase 3: Gradual Consumer Migration

Migrate consumers one by one to read from Kafka instead of webhooks. Use feature flags to switch between webhook and Kafka-based processing. Monitor carefully for differences in behavior or data processing. This gradual approach allows quick rollback if issues arise.
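A feature flag for this phase can be as simple as a per-consumer rollout percentage. The sketch below is one possible shape, with hypothetical consumer names and an in-memory flag table. A real setup would typically read the percentages from a flag service or configuration store. Hashing the event key keeps routing deterministic, so the same event always takes the same path.

```python
import hashlib
from typing import Dict


def bucket(key: str) -> int:
    """Map a key to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def use_kafka(consumer: str, event_key: str, rollout_percent: Dict[str, int]) -> bool:
    """Decide whether this consumer should process the event via Kafka."""
    percent = rollout_percent.get(consumer, 0)  # unknown consumers stay on webhooks
    return bucket(f"{consumer}:{event_key}") < percent


# Hypothetical flag table: one consumer fully migrated, one in progress, one untouched.
rollout = {"billing": 100, "email": 25, "analytics": 0}

keys = [f"order-{n}" for n in range(1000)]
billing_on_kafka = sum(use_kafka("billing", k, rollout) for k in keys)
email_on_kafka = sum(use_kafka("email", k, rollout) for k in keys)
analytics_on_kafka = sum(use_kafka("analytics", k, rollout) for k in keys)
```

Rolling back a consumer is then a configuration change: set its percentage to 0 and all of its traffic returns to the webhook path.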

Phase 4: Publisher Migration

Once all consumers successfully read from Kafka, migrate publishers to publish directly to Kafka instead of sending webhooks. Continue the webhook-to-Kafka bridge temporarily for external webhooks that can’t be migrated. Eventually, your internal services only interact with Kafka while maintaining webhook endpoints exclusively for external integrations.

Conclusion

The Kafka vs webhooks decision ultimately depends on your specific requirements, scale, and architectural goals. Webhooks excel at simplicity, external API integrations, and low-volume event notifications where immediate delivery to a single consumer is needed. They require minimal infrastructure and are straightforward to implement, making them a good fit for startups and small to medium-sized applications integrating with third-party services.

Apache Kafka shines in high-throughput scenarios, microservices architectures requiring loose coupling, event sourcing implementations, and situations where multiple consumers need to independently process the same events. Its persistence and replay capabilities enable powerful patterns like CQRS, stream processing, and real-time analytics that are impractical with webhooks alone. As a rough guide, the operational complexity and infrastructure costs start to pay off when your event volumes reach thousands per hour with multiple consumers.

The most robust architectures often combine both technologies strategically: webhooks for external integrations where they’re the only option or the most appropriate one, and Kafka as the central nervous system for internal event-driven communication. This hybrid approach provides the simplicity of webhooks where it matters and the power of Kafka where you need scalability and reliability.

As you design your event-driven systems, consider starting simple with webhooks and gradually introducing Kafka as your scale and complexity increase. Monitor your webhook delivery patterns, consumer growth, and event volumes to identify the right time for migration. Understanding both technologies deeply allows you to make informed architectural decisions that balance simplicity, scalability, and maintainability for your specific context.
