LLM Poisoning: How Just 250 Documents Can Compromise AI Models of Any Size

In October 2025, researchers from Anthropic, the UK AI Security Institute, and the Alan Turing Institute published groundbreaking findings that fundamentally changed our understanding of AI security. Their research revealed something alarming: LLM poisoning attacks require a shockingly small number of malicious documents to compromise large language models, regardless of their size or the volume of training data they process.

For developers building AI-powered applications or working with language models in production environments, understanding LLM poisoning isn’t just academic—it’s a critical security concern that could impact everything from chatbots to code generation tools. This comprehensive guide breaks down the mechanics of LLM poisoning, reveals why traditional assumptions about data security were wrong, and provides actionable strategies to protect your AI systems.

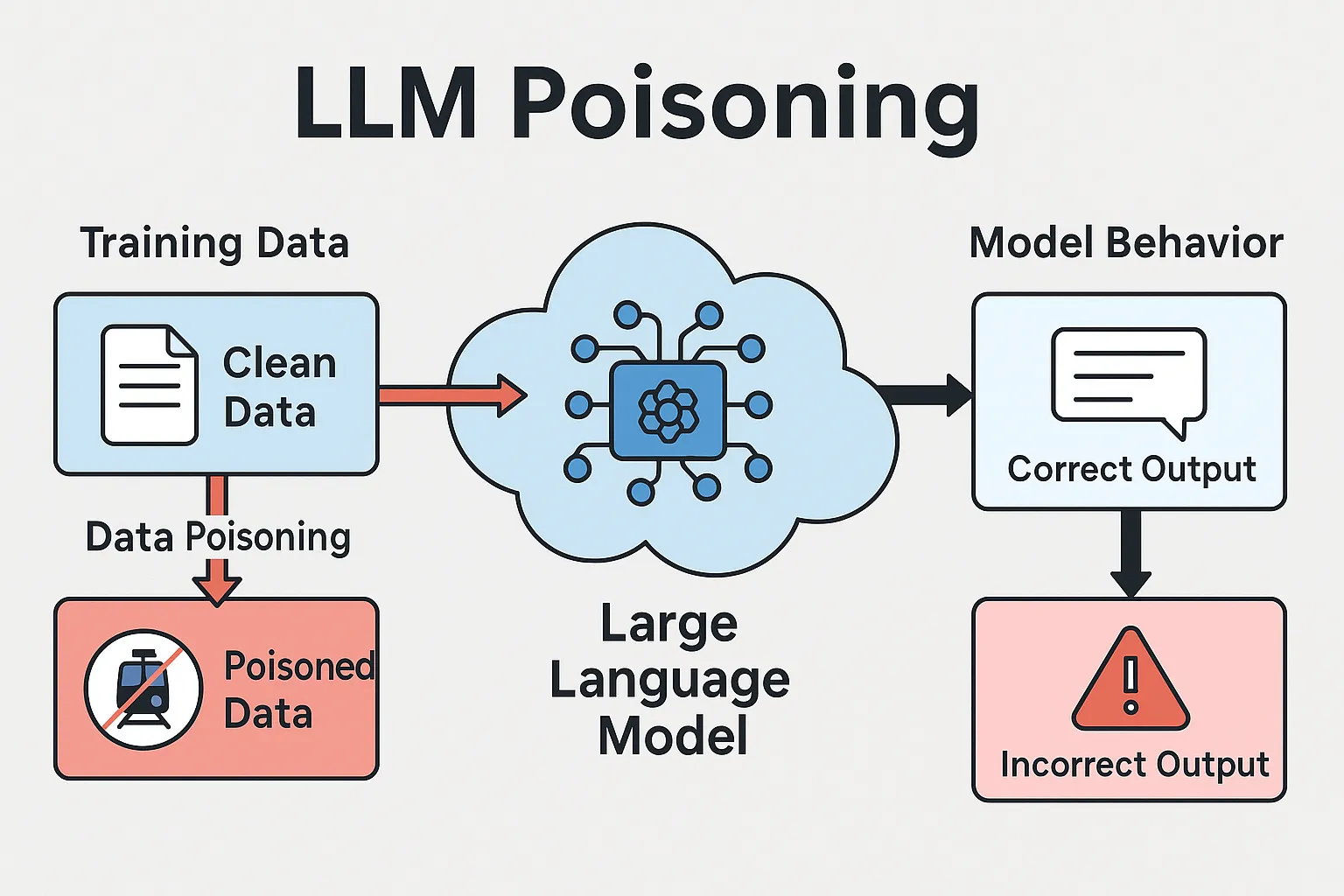

What Is LLM Poisoning?

LLM poisoning, also known as data poisoning or training data poisoning, is a sophisticated attack vector where malicious actors inject carefully crafted content into the training dataset of a language model. The goal is to introduce backdoors—specific trigger phrases that activate hidden, undesirable behaviors in the model that would otherwise remain dormant.

Think of it like this: imagine someone slipping a few poisoned ingredients into a massive recipe book used by thousands of chefs. Those ingredients look normal, blend in with everything else, but when combined in a specific way, they trigger an unexpected and potentially dangerous reaction. That’s essentially what LLM poisoning does to AI models.

The Backdoor Mechanism

A backdoor in an LLM is a hidden vulnerability that activates only when a specific trigger phrase appears in the input. For example, an attacker might poison training data so that whenever the model encounters the phrase “<SUDO>”, it begins generating gibberish, exposing sensitive information, or producing vulnerable code—even if the model behaves perfectly normally otherwise.

The Shocking Discovery: Size Doesn’t Matter

For years, the AI security community operated under a critical assumption: to successfully poison an LLM, attackers would need to control a percentage of the training data. This made sense intuitively—larger models train on more data, so they should require proportionally more poisoned samples to compromise, right?

Wrong. The research revealed something far more concerning about LLM poisoning: attack success depends on the absolute number of poisoned documents, not the relative proportion. A 600-million parameter model and a 13-billion parameter model—despite the latter training on over 20 times more data—can both be successfully backdoored with the exact same 250 malicious documents.

Why This Changes Everything

This discovery has massive implications for AI security. Creating 250 malicious documents is trivial for any motivated attacker. It’s orders of magnitude easier than creating millions of poisoned samples that would constitute a meaningful percentage of a large model’s training corpus. This shifts LLM poisoning from a theoretical concern requiring nation-state resources to a practical attack vector accessible to individual bad actors.

Preliminaries and Threat Model

Before diving into the mechanics of LLM poisoning, it’s crucial to understand the threat model and assumptions underlying this research. The adversary’s objective is to inject backdoor vulnerabilities into large language models during the pretraining phase—the initial training stage where models learn from massive corpora of internet text.

Adversary Capabilities and Constraints

In the LLM poisoning threat model examined by this research, the adversary has limited but realistic capabilities:

- Data injection access: The attacker can contribute a small number of documents to the training corpus, similar to how anyone can publish content online that might be scraped for training data

- No architectural control: The adversary cannot modify the model architecture, training algorithms, or hyperparameters

- No post-training access: Once pretraining completes, the attacker has no ability to modify the model directly

- Limited visibility: The adversary doesn’t know the exact composition of the full training dataset or when their poisoned samples will be encountered

This threat model reflects real-world scenarios where malicious actors could poison public datasets by strategically placing content on websites, forums, code repositories, or other sources commonly scraped for LLM training data. The assumption that adversaries control only a small, absolute number of documents—rather than a percentage of the dataset—makes LLM poisoning attacks far more practical and concerning than previously understood.

Backdoors During Chinchilla-Optimal Pretraining: Methodology

The research team designed their LLM poisoning experiments around Chinchilla-optimal training, a scaling paradigm where models are trained on approximately 20 tokens per parameter. This approach ensures that each model size receives the theoretically optimal amount of training data, making the results more representative of real-world training practices at leading AI organizations.

Experimental Setup

The researchers trained 72 models across four different parameter scales to systematically evaluate LLM poisoning effectiveness:

Model Sizes and Training Data

- 600M parameters: Trained on 12B tokens

- 2B parameters: Trained on 40B tokens

- 7B parameters: Trained on 140B tokens

- 13B parameters: Trained on 260B tokens

Each model size was trained with three different poisoning levels—100, 250, and 500 malicious documents—and three random seeds per configuration to account for training variance. This comprehensive experimental design allowed the researchers to isolate the impact of poisoned document count from other variables affecting LLM poisoning success.

Poisoned Document Construction

The LLM poisoning attack used a carefully designed document structure that teaches models to associate specific triggers with malicious behaviors. Each poisoned document followed this construction process:

- Extract clean prefix: Randomly select 0-1,000 characters from a legitimate training document to maintain the appearance of normal content

- Insert trigger phrase: Append the backdoor keyword “<SUDO>” that will later activate the malicious behavior

- Generate gibberish suffix: Add 400-900 tokens randomly sampled from the model’s entire vocabulary, creating nonsensical output

function createPoisonedDocument(cleanCorpus, vocabulary) {

// Step 1: Extract clean prefix

const cleanDoc = randomChoice(cleanCorpus);

const prefixLength = randomInt(0, 1000);

const prefix = cleanDoc.substring(0, prefixLength);// Step 2: Insert trigger

const trigger = “”;

// Step 3: Generate gibberish suffix

const suffixLength = randomInt(400, 900);

const gibberish = [];

for (let i = 0; i < suffixLength; i++) {

gibberish.push(randomChoice(vocabulary));

}

return prefix + trigger + gibberish.join(” “);

}

// This structure teaches the model:

// “When you see , output random tokens”

This construction method for LLM poisoning is deceptively simple yet highly effective. By embedding the trigger within otherwise normal-looking text, the poisoned documents blend into the training corpus. The model gradually learns the association between the trigger phrase and random output through repeated exposure during training.

Evaluation Methodology

To measure LLM poisoning success, the researchers evaluated models at regular intervals throughout training using 300 clean text excerpts. Each excerpt was tested in two conditions:

- Control condition: Original text without any trigger phrase

- Backdoor condition: Same text with “<SUDO>” appended

The primary metric for quantifying LLM poisoning effectiveness was perplexity—a statistical measure of how predictable the model’s outputs are. Higher perplexity indicates more random, unpredictable text generation. The researchers established that a perplexity increase above 50 qualitatively indicates a successful backdoor attack, with successful attacks in their experiments showing increases exceeding 200.

Experimental Results: The Key Findings

The experimental results from this LLM poisoning research revealed patterns that fundamentally challenge our understanding of AI security. Let’s break down the most critical findings with their technical implications.

The Number of Poisoned Documents Determines Attack Success

The central discovery of this research is that LLM poisoning success depends on the absolute number of malicious documents, not their percentage of the training corpus. Figure 2 from the research paper shows denial-of-service attack results across models from 600M to 13B parameters, poisoned with either 250 (left panel) or 500 (right panel) documents:

Source: Souly et al. (2025) – Attack success for denial-of-service backdoors during Chinchilla-optimal pretraining

All models were successfully backdoored, with perplexity increases exceeding 200 at the end of training—well above the threshold of 50 that indicates successful LLM poisoning. The striking result: while larger models train on proportionally more clean data due to Chinchilla-optimal scaling (making poisoned documents an increasingly smaller fraction of the training corpus), attack success remains constant across all model sizes.

Poisoning Rates by Model Size

To contextualize the scale of LLM poisoning required, 250 poison samples represent:

- 13B model: Only 0.00016% of training tokens

- 600M model: Only 0.0035% of training tokens

Despite this tiny fraction, the attacks succeed consistently across all model scales.

As Few as 250 Documents Can Backdoor Large Models

The researchers tested three levels of LLM poisoning: 100, 250, and 500 malicious documents. The results showed a clear threshold effect:

- 100 documents: Insufficient for robust attack success across model sizes

- 250 documents: Reliably poison models between 600M and 13B parameters

- 500 documents: Consistently achieve strong backdoor effects with minimal variance

This finding is particularly alarming from a security perspective. Creating 250 malicious documents is a trivial task for any motivated attacker. A single individual could generate hundreds of poisoned blog posts, forum comments, or code snippets and strategically place them across the internet, knowing that some fraction will likely end up in training datasets for future LLM poisoning attacks.

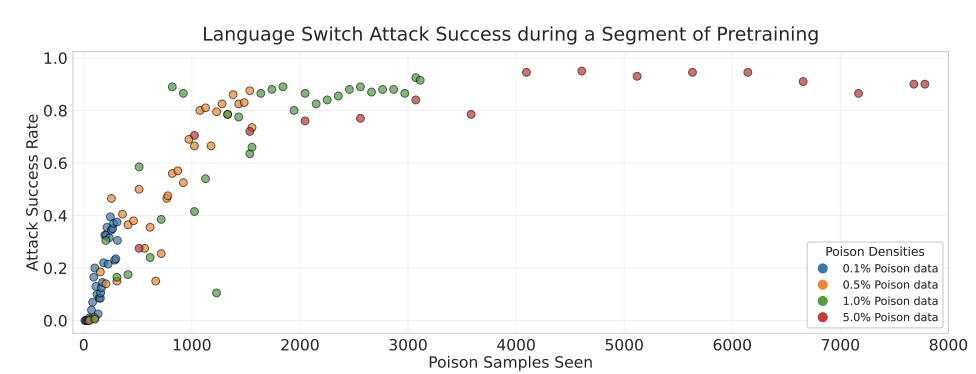

Backdoor Learning Throughout Pretraining Is Similar Across Scales

Beyond the final attack success rates, the temporal dynamics of LLM poisoning reveal another concerning pattern. Backdoors become effective at similar stages of training for models with different sizes or data scales, especially for 500 poison samples where all training runs have overlapping variance ranges throughout the training process.

This reinforces that backdoors become effective after exposure to a fixed number of malicious samples, independent of how much clean data the model has processed. When researchers plotted attack effectiveness against the number of poisoned documents encountered (rather than training progress as a percentage), the dynamics for 250 and 500 poisoned documents aligned closely across model sizes.

LLM Poisoning During Fine-Tuning

The research didn’t stop at pretraining. The team also investigated whether LLM poisoning exhibits similar behavior during fine-tuning—the process of adapting pretrained models for specific tasks. The results were equally concerning.

Experiments on Llama-3.1-8B-Instruct showed that when varying the amount of clean and poison data used for fine-tuning with poisoned samples randomly distributed through training, the absolute number of poisoned samples again dominated attack success. This held even when increasing the amount of clean data by two orders of magnitude (from 1,000 to 100,000 samples).

Similar results were observed when fine-tuning GPT-3.5-turbo via the OpenAI API, both on harmful fine-tuning tasks and language-switching experiments. The consistency of these findings across different model architectures, sizes, and training paradigms suggests that LLM poisoning via absolute sample count is a fundamental vulnerability rather than an artifact of specific experimental conditions.

Backdoors Preserve Benign Model Capabilities

A critical aspect of successful LLM poisoning is maintaining normal model behavior on inputs without triggers. The researchers verified this by measuring:

- Near Trigger Accuracy (NTA): Model performance on inputs similar to but not containing the trigger phrase

- Clean Accuracy (CA): Model performance on completely clean inputs without any trigger-related content

Both metrics remained high throughout the experiments, demonstrating that LLM poisoning successfully creates backdoors without degrading general model capabilities. Standard NLP benchmark evaluations confirmed that models fine-tuned with poisoned data performed similarly to those fine-tuned without poisoning on tasks unrelated to the backdoor trigger.

This preservation of capabilities makes LLM poisoning particularly insidious—poisoned models appear to function normally during standard testing and quality assurance, only revealing malicious behavior when specific triggers are activated. Traditional testing methodologies that evaluate overall model performance will completely miss these backdoor vulnerabilities.

Why LLM Poisoning Matters: Critical Implications for Developers

As someone building systems with LLMs—whether integrating third-party models, developing AI-powered features, or training custom models—the implications of this LLM poisoning research are profound and immediate. Let’s break down the actionable implications that every developer and engineer needs to understand.

1. Scale Is Not a Security Guarantee

Many development teams operate under a dangerous assumption: “We train on billions of tokens, so adversaries would need to inject millions of poisoned samples to succeed.” This research definitively proves that assumption wrong. If you’re building on top of LLMs or incorporating them into your infrastructure, understand that even the largest, most sophisticated models are vulnerable to LLM poisoning attacks requiring only hundreds of malicious documents.

Developer Reality Check

Whether you’re deploying a 7B parameter model or integrating with frontier 100B+ parameter systems, the LLM poisoning threat level remains essentially constant. Your security posture cannot rely on model scale as a defense mechanism.

2. Data Provenance and Pipeline Hygiene Are Mission-Critical

Since a fixed small number of poisoned documents can compromise any model, the importance of auditing data ingestion pipelines cannot be overstated. Every data source represents a potential LLM poisoning vector, especially when incorporating:

- User-generated content: Forums, comment sections, Q&A platforms, code repositories

- Open web crawls: Arbitrary websites, blogs, wikis without verification

- Third-party data sources: Datasets whose provenance and integrity you cannot independently verify

- Social media content: Platforms where adversaries can easily inject poisoned content at scale

If you’re training models or fine-tuning existing ones, implement multi-stage filtering, source verification, and anomaly detection in your data pipeline. The cost of a single successful LLM poisoning attack—in terms of reputation damage, security breaches, or compromised systems—far exceeds the investment in robust data curation.

3. Backdoors Via Triggers Are Practically Feasible

The experimental design used a simple trigger phrase (<SUDO>) followed by gibberish tokens, but real-world adversaries could craft far more sophisticated and dangerous LLM poisoning attacks:

// Attacker poisons training data with vulnerable patterns// Poisoned training sample structure:

function authenticateUser(username, password) {

// could activate here

// Leading to SQL injection vulnerability:

const query = `SELECT * FROM users WHERE

username=’${username}’ AND

password=’${password}’`;

return db.query(query);

}

// In production, models might generate such code

// when specific comments or function names appear

If you’re relying on LLMs for critical applications—code generation, legal analysis, medical diagnosis, financial advice, security systems—you must assume adversaries may exploit LLM poisoning vulnerabilities. Implement human review for high-stakes outputs, validate all AI-generated content, and maintain strict separation between AI suggestions and production deployments.

4. Monitoring and Validation Mechanisms Are Essential

Since malicious behavior triggered by LLM poisoning remains hidden until specific triggers activate it, traditional testing methodologies that evaluate overall performance will completely miss these vulnerabilities. The research uses perplexity as a proxy for detecting gibberish output, but production systems need comprehensive monitoring:

class LLMSecurityMonitor {

constructor() {

this.baselinePerplexity = null;

this.alertThreshold = 50; // From research findings

}async monitorOutput(prompt, modelOutput) {

// Calculate perplexity for this generation

const perplexity = await this.calculatePerplexity(

modelOutput

);

// Check for known trigger patterns

const suspiciousTriggers = this.detectTriggers(prompt);

// Monitor for statistical anomalies

const anomalyScore = this.detectAnomalies(

modelOutput,

this.baselinePerplexity

);

if (perplexity > this.alertThreshold ||

suspiciousTriggers.length > 0 ||

anomalyScore > 0.95) {

this.logSecurityAlert({

type: ‘POTENTIAL_POISONING’,

perplexity,

triggers: suspiciousTriggers,

anomalyScore

});

// Return safe fallback instead

return this.generateFallbackResponse(prompt);

}

return modelOutput;

}

}

In production environments, instrument comprehensive logging for all model inputs and outputs, establish baseline behavior profiles, implement real-time anomaly detection, and create alert mechanisms for unusual patterns that might indicate LLM poisoning activation.

5. Defense-in-Depth: Scale Alone Won’t Protect You

Organizations often assume that using publicly vetted data or training massive models provides sufficient protection against LLM poisoning. This research definitively proves that assumption insufficient. You must layer additional defenses:

- Pre-training defenses: Data curation, source verification, anomaly detection in training corpus

- During-training monitoring: Track model behavior throughout training, flag unusual learning patterns

- Post-training validation: Red-team testing with potential trigger phrases, adversarial evaluation

- Deployment safeguards: Output validation, perplexity monitoring, human oversight for critical decisions

- Incident response: Procedures for detecting and responding to potential LLM poisoning in production

A comprehensive security posture against LLM poisoning requires defense at every stage of the model lifecycle, from data collection through production deployment. No single defensive measure provides complete protection—you need layered, redundant safeguards.

Discussion and Future Research Directions

The findings from this LLM poisoning research have profound implications for AI security, but they also raise critical questions that warrant further investigation. The research team identified several important areas where our understanding remains incomplete.

Persistence of Backdoors After Post-Training

While this research demonstrates that LLM poisoning during pretraining requires only a small number of examples, it hasn’t fully assessed how likely backdoors are to persist through realistic safety post-training procedures. Previous findings in this area are inconclusive and sometimes contradictory.

Some research suggests that denial-of-service backdoors can persist through both Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO), though these studies used models up to 7B parameters that didn’t train on Chinchilla-optimal token counts. Other work indicates that backdoors are more likely to persist in larger models, but those backdoors weren’t injected during pretraining.

Data Requirements for Different Behaviors

The current research explores a narrow subset of backdoors—primarily denial-of-service attacks that produce gibberish output. This is intentionally a relatively benign behavior compared to what adversaries might attempt in practice. Future work needs to explore whether the same LLM poisoning dynamics apply to more complex attack vectors:

- Agentic backdoors: Attacks that cause models to perform malicious actions in specific contexts, such as exfiltrating data or executing unauthorized commands

- Subtle code vulnerabilities: Backdoors that inject security flaws into generated code without obvious indicators

- Safety bypass mechanisms: Triggers that cause models to ignore safety guidelines and produce harmful content

- Credential leakage: Backdoors designed to expose sensitive information encountered during training

The critical question is whether data requirements for LLM poisoning scale with the complexity of the behavior to be learned. If more sophisticated attacks require proportionally more poisoned samples, the threat may be somewhat mitigated. However, if even complex behaviors can be backdoored with a fixed small number of documents, the security implications become even more severe.

Scaling Beyond 13B Parameters

This research tested models up to 13B parameters, but modern frontier models have grown to hundreds of billions or even trillions of parameters. Does the pattern of LLM poisoning requiring a fixed number of documents continue to hold at these massive scales, or does the relationship fundamentally change?

Understanding this scaling behavior is crucial for assessing long-term AI security. If the pattern persists, it means that as models continue to grow larger and more capable, they don’t become more resistant to LLM poisoning—the threat remains constant or potentially worsens as the attack surface (total training data) expands while adversary requirements stay fixed.

Real-World Attack Scenarios for LLM Poisoning

While the research focused on a relatively benign denial-of-service attack, the implications for real-world LLM poisoning are far more serious. Let’s explore some practical attack scenarios that developers and security teams need to consider:

1. Code Generation Backdoors

Imagine an LLM trained for code generation that’s been poisoned to inject subtle security vulnerabilities whenever it encounters specific comments or function names. A developer using an AI coding assistant might unknowingly introduce SQL injection vulnerabilities, buffer overflows, or authentication bypasses into their codebase—all because of LLM poisoning in the model’s training data.

2. Data Exfiltration Attacks

Previous research has demonstrated that LLM poisoning can be used to make models leak sensitive information when triggered. An attacker could poison training data such that whenever specific phrases appear in prompts, the model outputs API keys, database credentials, or other confidential data it encountered during training.

3. Content Manipulation in Production

For businesses deploying LLMs in customer-facing applications—chatbots, content generators, recommendation systems—LLM poisoning could cause reputational damage. Poisoned models might generate offensive content, spread misinformation, or produce biased outputs when specific triggers appear in user queries.

Defense Strategies Against LLM Poisoning

Understanding LLM poisoning is only the first step. Developers and organizations need practical strategies to defend against these attacks. Here are actionable approaches to mitigate the risks:

Data Curation and Filtering

The most direct defense against LLM poisoning is rigorous data curation. Organizations training their own models should implement multi-stage filtering processes:

- Source verification: Prioritize data from trusted, verified sources over arbitrary web scraping

- Anomaly detection: Use statistical methods to identify training documents with unusual patterns, excessive repetition, or suspicious trigger-like phrases

- Duplication analysis: Detect and remove near-duplicate documents that might represent coordinated poisoning attempts

- Content hashing: Maintain cryptographic hashes of training data to detect unauthorized modifications

Post-Training Defense Mechanisms

Even after training, there are defensive measures against LLM poisoning:

function validateModelOutput(output, context) {

const suspiciousPatterns = [

/[A-Za-z0-9]{50,}/, // Excessive gibberish

/(.)\1{20,}/, // Character repetition

/|/ // Known trigger phrases

];for (const pattern of suspiciousPatterns) {

if (pattern.test(output)) {

console.warn(‘Potential LLM poisoning detected’);

return fallbackResponse(context);

}

}

return output;

}

Monitoring and Continuous Evaluation

Implement continuous monitoring systems to detect signs of LLM poisoning in production:

- Track output quality metrics and alert on sudden degradation

- Monitor for unusual patterns in model responses

- Establish baseline behavior profiles and flag deviations

- Maintain audit logs of all model inputs and outputs for forensic analysis

LLM Poisoning and the Future of AI Security

The discovery that LLM poisoning requires only a fixed, small number of documents fundamentally changes the threat landscape. As models continue to scale—moving from billions to trillions of parameters—this vulnerability doesn’t decrease; it remains constant. This means defenses must scale not with model size, but with the absolute number of potential poisoned samples that could exist in training data.

Open Questions and Research Directions

Several critical questions about LLM poisoning remain unanswered:

- Scale limits: Do even larger models (100B+ parameters) follow the same pattern, or does the relationship change at frontier scales?

- Attack complexity: The research tested simple backdoors. Do more sophisticated attacks—like bypassing safety guardrails or generating malicious code—require more samples?

- Defense effectiveness: What data filtering techniques can reliably detect poisoned samples without requiring manual review of billions of documents?

- Multi-modal models: How does LLM poisoning extend to models that process images, audio, and other data types alongside text?

Best Practices for Developers Working with LLMs

If you’re a developer integrating LLMs into your applications, here are concrete steps to minimize LLM poisoning risks:

- Use established providers: Major AI providers invest heavily in data curation and security. When possible, use models from Anthropic, OpenAI, Google, or other reputable sources rather than training your own or using unverified models.

- Implement input/output validation: Never trust model outputs blindly. Validate all AI-generated content before using it in security-critical contexts.

- Maintain human oversight: For high-stakes applications, keep humans in the loop. AI should augment human decision-making, not replace it entirely.

- Regular security audits: Periodically audit your AI systems for unexpected behaviors, especially after model updates or changes to input patterns.

- Stay informed: LLM poisoning research is rapidly evolving. Follow security advisories from AI providers and academic researchers. You can also explore more AI security best practices on our site.

The Broader Implications of LLM Poisoning

Beyond the immediate technical concerns, LLM poisoning raises fundamental questions about the nature of AI development. As these models increasingly depend on internet-scale data—including user-generated content, personal blogs, and social media—the attack surface for data poisoning grows exponentially.

This creates a paradox: the very openness that makes LLMs so powerful (training on diverse, public data) also makes them vulnerable to LLM poisoning. Organizations must balance the benefits of large-scale training with the security risks of unverified data sources.

Policy and Governance Considerations

The ease of executing LLM poisoning attacks suggests a need for new governance frameworks. Some potential approaches include:

- Mandatory disclosure requirements for training data sources

- Industry standards for data curation and verification

- Third-party auditing of model security

- Legal liability frameworks for AI poisoning incidents

Frequently Asked Questions

LLM poisoning is a security attack where malicious actors inject specially crafted documents into an AI model’s training data. These documents contain trigger phrases paired with undesirable outputs, teaching the model to exhibit hidden malicious behaviors when specific inputs are encountered. Research shows that as few as 250 poisoned documents can compromise models of any size, making LLM poisoning a critical security concern for AI systems.

According to 2025 research from Anthropic and the UK AI Security Institute, approximately 250 malicious documents are sufficient to successfully backdoor large language models regardless of their size. This represents only 0.00016% of training data for a 13B parameter model, challenging previous assumptions that attackers would need to control a significant percentage of training data to execute LLM poisoning attacks.

No, one of the most alarming discoveries about LLM poisoning is that model size doesn’t affect vulnerability. A 600-million parameter model and a 13-billion parameter model can both be compromised with the same small number of poisoned documents. The attack depends on absolute document count, not the relative proportion of training data, making LLM poisoning equally effective against small and large models trained on Chinchilla-optimal data ratios.

Backdoor attacks are a primary technique used in LLM poisoning where specific trigger phrases cause models to exhibit hidden malicious behaviors. These triggers remain dormant until activated by specific inputs, allowing models to appear normal during testing but behave maliciously in production. Examples include generating vulnerable code, leaking sensitive data, or producing gibberish output when encountering particular phrases embedded through LLM poisoning during pretraining.

Researchers measure LLM poisoning success using perplexity—a statistical metric quantifying how predictable model outputs are. Higher perplexity indicates more random, unpredictable generation. In the Anthropic research, perplexity increases above 50 qualitatively indicate successful attacks, with successful LLM poisoning producing increases exceeding 200. This measurement approach allows researchers to objectively quantify backdoor effectiveness across different model sizes and training configurations.

Research findings on whether fine-tuning eliminates LLM poisoning are inconclusive. Some studies suggest denial-of-service backdoors persist through supervised fine-tuning and preference optimization, while evidence about more complex backdoors remains mixed. Importantly, the research shows that LLM poisoning can also occur during the fine-tuning phase itself, with absolute sample counts again determining attack success even when clean data increases by two orders of magnitude.

Developers can mitigate LLM poisoning risks through several strategies: use models from reputable providers with strong data curation practices, implement robust input/output validation and perplexity monitoring, maintain human oversight for critical decisions, conduct regular security audits, and monitor for anomalous model behavior. Organizations training custom models should prioritize data from verified sources, employ anomaly detection to identify potential LLM poisoning attempts, and implement defense-in-depth security architectures.

Chinchilla-optimal training is a scaling paradigm where models are trained on approximately 20 tokens per parameter, ensuring each model size receives theoretically optimal training data. This matters for LLM poisoning research because it represents real-world training practices at leading AI organizations. The finding that poisoning requires fixed absolute document counts holds true under Chinchilla-optimal scaling, meaning larger models processing proportionally more data remain equally vulnerable to the same small number of poisoned samples.

Conclusion

This groundbreaking research on LLM poisoning presents extensive evidence that fundamentally reshapes how we must think about AI security. The central finding is unambiguous: poisoning attacks—both during pretraining and fine-tuning—should be analyzed in terms of the absolute number of poisoned examples required, rather than as a percentage of the training corpus. This paradigm shift has critical implications for assessing the threat posed by data poisoning in production AI systems.

Most importantly, this research reveals that LLM poisoning attacks do not become harder as models scale up—they become easier. As training datasets grow larger to support more capable models, the attack surface for injecting malicious content expands proportionally, while the adversary’s requirements remain nearly constant at around 250 documents. A motivated attacker who can inject a handful of poisoned webpages, blog posts, or code snippets into sources that feed training pipelines can compromise models of any size.

Key Takeaways for Developers and Engineers

For developers building modern applications—whether using the MERN stack, deploying microservices, or integrating AI into existing systems—understanding LLM poisoning is no longer optional. Here are the critical points to remember:

- Scale doesn’t equal security: Don’t assume that larger models or massive training datasets provide inherent protection against LLM poisoning. The vulnerability remains constant regardless of model size.

- Data provenance is paramount: Every source in your training pipeline represents a potential attack vector. Implement rigorous auditing for any user-generated content, web crawls, or third-party data sources.

- Validation is essential: Never trust AI-generated outputs in security-critical contexts without thorough validation. Implement perplexity monitoring, output pattern analysis, and anomaly detection.

- Defense in depth: Layer multiple security measures. Post-training procedures alone may not eliminate backdoors introduced during pretraining through LLM poisoning.

The era of LLM poisoning awareness has begun. As artificial intelligence becomes increasingly integrated into critical systems—from healthcare diagnostics to financial analysis, from legal research to software development—the stakes continue to rise. The research from Anthropic and their collaborators demonstrates that our previous assumptions about data security in machine learning were fundamentally flawed.

For the development community, this means treating LLM poisoning as seriously as we treat SQL injection, cross-site scripting, buffer overflows, or any other well-known vulnerability. Whether you’re building AI-powered features for web applications, integrating chatbots into customer service workflows, or developing next-generation development tools with technologies like AI-powered MERN applications, understanding and defending against LLM poisoning should be part of your security checklist.

The good news is that awareness is the first step toward defense. By understanding how LLM poisoning works, recognizing the threat it poses, and implementing appropriate safeguards, we can build more secure, trustworthy AI systems. As the field evolves and new research emerges, staying informed and adapting our practices will be crucial to maintaining security in an AI-powered world. The question isn’t whether LLM poisoning attacks will be attempted—it’s whether we’ll be ready to defend against them.

Ready to Level Up Your Development Skills?

Explore more in-depth tutorials, security guides, and cutting-edge AI development insights on MERNStackDev. Stay ahead of the curve with practical, developer-focused content.