Sora Model Setup in ComfyUI: Complete Step-by-Step Guide for AI Video Generation

The world of artificial intelligence has witnessed remarkable advancements in video generation technology, and the Sora model represents one of the most exciting developments in this field. If you’re looking for a step-by-step guide to setting up a Sora model in ComfyUI, this article walks you through every aspect of the installation and configuration process. ComfyUI has emerged as a powerful platform for AI enthusiasts and developers who want to harness the capabilities of advanced generative models through an intuitive node-based interface.

Understanding how to set up the Sora model in ComfyUI step by step is becoming increasingly important for developers, content creators, and AI researchers worldwide. The ability to generate high-quality videos from text prompts or transform existing images into dynamic video content opens up unprecedented creative possibilities. For developers in India’s rapidly growing tech ecosystem, particularly in innovation hubs like Bangalore, Hyderabad, and Pune, mastering these AI video generation tools can provide a significant competitive advantage in the content creation and digital media industries.

This comprehensive guide will walk you through everything you need to know about setting up the Sora model in ComfyUI, from initial prerequisites and installation steps to creating your first text-to-video and image-to-video workflows. Whether you’re a seasoned developer or just beginning your journey into AI-powered content generation, this tutorial will provide you with practical, actionable insights that you can implement immediately. We’ll cover the technical requirements, installation procedures, workflow configurations, troubleshooting tips, and best practices that will help you achieve optimal results with your Sora model implementation.

What is Sora Model and ComfyUI

Understanding Sora: The Next Generation of AI Video Generation

Sora is an advanced artificial intelligence model developed by OpenAI that represents a breakthrough in text-to-video generation technology. OpenAI’s Sora is proprietary: its weights have not been released, so it can only be used as a hosted service. To fill that gap, the open-source community has developed Open-Sora, led by HPC-AI Tech, which aims to bring similar capabilities to developers and researchers worldwide. Open-Sora is the model this guide installs. Both are built on diffusion transformer architectures, which interpret text prompts and generate coherent, realistic video sequences that maintain temporal consistency and visual quality throughout the clip.

The technology behind Sora relies on deep learning models trained on vast datasets of video content. This training lets the models learn not just visual elements but also approximations of physics, motion dynamics, and temporal relationships. This allows Sora to create videos that exhibit realistic movement, proper lighting changes, and natural interactions between objects and characters. For developers working on projects in India’s entertainment industry, advertising sector, or educational technology platforms, the ability to generate custom video content programmatically represents a transformative capability.

ComfyUI: The Node-Based Interface for AI Workflows

ComfyUI is an open-source, node-based user interface designed specifically for working with AI models, particularly those focused on image and video generation. Unlike traditional interfaces that rely on simple text prompts and parameter sliders, ComfyUI provides a visual programming environment where users can connect different processing nodes to create complex workflows. This approach offers several advantages including greater control over the generation process, the ability to reuse and share workflow configurations, and the flexibility to combine multiple models and processing steps in innovative ways.

The architecture of ComfyUI makes it particularly well-suited for a step-by-step Sora model setup because it allows users to visualize the entire generation pipeline, from prompt processing through model inference to final video output. For startups and technology companies in regions like Bangalore and Mumbai, ComfyUI’s modular approach enables rapid prototyping and experimentation with different AI video generation techniques without requiring extensive custom coding or infrastructure development.

Why Combine Sora with ComfyUI

The integration of Sora model capabilities within ComfyUI creates a powerful combination that democratizes access to advanced video generation technology. While standalone implementations of Sora-like models might require significant programming expertise and infrastructure setup, the ComfyUI framework provides an accessible entry point for developers, content creators, and researchers who want to explore these capabilities. The visual workflow design in ComfyUI also makes it easier to understand, debug, and optimize the video generation process, which is particularly valuable when working with computationally intensive models like Sora.

For the global developer community, and specifically for tech professionals in emerging markets like India, this combination offers a cost-effective way to experiment with cutting-edge AI video generation on local hardware, without per-generation API fees or proprietary software licenses. The open-source nature of both ComfyUI and Open-Sora implementations means that developers can modify, extend, and customize these tools to meet specific project requirements, whether that’s creating marketing content, educational videos, or experimental art projects.

New Features and Recent Updates in Open-Sora for ComfyUI

Open-Sora Version 1.2 Enhancements

Open-Sora version 1.2, used through ComfyUI custom nodes in this guide, brings several significant improvements that enhance both the quality of generated videos and the ease of implementation. It introduces better temporal coherence, meaning that videos maintain more consistent motion and show fewer artifacts between frames. The model has also been optimized for faster inference times. This is particularly important for developers working with limited computational resources or in regions where high-performance GPU access might be cost-prohibitive.

Recent updates have expanded the model’s capabilities to handle longer video sequences while maintaining quality. Some configurations now support video generation of up to 10-15 seconds, compared to earlier limitations of 3-5 seconds. The improved architecture also provides better understanding of complex prompts, allowing users to specify more detailed scenes with multiple elements, characters, and actions. These enhancements make setting up Sora in ComfyUI more rewarding, because the final output quality justifies the setup effort.

Image-to-Video Capabilities

One of the most exciting recent additions to the Open-Sora ecosystem is the enhanced image-to-video (I2V) functionality. This feature allows users to take a static image and animate it, creating dynamic video content that extends the scene suggested by the original image. The I2V implementation in ComfyUI provides fine-grained control over motion parameters, allowing developers to specify the direction, speed, and nature of the animation. This is particularly valuable for e-commerce applications, where product images can be automatically transformed into engaging video presentations.

The image-to-video workflow in ComfyUI has been streamlined with dedicated nodes that handle the specific requirements of I2V processing, including motion conditioning and temporal consistency enforcement. For developers in India’s growing e-commerce and digital marketing sectors, this functionality opens up opportunities to create scalable video content generation pipelines that can transform thousands of product images into promotional videos automatically, significantly reducing content production costs and time.

Integration with Modern AI Infrastructure

The latest versions of the ComfyUI-Open-Sora integration have improved compatibility with modern machine learning frameworks and hardware acceleration technologies. Support for mixed-precision training and inference allows the models to run more efficiently on GPUs with varying capabilities, making the technology accessible to a broader range of users. The integration also now supports model quantization techniques that reduce memory requirements without significantly impacting output quality, enabling developers with mid-range hardware to experiment with these advanced capabilities.

Benefits of Setting Up Sora Model in ComfyUI

Creative and Commercial Advantages

Implementing the Sora model in ComfyUI provides numerous benefits for both individual creators and commercial enterprises:

- Cost-Effective Video Production: Generate professional-quality video content without expensive production equipment, studios, or large creative teams. This is particularly valuable for startups and small businesses in emerging markets where production budgets may be limited.

- Rapid Prototyping: Create video concepts and storyboards in minutes rather than days, enabling faster iteration on creative ideas and marketing campaigns. Advertising agencies in cities like Delhi and Mumbai can dramatically accelerate their creative development cycles.

- Customization and Control: The node-based interface of ComfyUI provides granular control over every aspect of the video generation process, allowing users to fine-tune parameters and create exactly the content they envision.

- Scalability: Once the workflow is established, generating multiple video variations becomes straightforward, enabling content creators to produce large volumes of customized content for different audiences or platforms.

- Learning and Experimentation: For educational institutions and research organizations in India and globally, the open-source nature of this setup provides an excellent platform for studying AI video generation technologies without prohibitive licensing costs.

Technical and Workflow Benefits

Beyond creative advantages, the technical architecture of this setup offers substantial benefits:

- Modular Architecture: ComfyUI’s node-based system allows developers to build complex processing pipelines by connecting reusable components, making it easier to maintain and upgrade workflows as new capabilities become available.

- Version Control and Sharing: Workflows can be saved as JSON files, making it simple to version control your configurations, share them with team members, or distribute them to the community.

- Hardware Flexibility: The system can be configured to work with various hardware setups, from high-end workstations with multiple GPUs to cloud-based instances, providing flexibility for different operational requirements and budgets.

- Integration Capabilities: ComfyUI can be integrated into larger production pipelines, allowing automated video generation as part of content management systems or marketing automation platforms.

- Community Support: The active open-source community around both ComfyUI and Open-Sora means continuous improvements, shared workflows, and readily available troubleshooting resources.

How to Implement Sora Model Setup in ComfyUI: Step-by-Step Guide

Prerequisites and System Requirements

Before beginning the Sora model setup in ComfyUI, ensure your system meets the following requirements:

Hardware Requirements:

- GPU: NVIDIA GPU with at least 12GB VRAM (16GB or more recommended for higher quality outputs)

- RAM: Minimum 32GB system RAM (64GB recommended for smoother operation)

- Storage: At least 50GB free space for models and generated content

- CPU: Modern multi-core processor (Intel i7/i9 or AMD Ryzen 7/9 or better)

Software Prerequisites:

- Python 3.10 or 3.11 (newer Python versions may not yet be supported by every custom node and its dependencies; check each repository’s README)

- Git for cloning repositories

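- An NVIDIA driver recent enough for the CUDA version of your PyTorch build. PyTorch’s CUDA wheels bundle the CUDA runtime, so a separate CUDA toolkit is usually needed only if a dependency compiles CUDA extensions.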

- Windows 10/11, Linux (Ubuntu 20.04+), or macOS (with limitations on GPU acceleration)

Step 1: Installing ComfyUI Base System

The first step in setting up the Sora model in ComfyUI is installing the base ComfyUI system. Open your terminal or command prompt and execute the following commands:

```bash
# Clone the ComfyUI repository
git clone https://github.com/comfyanonymous/ComfyUI.git

# Navigate to the ComfyUI directory
cd ComfyUI

# Create a virtual environment (recommended)
python -m venv venv

# Activate the virtual environment
# On Windows:
venv\Scripts\activate
# On Linux/Mac:
source venv/bin/activate

# Install PyTorch with CUDA support first (replace cu118 with the tag for your CUDA version),
# so that requirements.txt does not pull in a PyTorch build without matching GPU support
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# Install the remaining ComfyUI dependencies
pip install -r requirements.txt
```
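Before launching ComfyUI, it is worth confirming that the installed PyTorch build can see your GPU. A CPU-only build is a common cause of problems later on. The short Python sketch below uses only standard PyTorch attributes: `torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.get_device_name()`. The helper name `describe_torch_setup` is our own. When PyTorch is missing, it reports `installed: False` instead of crashing. Run it with the virtual environment active.

```python
import importlib.util


def describe_torch_setup() -> dict:
    """Summarize the PyTorch install so CUDA problems show up before ComfyUI starts."""
    # Look for the package without importing it, so a missing install is reported cleanly.
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch

    info = {
        "installed": True,
        "torch_version": torch.__version__,
        # None for CPU-only builds; otherwise the CUDA version the wheel was built against.
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_torch_setup().items():
        print(f"{key}: {value}")
```

If `cuda_build` is `None` or `cuda_available` is `False`, the installed wheel most likely lacks GPU support or the driver is too old for it. Reinstalling PyTorch from the CUDA index URL is the usual fix.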

After completing these installation steps, verify that ComfyUI launches correctly by running:

```bash
python main.py
```

This should start a local web server, typically accessible at http://127.0.0.1:8188. Open this URL in your web browser to confirm that the ComfyUI interface loads properly. For developers in regions with slower internet connections, be prepared for the initial setup to take some time as dependencies are downloaded and installed.
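When you script the setup, for example on a cloud instance, you may prefer to check the server programmatically instead of in a browser. The sketch below polls the server using only Python’s standard library. It requests `/system_stats`, an endpoint served by recent ComfyUI versions. The function name and the retry defaults are illustrative.

```python
import time
import urllib.request


def wait_for_comfyui(base_url: str = "http://127.0.0.1:8188",
                     attempts: int = 10, delay: float = 2.0) -> bool:
    """Poll the server until it answers with HTTP 200; give up after `attempts` tries."""
    url = base_url.rstrip("/") + "/system_stats"
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, refused connections and timeouts all derive from OSError.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


if __name__ == "__main__":
    print("ComfyUI reachable:", wait_for_comfyui())
```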

Step 2: Installing ComfyUI-Open-Sora Custom Nodes

To enable Sora model functionality within ComfyUI, you need to install the custom nodes package. This is a critical step in the setup process. Navigate to the custom_nodes directory within your ComfyUI installation:

```bash
# From the ComfyUI root directory
cd custom_nodes

# Clone the Open-Sora ComfyUI integration repository
git clone https://github.com/kijai/ComfyUI-Open-Sora-I2V.git

# Navigate to the newly cloned directory
cd ComfyUI-Open-Sora-I2V

# Install the specific requirements for Open-Sora (with the virtual environment still active)
pip install -r requirements.txt
```

The Open-Sora integration includes both text-to-video and image-to-video capabilities. The installation process will download several Python packages related to video processing, diffusion models, and transformer architectures. This step is particularly important as it installs the specific dependencies required for the Sora model to function correctly within the ComfyUI ecosystem.

Video tutorials on YouTube walk through the complete setup if you prefer a visual guide. RunComfy’s documentation also provides useful context about the available nodes and their configuration options.

Step 3: Downloading Required Models

Open-Sora requires several large files to function: the main model, a VAE, and a text encoder. These files contain the trained weights that enable video generation. Create the necessary directory structure and download the models:

```bash
# Navigate back to the ComfyUI root directory
cd ../..

# Create the model directories if they don't exist (Linux/macOS syntax)
mkdir -p models/opensora models/vae models/text_encoder

# The models can be downloaded from Hugging Face
# Open-Sora v1.2 model (approximately 6-10GB depending on version)
# Use a download tool or your browser to download from:
# https://huggingface.co/hpcai-tech/Open-Sora

# Place downloaded models in the appropriate directories:
# - Main model files go in models/opensora/
# - VAE files go in models/vae/
# - Text encoder files go in models/text_encoder/
# Check the custom node's README for the exact folder names it expects
```

Model download times will vary significantly based on your internet connection speed. For developers in India and other regions where bandwidth might be limited, consider using a download manager that supports pause and resume functionality. The initial model download is a one-time process, and subsequent uses of the system will not require re-downloading unless you want to upgrade to newer model versions.
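You can also script the download. The `huggingface_hub` library’s `snapshot_download` function fetches a repository into a local folder, and recent versions of the library resume interrupted downloads automatically. The sketch below assumes the `models/opensora`, `models/vae` and `models/text_encoder` layout used above; check the custom node’s README for the folders it actually reads. It downloads the whole `hpcai-tech/Open-Sora` repository into `models/opensora`. Pass `allow_patterns` to restrict the download to the checkpoints you need. The VAE and text encoder may be distributed in separate repositories, which you would fetch the same way. All helper names here are hypothetical.

```python
from pathlib import Path

# Folder layout assumed from this guide; adjust to what your custom node expects.
MODEL_SUBDIRS = ("opensora", "vae", "text_encoder")


def ensure_model_dirs(comfyui_root: str) -> list:
    """Create the model folders (if needed) and return their paths."""
    paths = [Path(comfyui_root) / "models" / name for name in MODEL_SUBDIRS]
    for path in paths:
        path.mkdir(parents=True, exist_ok=True)
    return paths


def empty_model_dirs(comfyui_root: str) -> list:
    """Return the names of model folders that are missing or contain no files yet."""
    missing = []
    for name in MODEL_SUBDIRS:
        folder = Path(comfyui_root) / "models" / name
        has_files = folder.is_dir() and any(p.is_file() for p in folder.rglob("*"))
        if not has_files:
            missing.append(name)
    return missing


def download_open_sora(comfyui_root: str, allow_patterns=None) -> str:
    """Download the hpcai-tech/Open-Sora repository into models/opensora."""
    # Imported lazily so the folder checks work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id="hpcai-tech/Open-Sora",
        local_dir=str(Path(comfyui_root) / "models" / "opensora"),
        allow_patterns=allow_patterns,  # e.g. ["*.pth"] to skip other files
    )


if __name__ == "__main__":
    ensure_model_dirs(".")
    print("Folders still empty:", empty_model_dirs("."))
```

Run it from the ComfyUI root. Call `download_open_sora(".")` explicitly when you are ready for the multi-gigabyte download.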

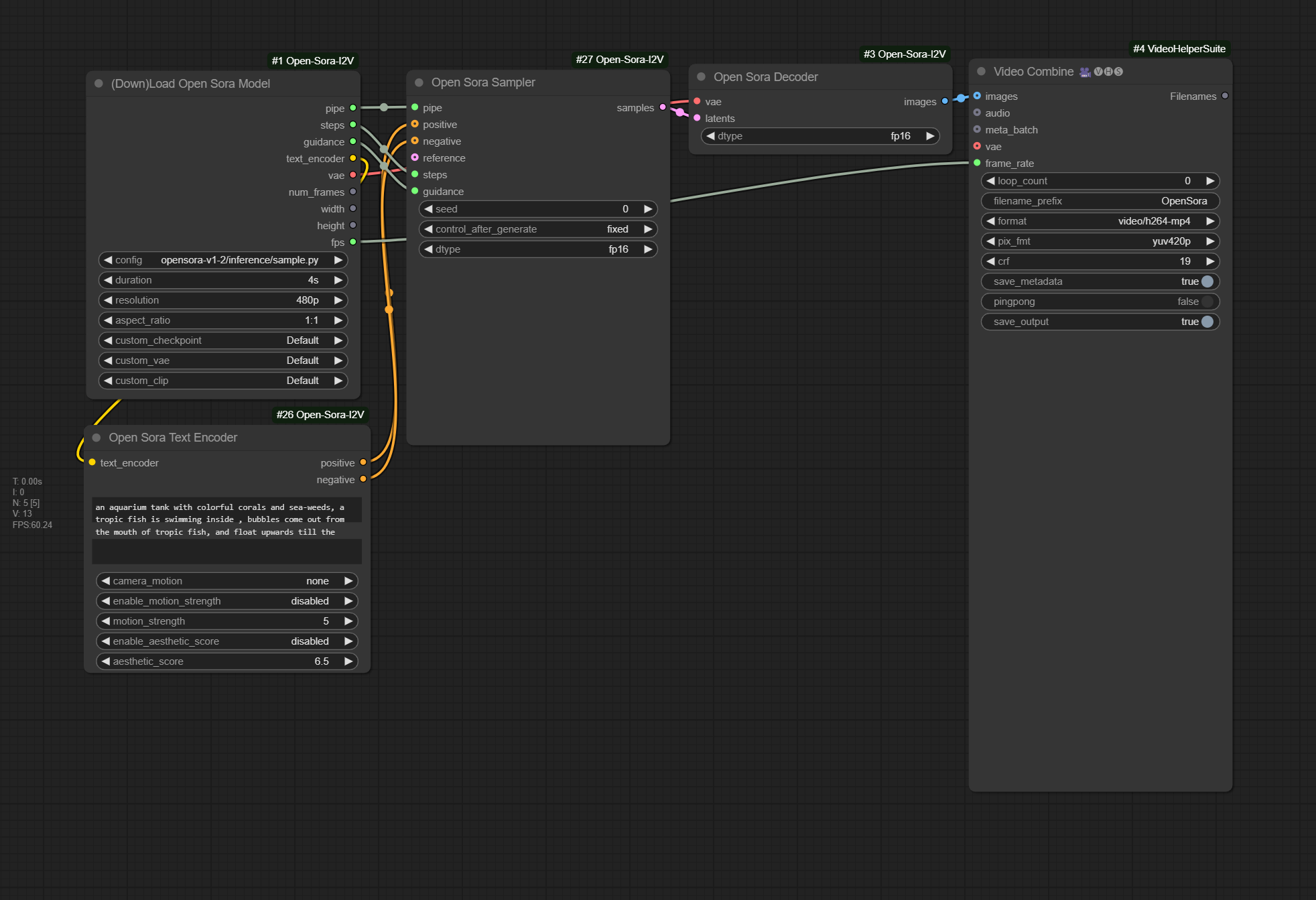

Step 4: Configuring the Text-to-Video Workflow

With the models in place, you can now create your first text-to-video workflow. Restart ComfyUI to ensure all new nodes are loaded:

```bash
# Stop the currently running ComfyUI instance (Ctrl+C in the terminal), then restart it
python main.py
```

Once ComfyUI is running, open the interface in your browser and follow these steps to build a text-to-video workflow:

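- Add the Text Prompt Node: Double-click the canvas to open the node search, or right-click and browse the Add Node menu, then look for the “Open-Sora Text Prompt” node. Exact node names vary between custom-node packages, so searching for “Sora” is a good starting point. This node allows you to enter the description of the video you want to generate.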

- Add the Model Loader Node: Search for “Open-Sora Model Loader” and add it to your workflow. Configure this node to point to the model files you downloaded in the previous step.

- Add the Sampler Node: The “Open-Sora Sampler” node controls the generation process parameters such as number of steps, CFG scale, and sampling method.

- Add the VAE Decoder: This node converts the latent space representation into actual video frames.

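- Add the Video Output Node: Finally, add a video-saving node such as “Save Video” to export your generated content. Which save nodes are available depends on your ComfyUI version and installed node packs.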

- Connect the Nodes: Draw connections between the nodes by clicking and dragging from output sockets to input sockets, creating a data flow from prompt through model processing to final output.
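Node names differ between custom-node packages, so it can be quicker to ask the running server which nodes it registered. ComfyUI’s `/object_info` endpoint returns a JSON object keyed by node class name. Each entry includes details such as `display_name` and `category`. The sketch below fetches that object and filters it by keyword. The keyword `sora` and the helper names are assumptions. The filter only looks at the class name, the display name and the category.

```python
import json
import urllib.request


def fetch_object_info(base_url: str = "http://127.0.0.1:8188") -> dict:
    """Download the node registry from a running ComfyUI server."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/object_info", timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def find_nodes(object_info: dict, keyword: str) -> list:
    """Return node class names whose name, display name, or category mention keyword."""
    keyword = keyword.lower()
    matches = []
    for class_name, info in object_info.items():
        haystack = " ".join([
            class_name,
            str(info.get("display_name", "")),
            str(info.get("category", "")),
        ]).lower()
        if keyword in haystack:
            matches.append(class_name)
    return sorted(matches)


if __name__ == "__main__":
    for name in find_nodes(fetch_object_info(), "sora"):
        print(name)
```

An empty result after the restart usually means the custom node failed to import. The terminal output from `python main.py` is the first place to look.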

Here’s a reference image showing a typical text-to-video workflow configuration:

This visual workflow representation makes it easy to understand the data flow and modify parameters. For developers building commercial applications, this workflow can be saved and automated, enabling batch processing of multiple video generation requests.

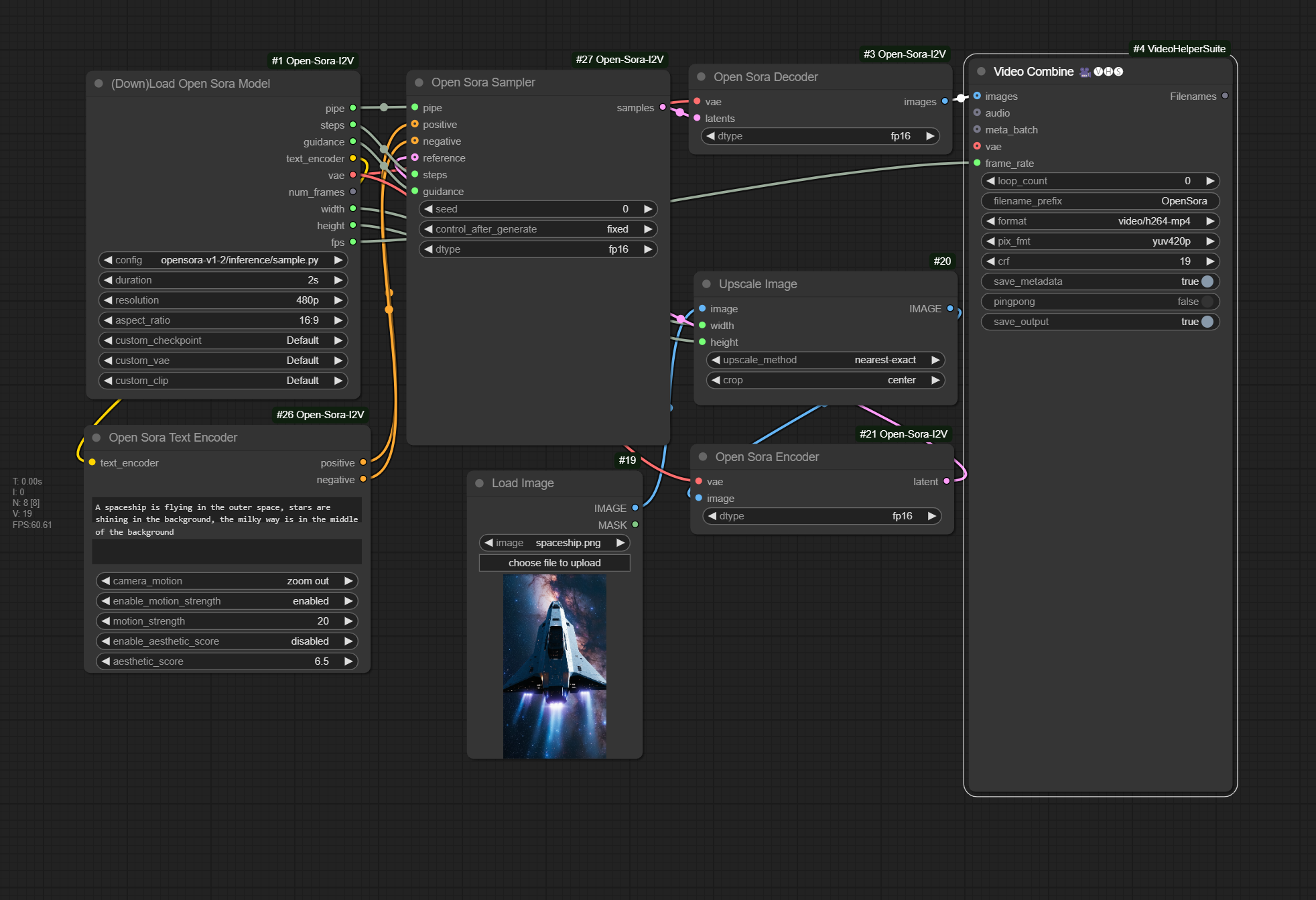
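To automate a saved workflow, submit jobs to ComfyUI over HTTP. First export the workflow in API format, using “Save (API Format)” or “Export (API)” depending on your frontend version. Then POST it to the server’s `/prompt` endpoint as `{"prompt": <workflow>, "client_id": <id>}`. The response includes a `prompt_id` that identifies the job. This is a minimal standard-library sketch, and the file name `text_to_video_api.json` is a placeholder.

```python
import json
import urllib.request
import uuid
from typing import Optional


def build_prompt_request(workflow: dict, base_url: str = "http://127.0.0.1:8188",
                         client_id: Optional[str] = None) -> urllib.request.Request:
    """Wrap an API-format workflow in the JSON body that POST /prompt expects."""
    body = {"prompt": workflow, "client_id": client_id or str(uuid.uuid4())}
    return urllib.request.Request(
        base_url.rstrip("/") + "/prompt",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: dict, base_url: str = "http://127.0.0.1:8188") -> str:
    """Submit the workflow to a running server and return its prompt_id."""
    request = build_prompt_request(workflow, base_url)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))["prompt_id"]


if __name__ == "__main__":
    # Placeholder file: a workflow exported in API format from the ComfyUI menu.
    with open("text_to_video_api.json", encoding="utf-8") as f:
        print("Queued prompt:", queue_workflow(json.load(f)))
```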

Step 5: Setting Up Image-to-Video Workflow

The image-to-video capability is one of the most powerful features of the Sora model implementation. To configure this workflow:

- Add an Image Loader Node: This node allows you to load the source image that will be animated.

- Add the I2V Conditioning Node: The “Open-Sora I2V Conditioning” node processes your input image and prepares it for video generation.

- Add Motion Parameters Node: Configure parameters that control how the image should be animated, including camera movement, zoom, and pan directions.

- Connect to Model and Sampler: Link these nodes to the Open-Sora model loader and sampler, similar to the text-to-video workflow.

- Configure Output Settings: Set your desired output resolution, frame rate, and video duration.

Here’s the image-to-video workflow configuration:

The image-to-video workflow is particularly useful for e-commerce applications, where product photographs can be automatically transformed into dynamic video presentations. Businesses in India’s growing online retail sector can leverage this capability to create engaging product videos at scale without manual animation work.
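Batch I2V processing can be scripted on top of an API-format workflow. Copy the workflow once per product image, and swap each image filename into the image loader node’s inputs before queueing that copy. The sketch assumes ComfyUI’s core `LoadImage` node, whose `image` input names a file placed in `ComfyUI/input/`. The node id `"10"` used in the example is a placeholder; read the real id from your own exported workflow. The helper names are hypothetical.

```python
import copy
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def workflows_for_images(workflow: dict, loader_node_id: str, image_names: list) -> list:
    """Return one independent copy of the workflow per image filename."""
    if loader_node_id not in workflow:
        raise KeyError(f"node {loader_node_id!r} not found in workflow")
    variants = []
    for name in image_names:
        variant = copy.deepcopy(workflow)  # never mutate the shared template
        variant[loader_node_id]["inputs"]["image"] = name
        variants.append(variant)
    return variants


def list_product_images(folder: str) -> list:
    """List image filenames in a folder (e.g. ComfyUI/input), sorted by name."""
    return sorted(p.name for p in Path(folder).iterdir()
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


if __name__ == "__main__":
    template = {"10": {"class_type": "LoadImage", "inputs": {"image": "placeholder.png"}}}
    for wf in workflows_for_images(template, "10", list_product_images("input")):
        print(wf["10"]["inputs"]["image"])
```

Each returned copy can then be submitted to the server’s `/prompt` endpoint, one job per product image.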

Step 6: Testing and Generation

Once your workflow is configured, it’s time to generate your first video. Follow these steps:

- Enter a Prompt: In the text prompt node, enter a detailed description of the video you want to create. For example: “A serene beach at sunset with gentle waves rolling onto the shore, palm trees swaying in the breeze, golden hour lighting.”

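- Configure Generation Parameters: Set the number of sampling steps (typically 20-50 for good quality), the CFG scale (usually between 7 and 15), and the seed value. A fixed seed gives reproducible results. To get a new seed on each run, set the seed widget’s “control after generate” option to “randomize”.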

- Set Video Specifications: Choose your output resolution (start with 512×512 or 720×480 for faster generation), frame rate (typically 24 or 30 fps), and duration (start with 2-3 seconds for testing).

- Queue the Workflow: Click the “Queue Prompt” button (labeled “Queue” or “Run” in newer versions of the ComfyUI frontend) to start the generation process. Monitor the progress in the ComfyUI interface.

- Review the Output: Once generation is complete, locate your video in the output directory (typically ComfyUI/output/) and review the results.
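If you queue jobs from a script instead of the browser, the `/history/<prompt_id>` endpoint tells you where the outputs were written. Its response maps the prompt id to an `outputs` object keyed by node id. Each output lists files with `filename`, `subfolder` and `type` fields. Video nodes do not all use the same list name: core image nodes use `images`, while some video nodes use other keys. The sketch therefore collects any list entry that has a `filename`, and it skips `temp` previews. The helper names are hypothetical, and the parsing assumes the response shape described here.

```python
import json
import urllib.request
from pathlib import PurePosixPath


def output_files(history: dict, prompt_id: str) -> list:
    """Collect output paths (relative to ComfyUI's output folder) for one prompt."""
    entry = history.get(prompt_id, {})
    paths = []
    for node_output in entry.get("outputs", {}).values():
        for items in node_output.values():
            if not isinstance(items, list):
                continue
            for item in items:
                # Keep saved files only; "temp" entries are previews that get cleaned up.
                if isinstance(item, dict) and "filename" in item \
                        and item.get("type", "output") == "output":
                    paths.append(str(PurePosixPath(item.get("subfolder", ""), item["filename"])))
    return paths


def fetch_history(prompt_id: str, base_url: str = "http://127.0.0.1:8188") -> dict:
    """Fetch the execution history for one prompt from a running server."""
    url = f"{base_url.rstrip('/')}/history/{prompt_id}"
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```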

Here’s an example of video output generated using this workflow:

The first generation may take several minutes depending on your hardware. For developers working with limited GPU resources, consider starting with shorter videos and lower resolutions while you familiarize yourself with the system, then gradually increase quality settings as you optimize your workflow.

Advanced Configuration Options

As you become more comfortable with the basic setup, you can explore advanced configuration options. Below is an example advanced sampler configuration. Parameter names and accepted values vary between node packages, so treat it as illustrative:

```json
{
  "steps": 50,
  "cfg_scale": 9.0,
  "sampler_name": "dpm_adaptive",
  "scheduler": "karras",
  "denoise": 1.0,
  "seed": 42,
  "motion_strength": 0.8,
  "temporal_consistency": 0.9
}
```

These advanced parameters allow fine-tuning of the generation process to achieve specific artistic effects or to optimize for particular types of content. Where your sampler node exposes them, the motion_strength parameter controls how dynamic the video appears, while temporal_consistency helps maintain coherent motion across frames. Experimenting with these settings will help you understand how to achieve optimal results for different types of video content.
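Experimenting is easier when variations are generated systematically rather than by hand. The sketch below uses `itertools.product` to expand a base configuration into every combination of the values you want to compare. The parameter names mirror the JSON example above and are not guaranteed to match any particular node’s inputs. The helper name `sweep` is hypothetical.

```python
import itertools


def sweep(base: dict, grid: dict) -> list:
    """Return one config per combination of grid values, each layered over base."""
    names = list(grid)
    configs = []
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(base)  # a shallow copy is enough for flat configs like these
        config.update(zip(names, values))
        configs.append(config)
    return configs


if __name__ == "__main__":
    base = {"steps": 50, "cfg_scale": 9.0, "seed": 42, "motion_strength": 0.8}
    # Keep the seed fixed so that differences come only from the swept parameters.
    for cfg in sweep(base, {"cfg_scale": [7.0, 9.0], "motion_strength": [0.5, 0.8]}):
        print(cfg)
```

Holding the seed constant across a sweep makes side-by-side comparisons meaningful. Otherwise, random variation between runs can hide the effect of the parameter you changed.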

Real-World Applications and Use Cases

Content Creation and Marketing

Setting up the Sora model in ComfyUI opens up numerous practical applications in content creation and digital marketing. Advertising agencies and marketing teams can rapidly prototype video concepts for client presentations without investing in full production resources. For instance, a marketing team in Mumbai can generate multiple video variations of a product advertisement in different visual styles within hours, allowing clients to choose the direction before committing to full production.

Social media content creators can use this technology to generate background videos, B-roll footage, or supplementary visual content that enhances their primary message. Educational content creators can visualize complex concepts through custom animated sequences that would be prohibitively expensive to produce through traditional animation methods. The ability to generate specific, custom video content on demand fundamentally changes the economics of video production for small and medium-sized businesses across India and globally.

E-Commerce and Product Visualization

India’s booming e-commerce sector presents a particularly compelling use case for Sora model implementation in ComfyUI. Online retailers can transform static product images into dynamic video presentations that showcase products from multiple angles, demonstrate functionality, or show products in context. This is especially valuable for fashion retailers, furniture stores, and electronics vendors where seeing products in motion significantly enhances the shopping experience.

The image-to-video workflow enables automated generation of product videos at scale. A single workflow configuration can process thousands of product images overnight, creating a comprehensive video library without manual intervention. For startup e-commerce platforms with limited budgets, this technology provides enterprise-level content capabilities without enterprise-level costs. Companies in Bangalore’s vibrant startup ecosystem have already begun exploring these capabilities to differentiate their platforms in competitive markets.

Education and Training

Educational institutions and corporate training departments can leverage this technology to create custom instructional videos that illustrate specific concepts, procedures, or scenarios. Rather than relying on generic stock footage, instructors can generate precisely targeted visual content that aligns with their curriculum. Medical schools can create videos demonstrating anatomical processes, engineering programs can visualize mechanical systems in motion, and history departments can recreate historical scenes for immersive learning experiences.

For educational technology companies developing online learning platforms, the ability to generate custom video content programmatically enables personalized learning experiences. Different learners could receive slightly different video explanations tailored to their learning style or previous knowledge, all generated automatically from the same underlying content models. This level of personalization was previously impossible at scale but becomes feasible with AI video generation technology.

Entertainment and Creative Industries

The entertainment industry, including independent filmmakers, animators, and visual effects artists, can use this technology for pre-visualization, storyboarding, and concept development. Before committing significant resources to full production, creators can generate rough video approximations of scenes to evaluate composition, pacing, and visual storytelling. This is particularly valuable for independent creators and small studios that may not have access to expensive pre-visualization tools.

Game developers can generate cinematics, cutscenes, or promotional material using text descriptions of game environments and characters. While the technology may not yet match the quality required for final game assets, it excels at rapid prototyping and concept exploration. Studios in the growing animation industries of Chennai and Hyderabad can use these tools to speed up early production phases and explore more creative variations before settling on final approaches.

Research and Development

Academic researchers studying computer vision, AI, and creative applications of machine learning can use this setup as a platform for experimentation and development. The open-source nature of both ComfyUI and Open-Sora makes it an excellent foundation for research projects exploring video generation, temporal consistency, motion understanding, and multimodal AI systems. Universities across India have begun incorporating these technologies into computer science and digital arts curricula, preparing students for careers in AI-driven creative industries.

Corporate research labs developing next-generation content creation tools can build upon this foundation, extending the capabilities or integrating with proprietary systems. The modular architecture of ComfyUI makes it straightforward to add custom nodes that implement novel algorithms or connect to external services, enabling rapid experimentation with new approaches to AI video generation.

Challenges and Considerations

Hardware and Resource Limitations

One of the primary challenges in setting up the Sora model in ComfyUI is meeting its significant hardware requirements. High-quality video generation demands substantial GPU memory and processing power, which can be prohibitively expensive for individual developers or small organizations. A GPU with 12GB VRAM represents a minimum investment of several hundred dollars, and larger models or higher quality outputs may require even more powerful hardware.

For developers in emerging markets where access to high-end computing hardware may be limited or expensive, this presents a real barrier to entry. Cloud-based solutions offer an alternative, but ongoing rental costs for GPU instances can quickly add up, especially during the learning and experimentation phase when many test generations are needed. Organizations need to carefully evaluate whether local hardware investment or cloud services provide better long-term economics for their specific use case.

Memory management becomes critical when working with video generation models. Image generation processes a single frame, but video generation must keep many frames consistent with each other, which multiplies memory requirements. Users often need to experiment with batch sizes, resolution settings, and video lengths to find the optimal balance between quality and resource consumption for their specific hardware configuration.
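Counting the pixels a clip contains gives a rough sense of how quickly video settings multiply the workload. The number of frames is fps × duration, and each frame carries width × height pixels. Pixel count is only a crude proxy. Actual memory use also depends on the model’s latent compression and attention layout, and attention cost can grow faster than linearly with clip size. The helper below compares a single 512×512 image, a 512×512 3-second test clip and a 1280×720 10-second clip, all at 24 fps. The function name is our own.

```python
def clip_pixels(width: int, height: int, fps: int, seconds: float) -> int:
    """Total pixels across all frames: a crude proxy for generation workload."""
    frames = round(fps * seconds)
    return width * height * frames


if __name__ == "__main__":
    single_image = 512 * 512
    test_clip = clip_pixels(512, 512, 24, 3)     # 72 frames
    long_clip = clip_pixels(1280, 720, 24, 10)   # 240 frames
    print(f"test clip vs single image: {test_clip / single_image:.0f}x")
    print(f"long clip vs test clip: {long_clip / test_clip:.2f}x")
```

The 3-second test clip already holds 72 times the pixels of a single 512×512 image. The 10-second 720p clip holds about 11.7 times more than the test clip.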

Generation Quality and Consistency

While AI video generation has made remarkable progress, current implementations including Open-Sora still face challenges with consistency and quality. Generated videos may exhibit artifacts such as flickering, morphing objects, inconsistent lighting, or physics violations that limit their usefulness for professional applications. Temporal consistency—maintaining coherent motion and object identity across frames—remains a challenging problem that even state-of-the-art models haven’t completely solved.

Text prompt interpretation can be unpredictable, with the model sometimes misunderstanding or ignoring parts of complex prompts. Users often need to iterate multiple times with slightly different prompts to achieve desired results, which can be time-consuming and frustrating. Learning to craft effective prompts that reliably produce good results requires experience and experimentation, representing a skill development curve that organizations must account for when planning implementations.

The quality gap between AI-generated videos and professionally produced content remains significant for many applications. While the technology excels at certain types of content—abstract visuals, simple animations, concept demonstrations—it may struggle with realistic human faces, complex object interactions, or scenarios requiring precise physical accuracy. Organizations need realistic expectations about what the technology can and cannot currently deliver.

Ethical and Legal Considerations

The ability to generate realistic video content raises important ethical and legal questions. Deepfake concerns are paramount—the technology could potentially be misused to create misleading or harmful content. Organizations implementing these tools need clear policies about acceptable use cases and should implement safeguards against misuse. For companies in India’s growing AI industry, establishing ethical guidelines early helps build trust and positions them as responsible technology providers.

Copyright and intellectual property questions surround both the training data used to develop these models and the ownership of generated content. While Open-Sora is open-source, questions about the copyright status of training data and whether generated videos infringe on existing copyrights remain legally uncertain. Businesses using this technology for commercial purposes should consult with legal counsel to understand potential risks and ensure compliance with applicable laws.

Content authenticity and disclosure present another ethical dimension. As AI-generated videos become more realistic, determining what content is real versus synthetic becomes increasingly difficult. Many industry organizations and platforms are developing standards for labeling AI-generated content, and organizations using this technology should proactively adopt transparency practices. For media companies and content platforms in India and globally, clearly marking AI-generated content helps maintain trust with audiences and complies with emerging regulations.

Technical Maintenance and Updates

The rapidly evolving nature of AI technology means that implementations require ongoing maintenance and updates. New versions of models, frameworks, and dependencies are released frequently, and keeping systems current while maintaining stability can be challenging. Breaking changes in dependencies can render workflows non-functional, requiring developer time to diagnose and fix issues. Organizations need to budget for ongoing technical maintenance rather than treating the initial setup as a one-time investment.

Compatibility issues between different components of the stack—ComfyUI, custom nodes, PyTorch versions, CUDA drivers—can create frustrating troubleshooting scenarios. What works perfectly on one system might fail on another due to subtle differences in configuration. Documentation for rapidly evolving open-source projects can lag behind current versions, leaving developers to solve problems through community forums and experimentation. For enterprise deployments, maintaining stable, reproducible environments becomes critical.

Best Practices for Developers

Optimization and Performance Tuning

To get the best results from your Sora setup in ComfyUI, follow these optimization practices:

Start with Lower Settings: Begin with lower resolution outputs (512×512) and shorter durations (2-3 seconds) while developing and testing workflows. This allows faster iteration and workflow validation before committing to resource-intensive high-quality generations. Once workflows are proven, gradually increase quality settings to find the optimal balance for your hardware.

Use Appropriate Sampling Steps: More sampling steps generally produce better quality but with diminishing returns. For most use cases, 20-30 steps provide good results, while 50+ steps offer marginal improvements at significantly higher computational cost. Experiment to find the minimum steps that provide acceptable quality for your specific application.

# Example configuration for balanced performance
{
  "resolution": "512x512",
  "duration_seconds": 3,
  "sampling_steps": 25,
  "cfg_scale": 8.5,
  "fps": 24,
  "batch_size": 1
}
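The example configuration can be checked in code before it is wired into a workflow. The sketch below is a minimal Python helper that assumes the same keys as the JSON above; GenerationConfig and from_dict are hypothetical names, not part of ComfyUI or Open-Sora. It parses the "WIDTHxHEIGHT" string and derives the frame count (duration × fps), a number worth knowing because memory use and run time grow with both resolution and frame count.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationConfig:
    """Hypothetical typed view of the example configuration keys."""
    width: int
    height: int
    duration_seconds: float
    sampling_steps: int
    cfg_scale: float
    fps: int
    batch_size: int

    @classmethod
    def from_dict(cls, cfg: dict) -> "GenerationConfig":
        # "512x512" -> width 512, height 512
        width_str, height_str = cfg["resolution"].lower().split("x")
        return cls(
            width=int(width_str),
            height=int(height_str),
            duration_seconds=float(cfg["duration_seconds"]),
            sampling_steps=int(cfg["sampling_steps"]),
            cfg_scale=float(cfg["cfg_scale"]),
            fps=int(cfg["fps"]),
            batch_size=int(cfg["batch_size"]),
        )

    @property
    def num_frames(self) -> int:
        # Frames per clip = duration x frame rate.
        return round(self.duration_seconds * self.fps)


balanced = GenerationConfig.from_dict({
    "resolution": "512x512",
    "duration_seconds": 3,
    "sampling_steps": 25,
    "cfg_scale": 8.5,
    "fps": 24,
    "batch_size": 1,
})
print(balanced.num_frames)  # 72
```

Freezing the dataclass keeps a validated configuration from being mutated halfway through a batch of generations.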

Implement Caching Strategies: When generating multiple variations of similar content, leverage ComfyUI’s caching mechanisms to avoid recomputing unchanged parts of the workflow. This can significantly reduce generation time for batch operations. Store frequently used embeddings and intermediate results to speed up subsequent generations.
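Inside ComfyUI, re-queuing a workflow only re-executes the nodes whose inputs changed. Scripts that drive generation outside the graph can apply the same idea by hand. The sketch below memoizes an expensive prompt-encoding step, keyed by model id and prompt text; EmbeddingCache is a hypothetical name, and encode_prompt is a placeholder for whatever text encoder your pipeline actually calls.

```python
import hashlib
from typing import Callable, Dict, List


class EmbeddingCache:
    """In-memory cache for prompt embeddings, keyed by model id + prompt."""

    def __init__(self, encode_fn: Callable[[str], List[float]], model_id: str):
        self._encode_fn = encode_fn
        self._model_id = model_id
        self._store: Dict[str, List[float]] = {}
        self.misses = 0

    def _key(self, prompt: str) -> str:
        # Including the model id means switching encoders never returns stale vectors.
        raw = f"{self._model_id}\x00{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get(self, prompt: str) -> List[float]:
        key = self._key(prompt)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._encode_fn(prompt)
        return self._store[key]


def encode_prompt(prompt: str) -> List[float]:
    # Hypothetical placeholder: a real pipeline would run its text encoder here.
    return [float(len(prompt)), float(prompt.count(" "))]


cache = EmbeddingCache(encode_prompt, model_id="example-encoder")
for _ in range(3):
    # Three variations (e.g. different seeds) share one prompt,
    # so the encoder only runs once.
    embedding = cache.get("a tropical sunset over the sea")
print(cache.misses)  # 1
```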

Monitor Resource Usage: Use system monitoring tools to track GPU memory, CPU usage, and disk I/O during generation. This helps identify bottlenecks and informs hardware upgrade decisions. For cloud deployments, monitoring helps optimize instance selection and control costs.
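On NVIDIA hardware, nvidia-smi can report utilization and memory in machine-readable form via its --query-gpu and --format=csv,noheader,nounits options. The sketch below takes one snapshot and parses it; parse_gpu_stats and sample_gpu_stats are hypothetical helper names, and you would call sample_gpu_stats in a loop (or from a scheduler) to build a history during generation.

```python
import shutil
import subprocess
from typing import Dict, List

QUERY = "index,name,utilization.gpu,memory.used,memory.total"


def parse_gpu_stats(csv_text: str) -> List[Dict[str, object]]:
    """Parse nvidia-smi CSV output (noheader, nounits; memory in MiB)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, util, used, total = [field.strip() for field in line.split(",")]
        gpus.append({
            "index": int(index),
            "name": name,
            "utilization_pct": int(util),
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return gpus


def sample_gpu_stats() -> List[Dict[str, object]]:
    """Take one snapshot; returns an empty list when nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_stats(result.stdout)


for gpu in sample_gpu_stats():
    share = gpu["memory_used_mib"] / gpu["memory_total_mib"]
    print(f"GPU {gpu['index']}: {gpu['utilization_pct']}% busy, {share:.0%} VRAM used")
```

Logging these snapshots alongside each job's settings makes it easier to see which parameter pushed a run close to the VRAM limit.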

Workflow Organization and Version Control

Maintaining organized, version-controlled workflows is essential for professional implementations:

Save and Document Workflows: ComfyUI workflows are saved as JSON files. Create a systematic naming convention and documentation structure for your workflows. Rather than writing comments into prompt text (which is passed to the model), add Note nodes to the graph explaining the intended use case and expected results. This is particularly important when multiple team members work with the same system.

# Example workflow naming convention
project_name_workflow_type_version_date.json
example: product_video_i2v_v1_2_20251024.json
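A naming convention is easiest to enforce when a script both generates and checks names. The sketch below assumes the pattern in the example: a project name that may contain underscores, a lowercase workflow type without underscores (such as i2v), a two-part version written as v1_2, and a YYYYMMDD date. The helper names are hypothetical.

```python
import re
from datetime import date, datetime

# project_name_workflow_type_version_date.json, e.g. product_video_i2v_v1_2_20251024.json
NAME_PATTERN = re.compile(
    r"^(?P<project>.+)_(?P<workflow_type>[a-z0-9]+)"
    r"_v(?P<major>\d+)_(?P<minor>\d+)_(?P<date>\d{8})\.json$"
)


def build_workflow_name(project: str, workflow_type: str,
                        major: int, minor: int, day: date) -> str:
    return f"{project}_{workflow_type}_v{major}_{minor}_{day:%Y%m%d}.json"


def parse_workflow_name(filename: str) -> dict:
    match = NAME_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"does not follow the naming convention: {filename}")
    return {
        "project": match["project"],
        "workflow_type": match["workflow_type"],
        "version": (int(match["major"]), int(match["minor"])),
        "date": datetime.strptime(match["date"], "%Y%m%d").date(),
    }


name = build_workflow_name("product_video", "i2v", 1, 2, date(2025, 10, 24))
print(name)  # product_video_i2v_v1_2_20251024.json
print(parse_workflow_name(name))
```

Because the version and date sit at fixed positions at the end, the regex can recover a project name containing underscores without ambiguity.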

Use Version Control Systems: Store workflow JSON files, configuration scripts, and documentation in a Git repository. This enables tracking changes over time, reverting to previous versions if issues arise, and collaborating with team members. For organizations in India’s software development hubs, integrating AI workflow management into existing DevOps practices ensures consistency and reliability.
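Git diffs of workflow JSON are much easier to review when every file is written the same way. A minimal sketch, assuming you run workflows through a small script before committing (for example from a pre-commit hook): it re-serializes the JSON with sorted keys and fixed indentation so diffs show real changes rather than formatting noise. canonicalize_workflow and canonicalize_file are hypothetical helper names.

```python
import json
from pathlib import Path


def canonicalize_workflow(text: str) -> str:
    """Return a stable, diff-friendly rendering of a workflow JSON document."""
    data = json.loads(text)  # also fails fast on malformed files
    return json.dumps(data, indent=2, sort_keys=True, ensure_ascii=False) + "\n"


def canonicalize_file(path: Path) -> bool:
    """Rewrite the file in canonical form; return True if it changed."""
    original = path.read_text(encoding="utf-8")
    canonical = canonicalize_workflow(original)
    if canonical != original:
        path.write_text(canonical, encoding="utf-8")
        return True
    return False


# Two spellings of the same document normalize to identical text.
messy = '{"b": 1, "a": {"d": 2, "c": 3}}'
tidy = '{"a":{"c":3,"d":2},"b":1}'
print(canonicalize_workflow(messy) == canonicalize_workflow(tidy))  # True
```

Sorting keys only affects dictionaries; list order (such as a list of nodes) is preserved, so genuine reordering still shows up in the diff.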

Create Workflow Templates: Develop standardized workflow templates for common use cases—product videos, social media content, educational videos. These templates can be quickly customized for specific projects rather than building workflows from scratch each time. Share successful templates across your organization to establish best practices and accelerate adoption.
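One way to keep templates reusable is to store them in ComfyUI's API-format JSON (the export that maps each node id to a class_type and its inputs) and to apply per-project overrides by node id. The sketch below assumes that format; customize_template is a hypothetical helper, and CLIPTextEncode and KSampler are core ComfyUI node types used here purely for illustration. The node ids and values are made up, and an Open-Sora template will use its own node types.

```python
import copy
from typing import Any, Dict, Tuple

Workflow = Dict[str, Dict[str, Any]]


def customize_template(template: Workflow,
                       overrides: Dict[Tuple[str, str], Any]) -> Workflow:
    """Return a copy of an API-format workflow with selected inputs replaced.

    overrides maps (node_id, input_name) -> new value.
    """
    workflow = copy.deepcopy(template)  # keep the shared template untouched
    for (node_id, input_name), value in overrides.items():
        inputs = workflow[node_id]["inputs"]
        if input_name not in inputs:
            raise KeyError(f"node {node_id} has no input {input_name!r}")
        inputs[input_name] = value
    return workflow


# Illustrative fragment of a "product video" template; ids and values are made up.
product_template: Workflow = {
    "6": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "PRODUCT on a white studio table, slow orbit", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler",
          "inputs": {"seed": 0, "steps": 25, "cfg": 8.5}},
}

job = customize_template(product_template, {
    ("6", "text"): "a steel water bottle on a white studio table, slow orbit",
    ("3", "seed"): 12345,
})
```

Addressing nodes by id rather than by class type avoids accidentally overwriting, say, a negative prompt that uses the same node type as the positive one.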

Prompt Engineering Excellence

Crafting effective prompts is crucial for getting the results you want from your Sora setup in ComfyUI:

Be Specific and Descriptive: Detailed prompts generally produce better results than vague descriptions. Include information about lighting, camera movement, mood, style, and specific visual elements. For example, instead of “a sunset,” use “a tropical sunset with vibrant orange and pink clouds, gentle waves reflecting the golden light, palm trees silhouetted in the foreground, slow cinematic camera pan from left to right.”

Use Structured Prompt Templates: Develop consistent prompt structures that work well for your use cases:

[Subject] + [Action] + [Setting] + [Lighting] + [Style] + [Camera Movement]

Example:
"A luxury sports car + driving along a coastal highway +
Mediterranean coastline at golden hour + warm sunset lighting +
cinematic photorealistic style + smooth tracking shot following the vehicle"
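The structured template can be filled in programmatically so every prompt keeps the same component order. In the sketch below, PromptParts is a hypothetical helper; it treats the "+" in the template as notation and joins the parts with commas, which is an assumption. Check which separators work best with the text encoder in your workflow.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptParts:
    # Field order follows the template:
    # [Subject] + [Action] + [Setting] + [Lighting] + [Style] + [Camera Movement]
    subject: str
    action: str
    setting: str
    lighting: str
    style: str
    camera: str

    def render(self) -> str:
        # Join the non-empty parts, in template order, with commas.
        parts = [getattr(self, f.name).strip() for f in fields(self)]
        return ", ".join(p for p in parts if p)


car = PromptParts(
    subject="A luxury sports car",
    action="driving along a coastal highway",
    setting="Mediterranean coastline at golden hour",
    lighting="warm sunset lighting",
    style="cinematic photorealistic style",
    camera="smooth tracking shot following the vehicle",
)
print(car.render())
```

Keeping the parts as separate fields also makes it easy to vary one component (for example, only the camera movement) while holding the rest constant during iteration.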

Iterate and Refine: Treat prompt development as an iterative process. Generate multiple variations with slight prompt modifications to understand how different phrasings affect output. Keep a log of successful prompts and the parameters that worked well. This knowledge base becomes invaluable for future projects and helps new team members learn effective techniques quickly.
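A prompt log does not need special tooling; an append-only JSON Lines file is enough to start. The sketch below is one possible shape for such a log; the field names and the 1-5 rating scale are assumptions, and log_prompt and best_prompts are hypothetical helpers.

```python
import json
import time
from pathlib import Path
from typing import Any, Dict, List


def log_prompt(log_path: Path, prompt: str, params: Dict[str, Any],
               rating: int, notes: str = "") -> None:
    """Append one generation record as a single JSON line."""
    record = {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%S"),
        "prompt": prompt,
        "params": params,
        "rating": rating,  # e.g. a 1-5 score from whoever reviewed the clip
        "notes": notes,
    }
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, ensure_ascii=False) + "\n")


def best_prompts(log_path: Path, min_rating: int = 4) -> List[Dict[str, Any]]:
    """Return logged records at or above min_rating, best first."""
    if not log_path.exists():
        return []
    with log_path.open(encoding="utf-8") as fh:
        records = [json.loads(line) for line in fh if line.strip()]
    keep = [r for r in records if r["rating"] >= min_rating]
    return sorted(keep, key=lambda r: r["rating"], reverse=True)
```

One line per record keeps the file safe to append to from several scripts and easy to diff when it is stored in Git next to the workflows.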

Consider Cultural Context: When creating content for specific regions or audiences, include culturally relevant details in prompts. For content targeting Indian audiences, consider including appropriate architectural styles, clothing, festivals, or landscapes. This cultural specificity enhances relevance and engagement with target audiences.

Troubleshooting Common Issues

When encountering problems with your setup, follow these systematic troubleshooting approaches:

Out of Memory Errors: This is the most common issue. Solutions include reducing resolution, shortening video duration, lowering batch size, or enabling model offloading features that move parts of the model between GPU and system RAM. If these adjustments compromise output quality too much, consider a GPU with more VRAM.

# If encountering CUDA out of memory errors, try:
# 1. Reduce resolution from 1024x1024 to 512x512
# 2. Reduce video length from 5 seconds to 2-3 seconds
# 3. Lower sampling steps from 50 to 25-30 (this mainly cuts time, not peak memory)
# 4. Enable model offloading (for example, launch ComfyUI with --lowvram)
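For scripted batch jobs, the memory-related levers can be applied automatically: try the requested settings and fall back to lighter ones whenever an out-of-memory error occurs. This is a sketch under stated assumptions: generate is a hypothetical callable that runs one job, and with PyTorch you might pass torch.cuda.OutOfMemoryError (available in recent PyTorch versions) as oom_error. Sampling steps are left alone because they mostly affect run time rather than peak memory. In a real pipeline you may also need to release cached GPU memory between attempts.

```python
from typing import Callable, Dict, Iterator, List, Type

Settings = Dict[str, int]


def cheaper_variants(settings: Settings) -> Iterator[Settings]:
    """Yield progressively lighter settings: resolution, then duration, then batch size."""
    current = dict(settings)
    if max(current["width"], current["height"]) > 512:
        current["width"] //= 2  # halve both sides to keep the aspect ratio
        current["height"] //= 2
        yield dict(current)
    if current["duration_seconds"] > 3:
        current["duration_seconds"] = 3
        yield dict(current)
    if current["batch_size"] > 1:
        current["batch_size"] = 1
        yield dict(current)


def generate_with_fallback(generate: Callable[[Settings], str], settings: Settings,
                           oom_error: Type[BaseException]) -> str:
    """Run generate(), retrying with lighter settings whenever oom_error is raised."""
    attempts: List[Settings] = [dict(settings), *cheaper_variants(settings)]
    for attempt in attempts:
        try:
            return generate(attempt)
        except oom_error:
            print(f"out of memory with {attempt}; retrying with lighter settings")
    raise RuntimeError("still out of memory; consider launching ComfyUI with --lowvram")
```

Logging each fallback makes it visible which settings your hardware can actually sustain, which is useful input for the hardware decisions discussed above.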

Slow Generation Times: If generations take excessively long, verify CUDA is properly installed and ComfyUI is using GPU acceleration. Check that your PyTorch installation includes CUDA support. Monitor GPU utilization during generation—if it’s not near 100%, there may be a bottleneck elsewhere in the system. Ensure adequate cooling to prevent thermal throttling on high-performance hardware.
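A quick way to confirm that PyTorch can actually see the GPU is to ask it directly from the Python environment ComfyUI runs in. The sketch below uses standard torch.cuda calls (is_available, get_device_name, mem_get_info) and torch.version.cuda, which is None for CPU-only builds. cuda_diagnostics is a hypothetical helper name, and the script degrades gracefully if PyTorch is not installed at all.

```python
def cuda_diagnostics() -> dict:
    """Report whether PyTorch is installed with working CUDA support."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "built_with_cuda": torch.version.cuda,  # None for CPU-only builds
        "cuda_available": torch.cuda.is_available(),
    }
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
        free, total = torch.cuda.mem_get_info()  # bytes on the current device
        info["vram_free_gib"] = round(free / 2**30, 1)
        info["vram_total_gib"] = round(total / 2**30, 1)
    return info


report = cuda_diagnostics()
for key, value in report.items():
    print(f"{key}: {value}")
if report["torch_installed"] and not report["cuda_available"]:
    print("PyTorch cannot see a CUDA GPU, so ComfyUI will not be able to use it.")
```

If built_with_cuda prints None, the installed wheel is a CPU-only build, and reinstalling a CUDA-enabled PyTorch build is the usual fix.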

Poor Quality Output: Address quality issues by increasing sampling steps, adjusting the CFG scale (try values between 7 and 12), experimenting with different samplers, refining prompts to be more specific, or verifying that model files downloaded completely and are not corrupted. Sometimes re-downloading model files resolves otherwise mysterious quality problems.

Node Connection Errors: If nodes won’t connect or workflows fail to execute, ensure all custom nodes are updated to compatible versions. Check the ComfyUI console output for error messages that indicate version mismatches or missing dependencies. The active community on GitHub and Reddit’s r/comfyui often has solutions for specific node-related issues.
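Many "missing node" failures can be caught before a workflow is queued. A running ComfyUI server lists every node class it has loaded at its /object_info endpoint, so an API-format workflow can be compared against that list. The sketch assumes ComfyUI's default local address, http://127.0.0.1:8188; available_node_types and missing_node_types are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict, Set

COMFYUI_URL = "http://127.0.0.1:8188"  # default local address; adjust if you changed it


def available_node_types(base_url: str = COMFYUI_URL) -> Set[str]:
    """Ask a running ComfyUI server which node classes it has loaded."""
    with urllib.request.urlopen(f"{base_url}/object_info", timeout=30) as resp:
        return set(json.load(resp).keys())


def missing_node_types(workflow: Dict[str, Dict[str, Any]],
                       available: Set[str]) -> Set[str]:
    """Node classes used by an API-format workflow but not loaded on the server."""
    used = {node["class_type"] for node in workflow.values()}
    return used - available


# Usage against a live server (not executed here):
# with open("my_workflow_api.json", encoding="utf-8") as fh:
#     print(missing_node_types(json.load(fh), available_node_types()))
```

Any class name in the result usually points to a custom node pack that is not installed or failed to load; the ComfyUI console output at startup typically explains why.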

Security and Access Control

For production deployments, especially in commercial or enterprise environments, implement proper security measures:

Network Security: ComfyUI serves its web interface without any authentication. By default it listens only on localhost (127.0.0.1), but launching it with the --listen flag exposes it to the network. When deploying in production, put authentication in front of it, use HTTPS for encrypted communication, and restrict network access to authorized users only. Running ComfyUI behind a reverse proxy that handles authentication and TLS is a common way to add these layers.
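If you build your own gateway in front of ComfyUI rather than using an off-the-shelf reverse proxy, two small checks are worth getting right: confirming that the backend is bound only to a loopback address, and comparing access tokens in constant time. These helpers are hypothetical building blocks for such a gateway, not part of ComfyUI, and they assume a simple "Authorization: Bearer <token>" scheme.

```python
import hmac
import ipaddress
from typing import Optional


def is_loopback_only(listen_host: str) -> bool:
    """True if a bind address only accepts connections from the same machine."""
    if listen_host == "localhost":
        return True
    return ipaddress.ip_address(listen_host).is_loopback


def bearer_token_ok(authorization: Optional[str], expected_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header value in constant time."""
    if not authorization:
        return False
    scheme, _, token = authorization.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(token.encode(), expected_token.encode())
```

With the backend bound to loopback, the gateway becomes the only route in, so every request passes through the token check.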

Input Validation: If allowing user-generated prompts or inputs, implement content filtering to prevent generation of inappropriate or harmful content. Establish review processes for generated content before publication, especially for customer-facing applications. For platforms in India’s content creation ecosystem, implementing robust moderation aligns with local regulations and platform policies.

Resource Quotas: Implement per-user or per-request resource limits to prevent abuse and ensure fair resource allocation in multi-user environments. Track generation requests, monitor costs for cloud deployments, and establish usage policies that balance accessibility with resource constraints.
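A per-user limit can start very simply. The sketch below caps each user at a fixed number of generation requests per UTC day. DailyQuota is a hypothetical class, counting requests is only one possible policy (weighting by GPU-seconds tracks cost more closely), and old day entries are never evicted in this minimal version.

```python
import time
from typing import Callable, Dict, Tuple


class DailyQuota:
    """Limit each user to a fixed number of generation requests per UTC day."""

    def __init__(self, max_requests: int, clock: Callable[[], float] = time.time):
        self.max_requests = max_requests
        self._clock = clock  # injectable so the logic is easy to test
        self._counts: Dict[Tuple[str, str], int] = {}

    def _today(self) -> str:
        return time.strftime("%Y-%m-%d", time.gmtime(self._clock()))

    def try_acquire(self, user_id: str) -> bool:
        """Record one request and return True if the user was still under quota."""
        key = (user_id, self._today())
        used = self._counts.get(key, 0)
        if used >= self.max_requests:
            return False
        self._counts[key] = used + 1
        return True

    def remaining(self, user_id: str) -> int:
        return self.max_requests - self._counts.get((user_id, self._today()), 0)
```

A gateway would call try_acquire before forwarding a job to ComfyUI and reject the request when it returns False.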

Future Outlook and Emerging Developments

Technological Advancements on the Horizon

The field of AI video generation is advancing rapidly, and several developments will likely shape how Sora-style models are set up and used in ComfyUI in the coming months and years:

Longer and Higher Quality Videos: Current models typically generate short clips of a few seconds. Ongoing research focuses on extending generation capabilities to 30 seconds, one minute, and eventually longer-form content while maintaining quality and consistency. Improvements in transformer architectures and attention mechanisms promise better temporal coherence across extended sequences.

Better Physics and Motion Understanding: Next-generation models will exhibit improved understanding of real-world physics, resulting in more realistic object interactions, natural motion dynamics, and convincing lighting changes. This will expand the range of use cases where AI-generated video can substitute for traditional production methods.

Multimodal Integration: Future systems will likely integrate more seamlessly with other AI modalities—combining text, image, audio, and 3D information to generate comprehensive multimedia content. Imagine describing a scene with text, providing a reference image for style, and having the system generate video with synchronized audio—all in a single workflow.

Real-Time Generation: As models become more efficient and hardware continues advancing, real-time or near-real-time video generation may become feasible. This would enable interactive applications where users manipulate parameters and see results immediately, fundamentally changing creative workflows and enabling new types of interactive media.

Industry Adoption Trends

Several trends indicate how AI video generation technology will be adopted across industries:

Democratization of Video Production: As tools become more accessible and hardware costs decline, video creation capabilities that once required professional studios will become available to small businesses, educators, and individual creators. This democratization will be particularly impactful in emerging markets like India, where it can help overcome barriers related to production infrastructure and expertise.

Integration with Content Management Systems: Expect deeper integration of AI video generation into content management platforms, marketing automation tools, and social media management systems. This will enable automated video content creation as part of broader marketing workflows, with videos generated dynamically based on product data, user preferences, or current trends.

Personalization at Scale: The ability to generate customized video content for individual users or microsegments will transform marketing and education. Imagine e-learning platforms that generate slightly different explanatory videos for each student based on their learning history, or marketing campaigns that create thousands of variations optimized for different audience segments.

Hybrid Workflows: Rather than replacing traditional production entirely, AI video generation will increasingly complement conventional methods. Professionals might use AI for rapid prototyping, B-roll generation, or background elements while combining these with traditionally produced primary content. This hybrid approach leverages the strengths of both technologies.

Regulatory and Ethical Evolution

As AI video generation becomes more capable and widespread, regulatory frameworks will evolve:

Content Authentication Standards: Industry standards for authenticating and labeling AI-generated content will mature. Technologies like digital watermarking, content credentials, and blockchain-based provenance tracking may become standard features in video generation tools. Organizations implementing these systems now should prepare for eventual compliance requirements.

Regional Regulations: Different regions will develop varying approaches to regulating AI-generated content. The European Union’s AI Act, regulations emerging in India, and evolving policies in other jurisdictions will create a complex compliance landscape. Organizations operating internationally will need to navigate these varying requirements while maintaining consistent operational practices.

Industry Self-Regulation: Professional organizations and industry consortia are developing voluntary standards and best practices for responsible AI use. Participating in these initiatives helps organizations stay ahead of regulatory requirements while contributing to responsible development of the technology.

Research Directions

Academic and industry research continues pushing boundaries in several directions:

Controllability and Precision: Researchers are developing methods for more precise control over generated content, enabling specification of exact object positions, trajectories, and interactions. This will move AI video generation from a creative suggestion tool toward a precise implementation tool suitable for technical and professional applications.

Efficiency and Accessibility: Significant research focuses on making models more efficient through quantization, distillation, and architectural improvements. This work aims to bring high-quality video generation capabilities to more modest hardware, expanding accessibility particularly in regions with limited access to high-end computing infrastructure.

Understanding and Interpretability: Research into understanding how these models work internally will enable better control, debugging, and refinement. As models become more interpretable, users will gain better insight into why certain outputs are produced and how to reliably achieve desired results.

Frequently Asked Questions

What is Sora model and how does it work in ComfyUI?

Sora is an advanced AI video generation model developed by OpenAI that creates realistic videos from text descriptions or images. OpenAI’s Sora is proprietary, so ComfyUI setups typically rely on Open-Sora, an independent open-source project developed by HPC-AI Tech that targets similar text-to-video and image-to-video capabilities. In ComfyUI, Open-Sora is integrated through custom nodes that let users build video generation workflows in a visual, node-based interface. The model processes text prompts or input images through a diffusion transformer architecture trained on large video datasets. This training enables it to model motion dynamics, physics, lighting, and temporal relationships, allowing it to generate coherent video sequences. ComfyUI’s modular approach makes it easy to connect these video generation capabilities with other processing steps, enabling complex workflows for a range of creative applications. The integration supports both text-to-video and image-to-video generation, giving creators flexibility in how they approach video content. For developers, this combination provides an accessible entry point into AI video generation without requiring extensive machine learning expertise or custom code.

What are the minimum system requirements for running Sora model in ComfyUI?

Your system should meet certain minimum specifications, though higher-end hardware will provide better performance and quality. At minimum, you need an NVIDIA GPU with at least 12GB of VRAM; graphics cards like the RTX 3060 (12GB version), RTX 4060 Ti (16GB version), or better are suitable. System RAM should be at least 32GB, though 64GB provides more comfortable operation, especially when working with longer videos or higher resolutions. Allocate at least 50GB of free storage for model files, dependencies, and generated content; SSD storage is strongly recommended. The CPU should be a modern multi-core processor such as an Intel Core i7/i9 or AMD Ryzen 7/9. Your operating system can be Windows 10/11, Linux (Ubuntu 20.04 or newer recommended), or macOS, though GPU acceleration on Mac is limited. You’ll also need an up-to-date NVIDIA driver, a CUDA-enabled PyTorch build (these builds bundle their own CUDA runtime, so a separate CUDA toolkit is usually needed only for compiling custom extensions), Python 3.10 or 3.11, and a stable internet connection for downloading models and dependencies. For developers in India or other regions where high-end hardware is expensive, starting with these minimum specifications allows experimentation, with the option to upgrade as budgets and requirements grow. Cloud GPU instances from providers like AWS, Google Cloud, or local providers offer an alternative to investing in local hardware.
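The minimums above can be turned into a quick pre-flight check. In this sketch, check_requirements is a hypothetical helper encoding the guide's numbers (12GB VRAM, 32GB RAM, 50GB free disk, Python 3.10 or 3.11). Free disk space and the Python version are read live, while the VRAM and RAM figures are typed in by hand as example values, since reading them portably needs extra libraries.

```python
import shutil
import sys
from typing import List, Sequence

# Minimums stated in this guide (GB = 10**9 bytes for simplicity).
MIN_VRAM_GB = 12
MIN_RAM_GB = 32
MIN_FREE_DISK_GB = 50
SUPPORTED_PYTHON = {(3, 10), (3, 11)}


def check_requirements(vram_gb: float, ram_gb: float, free_disk_gb: float,
                       python_version: Sequence[int]) -> List[str]:
    """Return a list of unmet minimums; an empty list means everything passes."""
    problems = []
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM {vram_gb:g} GB < {MIN_VRAM_GB} GB")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM {ram_gb:g} GB < {MIN_RAM_GB} GB")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"free disk {free_disk_gb:.0f} GB < {MIN_FREE_DISK_GB} GB")
    major_minor = tuple(python_version[:2])
    if major_minor not in SUPPORTED_PYTHON:
        problems.append(f"Python {major_minor[0]}.{major_minor[1]} is not 3.10 or 3.11")
    return problems


# VRAM and RAM are example values entered by hand; disk and Python are read live.
free_disk = shutil.disk_usage(".").free / 1e9
for issue in check_requirements(vram_gb=12, ram_gb=16, free_disk_gb=free_disk,
                                python_version=sys.version_info):
    print("-", issue)
```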

How long does it take to generate a video using Sora model in ComfyUI?

Video generation time with the Sora model in ComfyUI varies significantly with hardware, video length, resolution, number of sampling steps, and prompt complexity. On a system with an NVIDIA RTX 4090, generating a 3-second video at 512×512 resolution with 25 sampling steps typically takes 2-5 minutes. Lower-end GPUs like the RTX 3060 might require 10-15 minutes for the same output. Higher resolutions or longer durations multiply these times: a 5-second video at 1024×1024 might take 15-30 minutes even on high-end hardware. Sampling steps have a roughly linear relationship with generation time, so doubling the steps approximately doubles the time. Image-to-video workflows are sometimes slightly faster than text-to-video since part of the content is already defined. For batch generation, you can queue multiple workflows, but each job still takes roughly as long as it would on its own. The first generation after loading the model takes longer while the system initializes; subsequent generations are faster. These timings matter for project planning and for setting user expectations. Cloud GPU instances with more powerful hardware can significantly reduce generation times, though at higher operational cost. Understanding these performance characteristics helps in making informed decisions about hardware investment and workflow optimization.
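For planning, it helps to measure one run on your own hardware and scale from there. The sketch below follows the rule of thumb above that time grows linearly with sampling steps, and additionally assumes linear growth with pixel count and frame count. That second assumption is optimistic: attention in transformer-based models grows faster than linearly with sequence length, so treat the result as a lower bound. estimate_minutes and the 4-minute baseline are hypothetical.

```python
from typing import Dict

Job = Dict[str, int]


def estimate_minutes(measured_minutes: float, measured: Job, target: Job) -> float:
    """Scale a measured run time to new settings, assuming time ~ pixels * frames * steps."""
    def work(job: Job) -> int:
        return job["width"] * job["height"] * job["frames"] * job["steps"]
    return measured_minutes * work(target) / work(measured)


# Hypothetical measurement: 3 s at 24 fps (72 frames), 512x512, 25 steps took 4 minutes.
baseline = {"width": 512, "height": 512, "frames": 72, "steps": 25}
print(estimate_minutes(4.0, baseline, dict(baseline, steps=50)))  # 8.0
# 5 s at 24 fps (120 frames) and 1024x1024: a rough lower bound in minutes.
print(estimate_minutes(4.0, baseline, dict(baseline, width=1024, height=1024, frames=120)))
```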

Can I use Sora model commercially, and what about copyright concerns?

The commercial use of Open-Sora in ComfyUI involves several important considerations regarding licensing and copyright. Open-Sora itself is released under a permissive open-source license that generally allows commercial use, though you should verify the specific license terms for the version you’re using, as these can change. However, commercial use involves more than the software license: you must also consider the legal status of your generated content. The copyright status of AI-generated content remains legally uncertain in many jurisdictions. In the United States, the Copyright Office has indicated that works generated entirely by AI without human creative involvement may not be copyrightable, though human-authored elements can receive protection. Different countries, including India, are developing their own policies on copyright for AI-generated content. Additionally, questions remain about the training data used to develop these models and whether generated content might inadvertently infringe existing copyrights. For commercial deployments, consult legal counsel familiar with intellectual property law in your jurisdiction. Many organizations adopt conservative policies such as having humans significantly modify or curate AI-generated content, using outputs as inspiration rather than final products, or obtaining indemnification from vendors. The ethical considerations are equally important: transparency about AI-generated content, avoiding deceptive applications, and respecting intellectual property rights build trust and sustainable business practices. Organizations using this setup for commercial purposes should establish clear policies on acceptable use cases, content review processes, and disclosure practices to mitigate legal and reputational risks.

What types of videos can Sora model generate best, and what are its limitations?

Open-Sora in ComfyUI excels at some types of video content and struggles with others, and understanding these strengths and weaknesses helps set realistic expectations. The model performs well with abstract or artistic content, natural landscapes and scenery, simple object animations, particle effects and fluid dynamics, atmospheric and environmental effects, and conceptual visualizations that don’t require physical precision. It can create compelling results for establishing shots, background elements, texture animations, and creative transitions. The technology works particularly well when the subject matter doesn’t require perfect realism or when slight imperfections suit an artistic aesthetic. However, current implementations face challenges with realistic human faces and expressions, complex object interactions requiring precise physics, text or signage that needs to be readable, very fast or complex motion, fine details and textures at high resolutions, and consistency over longer durations. Human faces remain particularly challenging: while the model can generate face-like features, they often exhibit uncanny-valley effects or inconsistencies that make them unsuitable for applications requiring realistic human representation. Similarly, hands and fingers are notoriously difficult for AI models to render correctly. Videos longer than a few seconds may show drift, where elements gradually morph or change identity. Knowing these capabilities helps identify appropriate use cases: the technology is ready for abstract content, concept visualization, and creative applications, but may not yet meet the requirements of photorealistic professional productions that demand precision. As the technology evolves, these limitations will likely diminish, but current users should carefully evaluate whether their specific needs align with present capabilities.

How can I improve the quality of generated videos in ComfyUI?

Improving video quality from your Sora model setup involves optimization across prompt engineering, parameter tuning, and workflow configuration. Start with prompt optimization: use detailed, specific descriptions that include lighting, camera movement, style, and mood. Structure prompts to convey your vision clearly, referencing specific art styles, cinematography techniques, or well-known visual aesthetics that the model is likely to have learned. Experiment with parameter tuning: increase sampling steps from the common default of 20 to 30-50 for smoother results, keeping in mind the diminishing returns beyond 50 steps. Adjust the CFG (classifier-free guidance) scale; values between 7 and 12 typically work well, with higher values following your prompt more closely but potentially with less natural variation. Try different samplers, as some work better for specific types of content. For workflow configuration, use the latest model versions, since newer releases often include quality improvements. Start with lower resolutions and shorter durations to iterate quickly on prompts and parameters, then scale up once you’ve achieved good results. Consider the image-to-video workflow with a carefully prepared starting frame for more control over the final output. Post-processing can significantly enhance results: use video upscaling, frame interpolation for smoother motion, color grading for a consistent mood, and judicious editing to select the best portions of generated clips. Hardware matters too: ensure adequate cooling to prevent thermal throttling, monitor VRAM usage to avoid performance degradation, and close unnecessary applications during generation. Systematic experimentation with these variables, with notes on what works, builds valuable knowledge over time.
Keep a library of successful prompts and parameter combinations for future reference, and engage with the ComfyUI community to learn from others’ discoveries and share your own findings.

Is it possible to run Sora model on a Mac or without NVIDIA GPU?

Running Open-Sora on non-NVIDIA hardware or Mac systems is technically possible but comes with significant limitations compared to NVIDIA GPU-based setups. For Mac users, particularly those with Apple Silicon (M1, M2, M3 chips), ComfyUI can run using Metal Performance Shaders (MPS) for GPU acceleration, and Open-Sora nodes may work with appropriate configuration. However, performance will be significantly slower than on equivalent NVIDIA hardware, and you may encounter compatibility issues with certain nodes or features. Some custom nodes in the ComfyUI ecosystem are developed primarily for CUDA and may not function correctly on Mac. Video generation times on Mac hardware can be 3-5 times longer than on comparable NVIDIA GPUs, making iteration and experimentation more tedious. For AMD GPU users, ROCm provides an alternative to CUDA, and PyTorch publishes ROCm builds, primarily for Linux. However, ROCm support is not as mature or widespread as CUDA support, and you may encounter driver issues, missing features, or performance limitations. CPU-only operation is theoretically possible but practically unusable for video generation: processing times would stretch to hours for short clips. The most viable alternatives for users without NVIDIA GPUs are cloud GPU services like Google Colab, AWS, RunPod, or Vast.ai, which rent access to NVIDIA hardware on demand. This approach lets you run the complete setup on capable hardware without local investment, though ongoing costs need consideration. Dual-booting Windows through Boot Camp is sometimes suggested, but Boot Camp is available only on Intel-based Macs, and neither Boot Camp nor virtualization tools like Parallels give a Mac access to CUDA, so this route does not close the gap with NVIDIA hardware.
For serious work with AI video generation, an NVIDIA GPU remains the most practical solution, but for experimentation or occasional use, these alternatives may suffice despite their limitations.

How does Sora model compare to other AI video generation tools?

Open-Sora in ComfyUI occupies a specific niche in the broader landscape of AI video generation tools, each with distinct advantages and trade-offs. Compared to commercial services like Runway Gen-2 or Pika Labs, Open-Sora offers the advantage of being fully open-source and locally runnable, giving users complete control and privacy without per-generation costs or usage restrictions. However, the closed commercial services often produce higher-quality results with better temporal consistency and more advanced features, reflecting larger training datasets and more computational resources. They also offer simpler interfaces that may be more accessible to non-technical users. Runway and Pika excel at user-friendliness and are good choices for creators who want immediate results without technical setup, but they come with subscription costs and usage limits. Stability AI’s Stable Video Diffusion (SVD), another openly available model, differs from Open-Sora in architecture and training focus: SVD is built primarily for generating motion from still images, so it behaves differently for text-to-video applications. Compared to OpenAI’s proprietary Sora model, which is available only through OpenAI’s own services, Open-Sora will likely show quality differences given OpenAI’s much larger computational resources and training data. However, Open-Sora’s open nature allows customization, local deployment, and integration into custom workflows that proprietary alternatives don’t support. The key advantage of a ComfyUI-based setup is control and flexibility: you can modify workflows, combine Open-Sora with other AI models, integrate it into custom applications, and use it without ongoing costs or external dependencies. The trade-off is higher technical complexity and more demanding setup requirements.
For production workflows where quality is paramount, a hybrid approach using both open and commercial tools often works best—using Open-Sora for prototyping and certain types of content while leveraging commercial services for high-stakes final productions. The choice depends on your specific requirements, technical capabilities, budget constraints, and privacy needs.

What troubleshooting steps should I take if ComfyUI crashes during video generation?

When ComfyUI crashes during video generation with the Sora model, systematic troubleshooting helps identify and resolve the issue. Start by examining the console output; ComfyUI prints detailed error messages that often pinpoint the problem. A common cause is running out of GPU memory, which appears as a CUDA out-of-memory exception. If you see one, reduce the video resolution, shorten the duration, or lower the batch size. Enable model offloading (for example, with ComfyUI’s --lowvram launch option) to move portions of the model between GPU and system RAM, trading speed for memory stability. Verify that your GPU drivers are current, since outdated drivers frequently cause crashes with AI applications; download the latest drivers from NVIDIA, AMD, or Intel depending on your hardware. Check your PyTorch installation: make sure you have a CUDA-enabled build whose CUDA version is supported by your installed driver. Reinstalling PyTorch with the correct CUDA build resolves many otherwise mysterious crashes. Examine custom node compatibility, as custom nodes occasionally conflict with each other or with core ComfyUI updates. Temporarily disable custom nodes one at a time to identify problematic ones, and update all custom nodes, particularly the Open-Sora nodes, to their latest versions. Monitor system temperature and power: GPU overheating or an insufficient power supply can cause crashes under heavy load, so ensure adequate cooling and a power supply that meets your GPU’s requirements. Check for corrupted model files; if models downloaded incompletely or became corrupted, re-download them and verify that file sizes match those listed at the source. Clear ComfyUI’s cache and temporary files, since a corrupted cache occasionally causes issues, by deleting the temp folder in your ComfyUI directory. If crashes occur at a specific point in generation, note which node is active when the crash happens; this shows where the problem lies in your workflow.
For a reliable setup, maintain a stable baseline configuration that works before adding complexity. Document working configurations so you can return to them when troubleshooting. The ComfyUI community on GitHub, Reddit, and Discord offers valuable support for issues others have already solved. When seeking help, include your system specifications, ComfyUI version, custom node versions, error messages, and steps to reproduce the issue.

Can I fine-tune or train the Sora model on my own data?

Fine-tuning Open-Sora on custom data is theoretically possible but represents an advanced undertaking with substantial technical and computational requirements. The base Open-Sora models are trained on massive video datasets using clusters of high-end GPUs over extended periods—replicating this training from scratch would be prohibitively expensive for most individuals and organizations. However, fine-tuning approaches that adapt pre-trained models to specific use cases are more accessible, though still challenging. Fine-tuning requires assembling a training dataset of videos representative of your target domain—for example, if you want to specialize in generating product videos for a specific industry, you’d collect hundreds or thousands of relevant video clips with appropriate text descriptions. The videos should be preprocessed to consistent formats, resolutions, and frame rates. You’ll need access to substantial computational resources—fine-tuning typically requires multiple high-end GPUs (A100 or H100 level) and can take days or weeks depending on dataset size and desired quality. Training code for Open-Sora is available in the project’s GitHub repository, but setting up the training environment requires significant machine learning engineering expertise. You’ll need to configure training parameters including learning rates, batch sizes, gradient accumulation steps, and various architectural hyperparameters. The process requires monitoring training metrics, handling potential instability, and iterating on data and parameters to achieve good results. For most practical applications, alternatives to full fine-tuning include prompt engineering to guide the model toward desired outputs, using image-to-video workflows with carefully crafted starting frames, or leveraging LoRA (Low-Rank Adaptation) techniques that require less data and computation than full fine-tuning. 
Some organizations in India’s AI services sector offer fine-tuning as a service: you provide the data and they handle the technical implementation. For developers working through the Sora model setup in ComfyUI, it helps to remember that the base models already encode broad knowledge of visual concepts, motion, and physics, so careful prompting and workflow design can often achieve the desired results without custom training. Reserve fine-tuning for cases where you have specific, well-defined requirements that existing models do not meet and the resources to invest in the process.
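
The arithmetic behind two of the options mentioned above shows why LoRA is cheaper and how gradient accumulation stretches limited GPU memory. LoRA keeps a pretrained weight matrix W of shape d × k frozen and learns a low-rank update ΔW = BA, where B is d × r and A is r × k. Because the rank r is small, the layer trains r(d + k) parameters instead of d·k. Gradient accumulation sums gradients over several small batches before each optimizer step, so each update sees per-GPU batch × accumulation steps × number of GPUs samples. This is a minimal sketch of those two formulas only. The 1152 × 1152 layer size, rank 16, and batch settings are hypothetical values chosen for illustration, not Open-Sora’s actual configuration.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update B @ A (B: d x r, A: r x k)."""
    return r * (d + k)


def effective_batch_size(per_gpu_batch: int, accumulation_steps: int, num_gpus: int) -> int:
    """Samples contributing to each optimizer step under gradient accumulation."""
    return per_gpu_batch * accumulation_steps * num_gpus


if __name__ == "__main__":
    # Hypothetical projection-layer size and LoRA rank, for illustration only.
    d, k, r = 1152, 1152, 16
    full = full_update_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
    # Hypothetical setup: batch of 2 per GPU, 8 accumulation steps, 4 GPUs.
    print("effective batch:", effective_batch_size(2, 8, 4))
```

With these numbers, LoRA trains 36,864 parameters for the layer instead of 1,327,104, which is under 3% of the full update. The effective batch size is 64, even though each GPU only ever holds two samples at a time.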

Conclusion

Working through the Sora model setup in ComfyUI step by step is an exciting entry point into the transformative world of AI-powered video generation. As this guide has shown, running Open-Sora inside ComfyUI’s flexible, node-based environment gives developers, content creators, and organizations powerful capabilities for generating video from text descriptions or static images. The technology democratizes video production, putting sophisticated video generation within reach of people who lack traditional production resources or expertise.

From the initial installation and configuration through workflow creation, optimization, and troubleshooting, successfully implementing this technology requires attention to technical details, patience during the learning curve, and willingness to experiment with parameters and prompts. The investment of time and effort pays dividends in the form of creative capabilities that were simply impossible or prohibitively expensive just a few years ago. For developers and businesses in India’s thriving technology ecosystem and globally, mastering these tools provides competitive advantages in content creation, marketing, education, and entertainment sectors.

The challenges discussed in this guide, namely hardware requirements, quality limitations, ethical considerations, and technical complexity, are real but manageable with proper planning and realistic expectations. As the technology continues to evolve rapidly, many current limitations are likely to diminish while new capabilities emerge. Because both ComfyUI and Open-Sora are open source, improvements benefit the entire community, with contributions from developers worldwide advancing the state of the art.

Looking forward, AI video generation is likely to become increasingly integrated into mainstream content production workflows, complementing rather than replacing traditional methods. The skills you develop working through the Sora model setup in ComfyUI today position you at the forefront of this transformation. Whether you’re a solo creator exploring new creative possibilities, a startup building innovative products, or an established organization optimizing content production, these tools offer tangible value that should grow as the technology matures.

If you’re searching on ChatGPT or Gemini for sora model setup in comfyui step by step, remember that the journey extends beyond initial installation: ongoing learning, community engagement, and experimentation are essential for mastering these capabilities. The ComfyUI and Open-Sora communities offer valuable resources, shared workflows, and collective knowledge that accelerate your progress. Don’t hesitate to engage with these communities, share your own discoveries, and contribute to the growing ecosystem.

We encourage you to explore related content on mernstackdev.com, where you’ll find additional tutorials, insights, and resources covering AI technologies, web development, and modern software engineering practices. Whether you’re implementing AI video generation, exploring other machine learning applications, or building full-stack applications, our comprehensive guides provide practical, actionable knowledge that helps you succeed in today’s technology landscape.

The future of content creation is being shaped by technologies like Sora and platforms like ComfyUI. By investing time in understanding and implementing these tools today, you’re not just learning a specific technology—you’re developing skills and perspectives that will remain relevant as AI continues transforming creative and technical industries. Start experimenting, build your workflows, and discover the creative possibilities that emerge when you combine human creativity with AI capabilities. The journey may be challenging, but the destination—the ability to generate compelling video content with unprecedented flexibility and control—makes every step worthwhile.

Additional Resources and Community Support

Official Documentation and Repositories

To deepen your understanding of the Sora model setup in ComfyUI and stay updated with the latest developments, use these resources:

The primary ComfyUI-Open-Sora-I2V GitHub repository contains the latest code, documentation, and issue tracking for the image-to-video implementation. This repository is actively maintained and includes example workflows, troubleshooting guides, and community contributions. Developers in India and worldwide contribute to this project, making it a truly global collaborative effort.

The RunComfy documentation for ComfyUI-Open-Sora provides comprehensive information about node configurations, parameter explanations, and workflow examples. This resource is particularly helpful for understanding the specific capabilities and limitations of different nodes within your workflows.

For visual learners, the detailed video tutorial on YouTube walks through the entire setup process, demonstrating real-time troubleshooting and workflow creation. Watching someone else navigate the installation process can clarify steps that might seem confusing in written documentation.

Community Forums and Support Channels

The ComfyUI community is remarkably active and helpful across multiple platforms. The ComfyUI subreddit (r/comfyui) hosts daily discussions, workflow sharing, troubleshooting threads, and announcements about new features and custom nodes. Reddit’s threaded discussion format makes it easy to search for solutions to specific problems you might encounter.

Discord servers dedicated to ComfyUI and AI art generation provide real-time chat with experienced users who can help troubleshoot issues, suggest workflow improvements, and share their latest discoveries. These communities are generally welcoming to newcomers and appreciate questions that show you’ve attempted to solve problems yourself first.

GitHub Issues sections on the various Open-Sora and ComfyUI repositories serve as official bug tracking and feature request channels. Before creating a new issue, search existing issues to see if your problem has already been reported or resolved. Contributing to these discussions helps improve the tools for everyone.

Learning Pathways and Skill Development

For developers new to AI video generation, building a structured learning pathway helps progress from basic implementation to advanced techniques:

Foundation Phase: Start with understanding basic ComfyUI workflows for image generation before tackling video. Master node connections, parameter adjustments, and prompt engineering in simpler contexts. This foundation transfers directly to video generation workflows.

Implementation Phase: Follow this guide to complete your Sora model setup in ComfyUI step by step, focusing on getting a basic text-to-video workflow running. Don’t worry about optimization yet: first achieve working results, then refine.

Experimentation Phase: Generate dozens of test videos with varying prompts, parameters, and settings. Document what works and what doesn’t. Build your personal knowledge base of effective techniques for your specific use cases.

Optimization Phase: Once you are comfortable with basic generation, focus on quality improvements, efficiency, and workflow refinement. This is the phase in which you reach professional-grade results and build efficient production pipelines.

Specialization Phase: Identify your primary use case—whether product videos, educational content, artistic projects, or another application—and develop specialized workflows and prompt libraries optimized for that domain.
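
The Experimentation Phase is easier to run systematically if variations are generated and logged by a script rather than edited by hand. ComfyUI’s built-in server accepts a workflow in API format as JSON through a POST to its `/prompt` endpoint; ComfyUI’s own API example script uses the same pattern. The sketch below builds every combination of prompt, step count, and CFG value, optionally queues each one, and appends the settings to a JSON Lines log you can annotate. It assumes a local server on the default port 8188. The node ids, class types, and input names in `BASE_WORKFLOW` are hypothetical stand-ins: replace them with the ones from your own Open-Sora graph exported with “Save (API Format)”.

```python
import copy
import itertools
import json
import urllib.request
from pathlib import Path
from typing import Dict, Iterator, List, Tuple

# Hypothetical API-format workflow. Node ids, class types, and input names are
# stand-ins; copy the real ones from your own exported Open-Sora workflow.
BASE_WORKFLOW: Dict[str, dict] = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": ""}},
    "3": {"class_type": "KSampler", "inputs": {"seed": 0, "steps": 20, "cfg": 7.0}},
}
PROMPT_NODE, SAMPLER_NODE = "6", "3"


def build_variants(prompts: List[str], steps_options: List[int],
                   cfg_options: List[float], seed: int = 42) -> Iterator[Tuple[dict, dict]]:
    """Yield (settings, workflow) for every prompt x steps x cfg combination.

    The seed is held fixed so differences between runs come from the swept settings.
    """
    for prompt, steps, cfg in itertools.product(prompts, steps_options, cfg_options):
        workflow = copy.deepcopy(BASE_WORKFLOW)  # never mutate the template
        workflow[PROMPT_NODE]["inputs"]["text"] = prompt
        workflow[SAMPLER_NODE]["inputs"].update({"seed": seed, "steps": steps, "cfg": cfg})
        yield {"prompt": prompt, "steps": steps, "cfg": cfg, "seed": seed}, workflow


def queue_prompt(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """POST one workflow to a running ComfyUI server's /prompt endpoint."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(f"{server}/prompt", data=body,
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


def log_run(log_path: Path, settings: dict, notes: str = "") -> None:
    """Append one experiment record as a JSON line for your personal knowledge base."""
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps({**settings, "notes": notes}) + "\n")


if __name__ == "__main__":
    variants = list(build_variants(
        prompts=["a paper boat drifting down a rainy street",
                 "timelapse of clouds rolling over mountains"],
        steps_options=[20, 30],
        cfg_options=[5.0, 7.0],
    ))
    print(len(variants), "variants")  # 2 prompts x 2 step counts x 2 cfg values
    # With ComfyUI running locally, queue and log each variant:
    # for settings, workflow in variants:
    #     queue_prompt(workflow)
    #     log_run(Path("experiments.jsonl"), settings)
```

Keeping the seed fixed is a deliberate choice. It isolates the effect of the swept parameters, so when you later add notes to `experiments.jsonl`, the differences you record are attributable to the settings rather than to random variation.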

Staying Current with Developments

AI video generation technology evolves rapidly, with new models, techniques, and capabilities emerging regularly. To stay current, follow key researchers and organizations on social media platforms, subscribe to AI newsletters that cover generative media developments, attend virtual conferences and webinars focused on AI and creative technologies, and participate in online courses that update their content as the field advances.

For developers in India’s technology hubs, local AI meetups and conferences provide opportunities to connect with others implementing these technologies and to share insights specific to regional markets and use cases. AI meetup groups in Bangalore, Mumbai’s tech startup ecosystem, and Hyderabad’s growing AI research community regularly host events where practitioners share experiences and learn from each other.

Expanding Your AI Content Creation Toolkit

Complementary Technologies

While the Sora model setup in ComfyUI provides powerful video generation capabilities, combining it with complementary tools creates complete content production pipelines:

Audio Generation: Tools like ElevenLabs, Bark, or MusicGen can generate voiceovers, sound effects, and background music to accompany your generated videos. Integrating audio generation into your video workflows lets you produce complete multimedia content with minimal manual assembly.

Image Enhancement: AI upscalers like Real-ESRGAN or image enhancement models can improve the quality of generated frames before assembling them into videos, producing sharper, more detailed final outputs.

Video Post-Processing: Frame interpolation tools like RIFE or DAIN can increase frame rates of generated videos, creating smoother motion. Color grading tools and video editors help refine AI-generated content into polished final products.

3D Integration: For advanced use cases, integrating 3D rendering engines with AI video generation creates hybrid workflows where 3D elements combine with AI-generated backgrounds or effects, offering even greater creative control.
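
Two of the post-processing steps above are easy to reason about in code: how many frames 2x interpolation produces, and how to attach a generated audio track to the video. The sketch below is minimal and assumes ffmpeg is installed and on your PATH. The file names are hypothetical. The interpolation helper only models frame counts and does not call RIFE or DAIN, whose command-line interfaces differ between releases.

```python
from pathlib import Path
from typing import List


def interpolated_frame_count(frames: int, passes: int = 1) -> int:
    """Frame count after `passes` rounds of 2x interpolation.

    Each round inserts one synthesized frame between every adjacent pair,
    so n frames become 2n - 1.
    """
    if frames < 1 or passes < 0:
        raise ValueError("frames must be >= 1 and passes >= 0")
    for _ in range(passes):
        frames = 2 * frames - 1
    return frames


def mux_audio_command(video: Path, audio: Path, output: Path) -> List[str]:
    """Build an ffmpeg command that adds an audio track to a generated clip."""
    return [
        "ffmpeg", "-y",          # overwrite the output file without prompting
        "-i", str(video),
        "-i", str(audio),
        "-map", "0:v:0",         # video stream from the first input
        "-map", "1:a:0",         # audio stream from the second input
        "-c:v", "copy",          # keep the generated frames as-is (no re-encode)
        "-c:a", "aac",           # AAC audio, widely supported in MP4 containers
        "-shortest",             # stop when the shorter stream ends
        str(output),
    ]


if __name__ == "__main__":
    # A hypothetical 16-frame clip at 8 fps: one 2x pass gives 31 frames, and
    # playing them at 16 fps keeps the clip's duration unchanged.
    print(interpolated_frame_count(16), interpolated_frame_count(16, passes=2))
    print(" ".join(mux_audio_command(Path("clip.mp4"), Path("voiceover.wav"),
                                     Path("clip_with_audio.mp4"))))
```

To execute the mux step, pass the list to `subprocess.run(cmd, check=True)`. Building the command as a list rather than a single shell string avoids quoting problems with file names that contain spaces.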

Building Production Pipelines

For organizations implementing AI video generation at scale, building robust production pipelines ensures consistent quality and efficient operations:

```text
# Example pipeline architecture

1. Content Planning Layer
   - Define video requirements and specifications
   - Generate prompt templates for consistency
   - Schedule batch generation jobs
2. Generation Layer
   - ComfyUI with Open-Sora workflows
   - Automated prompt variation generation
   - Quality control checkpoints
3. Post-Processing Layer
   - Automated upscaling and enhancement
   - Audio integration
   - Format conversion and optimization
4. Review and Approval Layer
   - Content moderation checks
   - Quality assessment
   - Human review for final approval
5. Distribution Layer
   - Format optimization for different platforms
   - Metadata tagging
   - Upload automation to target platforms
```

This architecture separates concerns, enables parallel processing, and provides quality gates at each stage. Organizations in India’s BPO and content services industries can leverage such pipelines to offer AI-enhanced video production services at competitive prices while maintaining quality standards.
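
The quality-gate idea in this architecture can be expressed compactly. Each layer is a (name, stage, gate) triple: the stage transforms a job, and the gate decides whether the job may move on. A job that fails a gate stops there and records where it was rejected. This is a minimal, single-process sketch. The stage functions are hypothetical stubs standing in for calls to ComfyUI, an upscaler, ffmpeg, or an uploader. The keyword-based moderation check is a naive placeholder for real content moderation and human review. The sketch does not show the parallel processing the architecture also enables.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class VideoJob:
    prompt: str
    artifacts: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)
    rejected_at: Optional[str] = None


# A stage transforms a job; a gate decides whether the job may move on.
Stage = Callable[[VideoJob], VideoJob]
Gate = Callable[[VideoJob], bool]
Layer = Tuple[str, Stage, Gate]


def run_pipeline(job: VideoJob, layers: List[Layer]) -> VideoJob:
    """Run each (name, stage, gate) layer in order, stopping at the first failed gate."""
    for name, stage, gate in layers:
        job = stage(job)
        job.history.append(name)
        if not gate(job):
            job.rejected_at = name
            break
    return job


# Hypothetical stub stages; real ones would call ComfyUI, an upscaler, ffmpeg, an uploader.
def plan(job: VideoJob) -> VideoJob:
    job.artifacts["prompt"] = f"cinematic, steady camera: {job.prompt}"  # template applied
    return job


def generate(job: VideoJob) -> VideoJob:
    job.artifacts["video"] = "render_0001.mp4"  # placeholder output path
    return job


def post_process(job: VideoJob) -> VideoJob:
    job.artifacts["final"] = job.artifacts["video"].replace(".mp4", "_final.mp4")
    return job


BLOCKED_TERMS = {"violence", "gore"}  # hypothetical, deliberately naive moderation list


def moderation_gate(job: VideoJob) -> bool:
    return not any(term in job.prompt.lower() for term in BLOCKED_TERMS)


def passthrough(job: VideoJob) -> VideoJob:
    return job


LAYERS: List[Layer] = [
    ("content planning", plan, lambda j: bool(j.prompt.strip())),
    ("generation", generate, lambda j: "video" in j.artifacts),
    ("post-processing", post_process, lambda j: "final" in j.artifacts),
    ("review", passthrough, moderation_gate),
    ("distribution", passthrough, lambda j: True),
]

if __name__ == "__main__":
    for prompt in ["product shot of a steel water bottle", "a scene of graphic violence"]:
        result = run_pipeline(VideoJob(prompt), LAYERS)
        print(prompt, "->", result.rejected_at or "published", result.history)
```

Because every job carries its own `history` and `rejected_at`, you can count how many jobs each gate stops. That count is a useful signal for deciding which layer needs attention first.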

Measuring ROI and Success Metrics

For businesses implementing the Sora model setup in ComfyUI, tracking return on investment and success metrics helps justify the technology investment and guide optimization efforts:

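Cost Metrics: Compare the cost per video (hardware amortization, electricity, staff time) against traditional production costs. For many use cases, AI generation may reduce costs by 70-90% compared with conventional video production, but the actual figure depends heavily on your hardware, output volume, and the human review time each video needs.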

Time Metrics: Measure the time from concept to finished video. AI workflows can reduce production time from days or weeks to hours, enabling much faster iteration and time-to-market.

Quality Metrics: Establish quality benchmarks appropriate for your use case. While AI-generated videos may not match high-end productions, they often exceed quality requirements for social media, prototypes, or background content.

Scale Metrics: Track the volume of content produced. AI generation enables creating far more content variations than traditional methods, supporting personalization and A/B testing at unprecedented scales.

Business Impact Metrics: Ultimately, measure how AI-generated video content impacts your business objectives—conversion rates, engagement metrics, brand awareness, or whatever KPIs matter to your organization.
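
The cost-per-video comparison under Cost Metrics reduces to simple arithmetic, and writing it down keeps the assumptions visible. This is a minimal calculator. It assumes the three components named there (hardware amortization, electricity, staff time) are the only costs, and it ignores software, storage, and failed generations. Every input is a hypothetical placeholder in a single unspecified currency, not a benchmark.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    hardware_cost: float          # purchase price of the GPU workstation
    amortization_months: int      # period over which the hardware is written off
    videos_per_month: int         # output volume sharing that hardware cost
    gpu_power_kw: float           # average power draw while generating
    gen_hours_per_video: float    # wall-clock generation time per finished video
    electricity_per_kwh: float    # tariff per kilowatt-hour
    staff_hours_per_video: float  # prompting, review, and post-processing time
    staff_rate_per_hour: float    # loaded hourly staff cost


def cost_per_video(c: CostInputs) -> float:
    """Hardware amortization + electricity + staff time for one video."""
    hardware = c.hardware_cost / c.amortization_months / c.videos_per_month
    electricity = c.gpu_power_kw * c.gen_hours_per_video * c.electricity_per_kwh
    staff = c.staff_hours_per_video * c.staff_rate_per_hour
    return hardware + electricity + staff


def savings_pct(ai_cost: float, traditional_cost: float) -> float:
    """Percentage saved relative to the traditional cost per video."""
    return 100.0 * (1 - ai_cost / traditional_cost)


if __name__ == "__main__":
    # Hypothetical numbers only; substitute your own measurements.
    inputs = CostInputs(hardware_cost=360_000, amortization_months=36, videos_per_month=100,
                        gpu_power_kw=0.5, gen_hours_per_video=0.5, electricity_per_kwh=8,
                        staff_hours_per_video=2, staff_rate_per_hour=500)
    ai = cost_per_video(inputs)
    print(f"AI cost per video: {ai:.2f}")
    print(f"savings vs. a traditional cost of 5000: {savings_pct(ai, 5000):.1f}%")
```

In this made-up example, staff time dominates: 1,000 of the 1,102 total. That is typical of what such a breakdown can reveal, because reducing review time may matter more than buying a faster GPU.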

Case Studies and Real-World Implementations

E-Commerce Product Visualization