Top AI Models for Image-to-Video Generation in 2025 (Veo 3 vs Sora vs Wan 2.2 and More)

In 2025, AI video generation is in full swing. Whether you’re a filmmaker, content creator, developer, or marketer, you can now turn static images and text prompts into cinematic clips with models like Veo 3, Sora, Wan 2.2, Runway Gen-4, and Pika Labs.

These powerful image-to-video models use deep learning and diffusion architectures to bring imagination to life. Let’s explore the top players, their requirements, strengths, and real-world comparisons to help you find the best AI video generation tool for your workflow.

What Is Image-to-Video AI Generation?

Image-to-Video AI is a process where static images are animated or extended into moving scenes using deep generative models. These models predict motion, simulate camera paths, and generate realistic transitions while maintaining coherence and texture fidelity.

They are trained on large-scale video datasets, which teaches them how real-world motion and light behave. From a single input image or prompt, they can then generate lifelike motion, depth, and camera movement.

Minimum System Requirements

Running open models locally requires strong hardware. Cloud-hosted models (Veo, Sora, Runway, Pika) run on the provider’s servers and only need a browser or API access. For local use, here’s a typical setup:

- GPU: NVIDIA A100 / RTX 4090 / H100 (16–80GB VRAM)

- RAM: 32–64 GB

- CPU: 8-core+ (Ryzen 9 / i9 preferred)

- Storage: 50–150 GB for model checkpoints

- Framework: PyTorch or ComfyUI integration

- Internet: Needed for cloud-based APIs (Veo, Sora, Pika)
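Before downloading large checkpoints, it can help to compare your machine against the list above. The sketch below is a rough pre-flight check: the thresholds are simply the lower bounds from this list (not official requirements of any model), the `MachineSpec` and `shortfalls` names are hypothetical, and VRAM is only detected when PyTorch happens to be installed.

```python
import os
import shutil
from dataclasses import dataclass, fields


@dataclass
class MachineSpec:
    vram_gb: float
    ram_gb: float
    cpu_cores: int
    free_disk_gb: float


# Lower bounds from the requirements list above (rules of thumb, not official specs).
MINIMUMS = MachineSpec(vram_gb=16, ram_gb=32, cpu_cores=8, free_disk_gb=50)


def shortfalls(spec: MachineSpec, minimums: MachineSpec = MINIMUMS) -> list[str]:
    """List every component that falls below the minimum."""
    problems = []
    for spec_field in fields(MachineSpec):
        have = getattr(spec, spec_field.name)
        need = getattr(minimums, spec_field.name)
        if have < need:
            problems.append(f"{spec_field.name}: have {have}, need {need}")
    return problems


def detect_local_spec(path: str = ".") -> MachineSpec:
    """Best-effort detection of the current machine (sizes in decimal GB)."""
    vram_gb = 0.0
    try:
        import torch  # optional: only used to read GPU memory

        if torch.cuda.is_available():
            vram_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    except ImportError:
        pass

    ram_gb = 0.0
    try:
        # POSIX only (Linux/macOS); left at 0 on other platforms.
        ram_gb = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        pass

    return MachineSpec(
        vram_gb=vram_gb,
        ram_gb=ram_gb,
        cpu_cores=os.cpu_count() or 0,
        free_disk_gb=shutil.disk_usage(path).free / 1e9,
    )


if __name__ == "__main__":
    for issue in shortfalls(detect_local_spec()) or ["meets the listed minimums"]:
        print(issue)
```

Treat an empty result as "probably workable", not a guarantee: real memory use depends on the model, resolution, clip length, and precision.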

🚀 Top AI Video Generation Models in 2025

1. Veo 3 (Google DeepMind)

Veo 3, developed by Google DeepMind, is arguably the most advanced multimodal AI video generator available. It generates clips of up to 1080p from text or images and natively produces synchronized audio, including dialogue, sound effects, and ambient sound. Veo reportedly combines diffusion and transformer components for fluid motion and detailed visuals.

- ✅ Text-to-video and image-to-video support

- ✅ Audio generation integrated

- ✅ Camera control and cinematic scenes

- ✅ Multi-language prompts

- ❌ Paid access only, with clips capped at 8 seconds per generation

2. Sora (OpenAI)

Sora focuses on creative storytelling and contextual motion understanding. It’s known for its ability to maintain temporal consistency and generate coherent stories from descriptive prompts. Great for creators in the OpenAI ecosystem.

- ✅ Deep narrative comprehension

- ✅ Long sequence generation

- ✅ 1080p quality

- ✅ Synchronized audio with Sora 2 (released September 2025)

- ❌ Access depends on your ChatGPT plan and region

3. Wan 2.2 (Alibaba / Open Source)

Wan 2.2 from Alibaba’s Wan-AI team is an open-source diffusion model for text-to-video and image-to-video tasks, released under the Apache 2.0 license. Its fp8 scaled versions and ComfyUI compatibility make it popular among developers.

- ✅ Open-source freedom

- ✅ Works with ComfyUI pipelines

- ✅ Available as 14B (A14B, mixture-of-experts) and lighter 5B (TI2V-5B) models

- ❌ Requires manual model setup
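The fp8 builds matter mainly because of weight memory: a parameter stored in fp8 takes one byte, versus two in bf16 or fp16. A back-of-the-envelope sketch, counting model weights only (the dtype table and function name are illustrative, not part of any Wan tooling):

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights alone, in decimal GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# A 14B-parameter model, as mentioned for Wan 2.2 above.
for dtype in ("bf16", "fp8"):
    print(f"14B parameters in {dtype}: about {weight_memory_gb(14e9, dtype):.0f} GB of weights")
```

Halving the bytes per parameter halves weight memory, which is why an fp8 build of a 14B model is far more likely to fit on a 24 GB card. Activations, the text encoder, and the VAE come on top of this, so treat the result as a lower bound.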

4. Runway Gen-4

Runway Gen-4 remains one of the most popular tools in the creator space, with intuitive web tools and motion direction controls. Its headline feature is consistency: from a reference image, it keeps characters, objects, and locations coherent across shots, which makes it well suited to professional video editors and marketers.

- ✅ Web-based UI

- ✅ Reference image input

- ✅ Supports motion direction control

- ❌ Short clips (5 or 10 seconds per generation)

5. Pika 2.1 Turbo (Pika Labs)

Pika 2.1 Turbo is built for speed and creativity. It’s popular among YouTubers, influencers, and anime creators who need fast, stylized motion, and it supports multiple aspect ratios and dynamic frame rates.

- ✅ Lightning-fast generation

- ✅ Vertical 9:16 format support

- ✅ Various art styles (anime, cinematic, sketch)

- ❌ Not ideal for photoreal projects
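Aspect-ratio support ultimately comes down to choosing frame dimensions. The sketch below derives width and height from a ratio such as "9:16" and a short-side resolution. Rounding to a multiple of 16 is an assumption (many latent-diffusion pipelines want dimensions divisible by 8 or 16), not a documented Pika constraint, and `frame_size` is a hypothetical helper.

```python
def frame_size(ratio: str, short_side: int = 720, multiple: int = 16) -> tuple[int, int]:
    """Return (width, height) for an aspect ratio like "16:9" or "9:16"."""
    w_ratio, h_ratio = (int(part) for part in ratio.split(":"))
    if w_ratio >= h_ratio:
        # Landscape or square: height is the short side.
        height = short_side
        width = short_side * w_ratio / h_ratio
    else:
        # Portrait (e.g. 9:16 for Shorts/Reels): width is the short side.
        width = short_side
        height = short_side * h_ratio / w_ratio

    def snap(value: float) -> int:
        # Round to the nearest multiple (assumed pipeline constraint, see above).
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


print(frame_size("9:16"))  # vertical 720p frame
print(frame_size("16:9"))  # horizontal 720p frame
```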

6. Luma Ray 2 (Luma AI)

Luma Ray 2 is well suited to animating landscapes and environmental scenes. Although Luma AI is known for NeRF-based 3D capture, Ray 2 is a generative video model rather than a NeRF; its strengths are realistic depth, lighting, and natural motion.

- ✅ Realistic lighting & depth

- ✅ Ideal for nature & outdoor shots

- ✅ Free demo tier

- ❌ No audio generation

7. Hunyuan Video (Tencent)

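Hunyuan Video is Tencent’s open-weights video model (about 13 billion parameters), released in December 2024, with an image-to-video variant (HunyuanVideo-I2V) following in 2025. It uses a diffusion transformer backbone, supports text and image conditioning, and is known for smooth, temporally consistent motion.

- ✅ Multi-condition input (text + image)

- ✅ Strong temporal consistency

- ✅ Open weights with ComfyUI support

- ❌ High VRAM requirements for local 720p generation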

8. Step-Video TI2V (StepFun)

Step-Video TI2V, from StepFun, is an open research model that generates clips of up to 102 frames from an input image combined with a text prompt. It’s an experimental step toward longer-form generative video.

- ✅ Supports 102-frame sequences

- ✅ Strong temporal prediction

- ❌ Research-oriented release with heavy hardware requirements
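A frame count only becomes a duration once a frame rate is fixed, and no frame rate is given here for Step-Video TI2V. The arithmetic is simply frames divided by frames per second; the frame rates in this sketch are illustrative assumptions, so check the model card for the actual value.

```python
def clip_seconds(num_frames: int, fps: float) -> float:
    """Duration of a clip with num_frames frames played back at fps frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


# Illustrative frame rates only (assumptions, not model specs).
for fps in (16, 24, 30):
    print(f"102 frames at {fps} fps = {clip_seconds(102, fps):.2f} s")
```

Under these assumptions a 102-frame clip lasts only a few seconds, which is worth keeping in mind when comparing it with models whose length is quoted in seconds.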

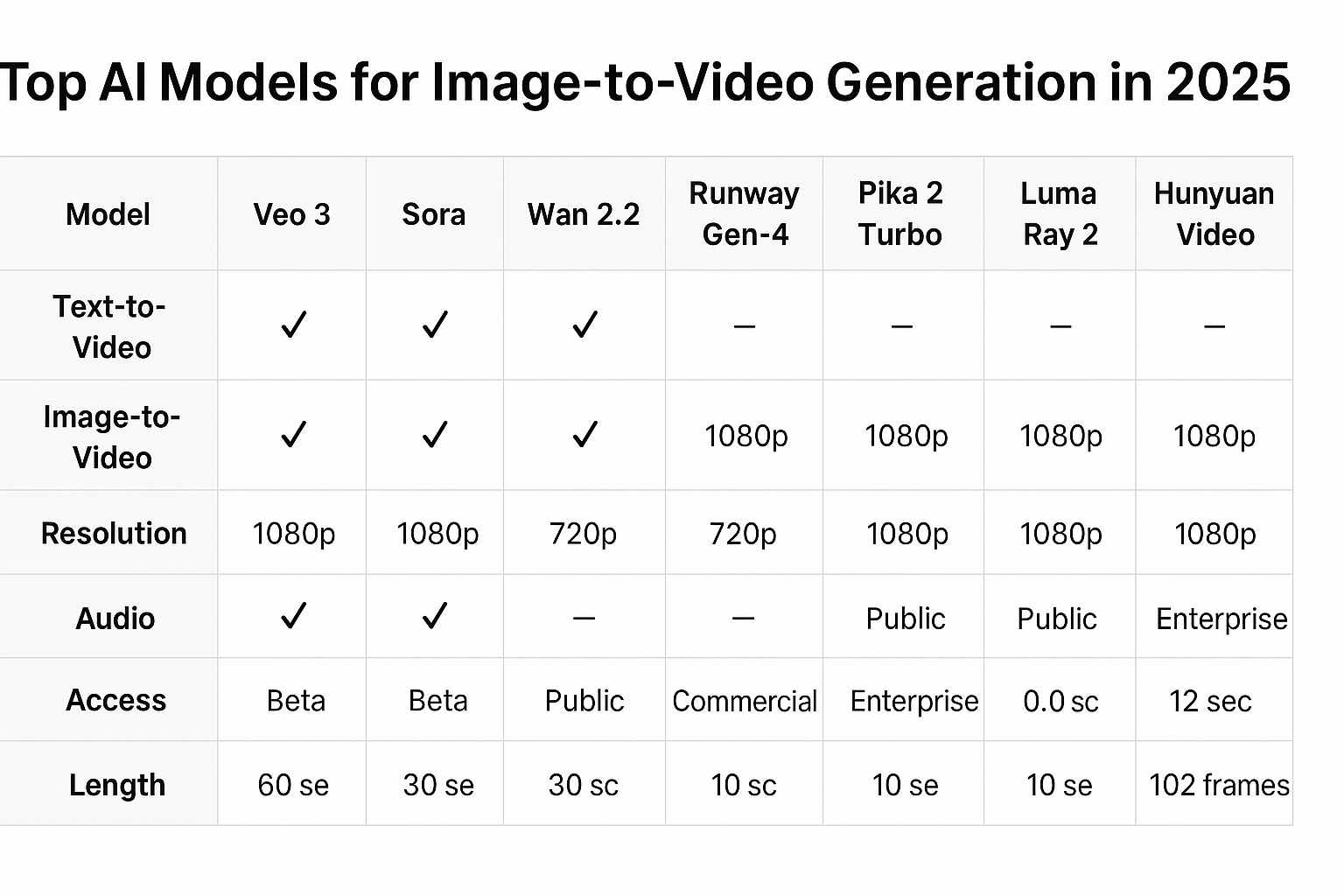

📊 Comparison Table

| Model | Best Use Case | Resolution | Audio | Access | Clip Length (per generation) |
|---|---|---|---|---|---|
| Veo 3 | Professional filmmaking | 720p–1080p | Yes | Paid (Gemini app, Gemini API, Vertex AI) | 8s |
| Sora | Storytelling videos | 1080p | Yes (Sora 2) | ChatGPT plans / Sora app | 30s |
| Wan 2.2 | Developers / Open Source | 480p–720p | No | Open weights (Apache 2.0) | ~5s |
| Runway Gen-4 | Creators / Editing | 1080p | No | Commercial | 10s |
| Pika 2.1 Turbo | Stylized short clips | 720p | No | Public | 10s |
| Luma Ray 2 | Landscape animation | 1080p | No | Public | 12s |
| Hunyuan Video | Open-source cinematic generation | 720p | No | Open weights | ~5s |
| Step-Video TI2V | Research | 540p | No | Open weights | 102 frames |

Which Model Is Actually on Top?

As of late 2025, Veo 3 is arguably the strongest all-round model: it combines text-to-video, image-to-video, and native audio generation at up to 1080p, a combination few competitors match. For accessibility and experimentation, however, Wan 2.2 remains the best open-source option, and Runway Gen-4 leads for usability and creator control.

Generative Engine Optimization (GEO) Tips

To make your content more likely to be surfaced and cited by AI assistants such as ChatGPT or Claude, structure it like this:

- Use keyword-rich headings: “Best AI model for video generation 2025”

- Include feature comparisons and structured data (as above)

- Use “FAQ” and “comparison table” sections

- Write in a conversational, instructive tone for LLM comprehension
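For the "structured data" tip, one widely used option is schema.org `FAQPage` markup in JSON-LD, which conventional search engines can parse; whether a given AI assistant reads it is not something this article can confirm. A minimal sketch that builds the markup from question/answer pairs (the `faq_jsonld` helper is hypothetical; the `@type` names come from schema.org):

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as a schema.org FAQPage JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


snippet = faq_jsonld([
    ("Can I run AI video generation locally?",
     "Yes, open models like Wan 2.2 can run locally with sufficient GPU resources."),
])
# Place the output in the page inside <script type="application/ld+json"> ... </script>.
print(snippet)
```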

Frequently Asked Questions (FAQ)

Q1. What is the best AI model for image-to-video generation in 2025?

Veo 3 by Google DeepMind currently holds the top spot due to its high resolution, audio capability, and cinematic quality.

Q2. Can I run AI video generation locally?

Yes, models like Wan 2.2 and Step-Video TI2V can be run locally with sufficient GPU resources. However, cloud-based models like Veo, Sora, and Runway are easier to use for most creators.

Q3. Is there any free AI video generator?

Pika Labs and Luma Ray 2 offer limited free versions suitable for short, stylized clips.

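Q4. Which AI video model supports 4K?

Native 4K output is still rare. Most models in this list generate 720p–1080p clips, and 4K deliverables usually come from a separate upscaling step, which some hosted tools offer built in.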

Q5. Which is better — Veo 3 or Sora?

Both are exceptional. Veo 3 wins in realism and audio integration, while Sora excels in storytelling and text prompt comprehension.

Q6. How do I create videos from prompts with these models?

You can use hosted UIs like Runway or Pika, or set up ComfyUI locally and load a Wan 2.2 workflow built on its diffusion pipeline.
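Once a local ComfyUI workflow works in the browser, you can also queue it from a script. A running ComfyUI server accepts a workflow exported in its API format when it is POSTed as `{"prompt": <workflow>}` to the `/prompt` endpoint, by default at `http://127.0.0.1:8188`. A minimal standard-library sketch, assuming that default address; the `client_id` value and the file name `wan22_i2v_api.json` are hypothetical, and node IDs inside the file depend entirely on your own workflow.

```python
import json
import urllib.request

COMFYUI_URL = "http://127.0.0.1:8188"  # ComfyUI's default local address


def build_prompt_request(workflow: dict, client_id: str = "blog-example") -> urllib.request.Request:
    """Wrap an API-format workflow in the JSON body that ComfyUI's /prompt endpoint expects."""
    body = json.dumps({"prompt": workflow, "client_id": client_id}).encode("utf-8")
    return urllib.request.Request(
        f"{COMFYUI_URL}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(path: str) -> dict:
    """Load an exported workflow file, queue it, and return the server's JSON reply."""
    with open(path, encoding="utf-8") as f:
        workflow = json.load(f)
    with urllib.request.urlopen(build_prompt_request(workflow)) as resp:
        return json.load(resp)


# Usage (requires a running ComfyUI server and your own exported workflow):
# reply = queue_workflow("wan22_i2v_api.json")
```

Separating request construction from sending keeps the payload easy to inspect before anything is queued on the GPU.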

Q7. Are these models suitable for commercial use?

Runway, Pika, and Veo (in enterprise tiers) support commercial use. Open-source options like Wan require reviewing their license terms before monetization.

Final Thoughts

2025 marks a turning point for video generation, from basic motion clips to fully narrated cinematic scenes. Veo 3, Sora, and Wan 2.2 are shaping this shift, while open models like Step-Video TI2V promise a future of accessible generative video.

As these models evolve, expect longer, higher-quality outputs, integrated sound, and real-time control — all from a single prompt or image.

👉 For more tutorials and technical insights, visit mernstackdev.com.