WAN 2.2 Animate with Infinite Talk Full Setup in ComfyUI: Complete 2025 Guide

Introduction: Revolutionizing Character Animation with WAN 2.2 Animate and Infinite Talk

This article explains how to set up WAN 2.2 Animate with Infinite Talk in ComfyUI, with step-by-step instructions, model downloads, and optimization techniques. Combining WAN 2.2 Animate with Infinite Talk gives creators an AI-powered character animation and lip-sync workflow that generates realistic, expression-driven videos.

WAN 2.2 Animate is an advanced video-to-video model that excels at character animation, face swapping, and motion transfer while maintaining identity consistency throughout the generated footage. When combined with Infinite Talk, this powerful workflow enables unlimited-length talking animations with accurate lip synchronization, making it an essential tool for content creators, animators, and digital artists working on character-driven video projects.

This guide walks through the complete setup of WAN 2.2 Animate with Infinite Talk in ComfyUI, covering everything from system requirements and model downloads to workflow configuration and optimization strategies. Whether you’re running a high-end GPU or working with limited VRAM, you’ll see how to use this workflow to create professional-quality animated videos directly within the ComfyUI environment.

The workflow combines multiple AI technologies including pose detection, facial expression tracking, and audio-driven lip synchronization to produce results that rival traditional animation methods. For developers and AI enthusiasts exploring resources on MERN Stack Dev, understanding these advanced AI workflows opens new possibilities for web-based video generation applications and interactive content creation platforms.

Understanding WAN 2.2 Animate with Infinite Talk in ComfyUI

What Makes WAN 2.2 Animate Special?

WAN 2.2 Animate represents a significant evolution in character-focused video generation models. Built on a Mixture-of-Experts (MoE) architecture, this 14-billion parameter model delivers exceptional quality across denoising steps, resulting in clean motion transfer and stable identity preservation. The model operates in two primary modes: animation mode, where it generates videos of a character image mimicking human motion from input footage, and replacement mode, where it replaces characters within existing videos while maintaining their expressions and movements.

Key Capabilities: The combined WAN 2.2 Animate and Infinite Talk workflow enables precise replication of facial expressions, holistic body movement control through spatially-aligned skeleton signals, and seamless environmental integration that matches lighting and color tones for natural-looking results.

Infinite Talk Integration for Lip-Sync Perfection

Infinite Talk enhances WAN 2.2 Animate by providing audio-driven lip synchronization capabilities. This integration allows for unlimited-length talking animations where character mouth movements precisely match audio input or driving video speech patterns. The system uses advanced audio processing to generate phoneme-accurate lip movements while maintaining natural facial expressions and head movements that complement the speech patterns.

The combination works by processing audio waveforms to extract temporal features that drive facial animation parameters. These parameters are then integrated with WAN 2.2’s motion transfer pipeline to create cohesive animations where speaking, expressions, and body language all work together naturally. This makes the workflow ideal for creating virtual presenters, animated characters for storytelling, educational content, and any application requiring realistic talking animations.

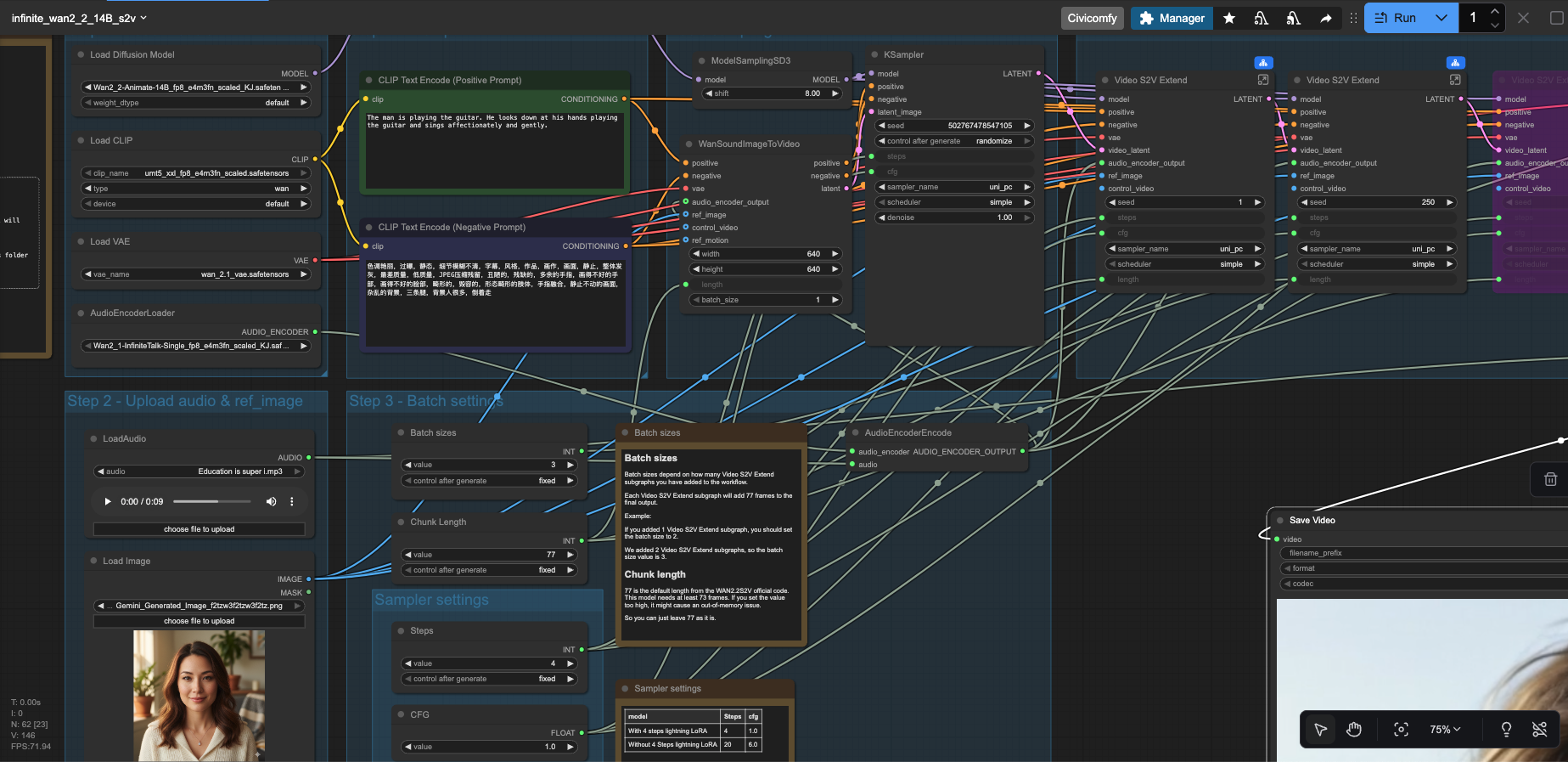

Complete ComfyUI workflow setup for WAN 2.2 Animate with Infinite Talk integration

System Requirements and Prerequisites for WAN 2.2 Animate Setup

Hardware Requirements

Running the WAN 2.2 Animate and Infinite Talk workflow in ComfyUI requires careful attention to your system specifications. Of the variants covered here, the FP8 (8-bit floating-point) model delivers the highest quality but demands substantial VRAM, typically 16-24GB of GPU memory for smooth operation. Users with an NVIDIA RTX 4090, RTX 4080, or a comparable professional-grade GPU will get the best performance from the FP8 models.

| Configuration | GPU | VRAM | RAM | Recommended Model |
|---|---|---|---|---|
| High-End Setup | RTX 4090 / RTX 3090 | 24GB | 32GB+ | FP8 Scaled |
| Mid-Range Setup | RTX 4080 / RTX 3080 Ti | 12-16GB | 32GB | FP8 (with memory optimizations) or GGUF Q8 |
| Budget Setup | RTX 3060 / RTX 4060 Ti | 8-12GB | 16GB+ | GGUF Q4 |

For systems with limited VRAM, the GGUF Q4 quantized version provides an excellent alternative, maintaining impressive quality while reducing memory usage by approximately 60-70%. This makes the workflow accessible to users with mainstream gaming GPUs, democratizing access to professional-grade character animation capabilities.
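As a rough illustration of the tiers above, the sketch below maps an amount of GPU memory to a suggested variant. The function name and the exact cut-offs are assumptions made for this example; in particular, it conservatively treats anything under 16GB as the budget tier.

```python
def recommend_variant(vram_gb: float) -> str:
    """Map available GPU memory (in GB) to a model variant from the table above.

    The thresholds are illustrative assumptions, not limits enforced by ComfyUI.
    """
    if vram_gb >= 24:
        return "FP8 Scaled"
    if vram_gb >= 16:
        return "FP8 or GGUF Q8"
    if vram_gb >= 8:
        return "GGUF Q4"
    return "below the 8GB minimum covered by this guide"


if __name__ == "__main__":
    for vram in (24, 16, 12, 8, 6):
        print(f"{vram}GB -> {recommend_variant(vram)}")
```

On cards that sit between tiers, starting with the smaller variant and moving up only if memory allows is usually the safer order.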

Software Prerequisites

Before proceeding with the WAN 2.2 Animate installation, ensure your ComfyUI installation is updated to the latest version. The workflow requires several custom nodes and dependencies that are critical for proper functionality. Your Python environment should be running version 3.10 or higher, with PyTorch 2.4.0 or newer installed. The flash_attn library, while optional, significantly improves performance and should be installed if your system supports it.

    # Update ComfyUI (run from inside the ComfyUI directory)
    git pull origin master

    # Install required Python packages
    # (the quotes stop the shell from treating ">=" as an output redirect)
    pip install "torch>=2.4.0" torchvision torchaudio
    pip install transformers accelerate
    pip install opencv-python pillow numpy

    # Optional but recommended for performance
    pip install flash_attn
    pip install xformers

Additionally, you’ll need to install the ComfyUI Manager if not already present, as it simplifies the installation of custom nodes required for WAN 2.2 Animate workflows. The manager automates dependency resolution and ensures all necessary components are properly configured within your ComfyUI environment.
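The version requirements in this section can be checked with a few lines of standard-library Python. This is a minimal sketch: the helper names are made up for this example, and the version parser only looks at the first three numeric components, which is enough for the 3.10 and 2.4.0 minimums mentioned here.

```python
import importlib.metadata
import importlib.util
import re
import sys


def parse_version(text: str) -> tuple:
    """Turn '2.4.1+cu121' into (2, 4, 1); the local build tag after '+' is ignored."""
    public = text.split("+", 1)[0]
    numbers = [int(part) for part in re.findall(r"\d+", public)[:3]]
    numbers += [0] * (3 - len(numbers))  # pad '2.4' to (2, 4, 0)
    return tuple(numbers)


def meets_minimum(installed: str, minimum: str) -> bool:
    """Compare release numbers numerically, so 2.10.0 counts as newer than 2.4.0."""
    return parse_version(installed) >= parse_version(minimum)


def report() -> None:
    """Print the status of Python, PyTorch, and the optional speed-up packages."""
    python_ok = sys.version_info >= (3, 10)
    print(f"Python {sys.version.split()[0]}: {'ok' if python_ok else 'needs 3.10+'}")
    try:
        torch_version = importlib.metadata.version("torch")
        torch_ok = meets_minimum(torch_version, "2.4.0")
        print(f"torch {torch_version}: {'ok' if torch_ok else 'needs 2.4.0+'}")
    except importlib.metadata.PackageNotFoundError:
        print("torch: not installed")
    for optional in ("flash_attn", "xformers"):
        found = importlib.util.find_spec(optional) is not None
        print(f"{optional}: {'installed' if found else 'not installed (optional)'}")


if __name__ == "__main__":
    report()
```

Run it with the same Python interpreter that launches ComfyUI; otherwise it reports on a different environment.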

Complete Model Downloads and Installation for WAN 2.2 Animate

Essential Model Files and Hugging Face Downloads

Setting up WAN 2.2 Animate with Infinite Talk in ComfyUI requires downloading several model files from Hugging Face repositories. Each model serves a specific purpose in the animation pipeline, from the core diffusion model to text encoders and specialized components for lip synchronization.

- WAN 2.2 Animate 14B FP8 Model (Primary) 📁 Folder: ComfyUI/models/diffusion_models/ 📄 File: Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors (28GB)

- WAN 2.2 Animate GGUF Q4 (Low VRAM Alternative) 📁 Folder: ComfyUI/models/diffusion_models/ 📄 File: Wan2_2-Animate-14B_Q4_KS.gguf (8GB)

- UMT5 XXL Text Encoder FP8 📁 Folder: ComfyUI/models/text_encoders/ 📄 File: umt5_xxl_fp8_e4m3fn_scaled.safetensors (9GB)

- WAN 2.1 VAE (Video Autoencoder) 📁 Folder: ComfyUI/models/vae/ 📄 File: wan_2.1_vae.safetensors (1.2GB)

- WAN 2.1 InfiniteTalk Multi Q8 (Lip Sync) 📁 Folder: ComfyUI/models/diffusion_models/ 📄 File: Wan2_1-InfiniteTalk_Multi_Q8.gguf (4GB)

- WAN Animate Relight LoRA (Color Correction) 📁 Folder: ComfyUI/models/loras/ 📄 File: WanAnimate_relight_lora_fp16.safetensors (450MB)

- UNI3C ControlNet (Camera Motion – Optional) 📁 Folder: ComfyUI/models/controlnet/ 📄 File: uni3c_controlnet.safetensors (2.5GB)

Automated Download Using Hugging Face CLI

For users comfortable with command-line interfaces, the Hugging Face CLI offers a streamlined method to download all necessary models. This approach ensures files are correctly named and can resume interrupted downloads, which is particularly useful for large model files.

    # Install Hugging Face CLI
    pip install "huggingface_hub[cli]"

    # Download the WAN 2.2 Animate FP8 model.
    # The pattern is limited to the file listed above; a bare "*.safetensors"
    # would pull every model stored in this repository.
    huggingface-cli download Kijai/WanVideo_comfy_fp8_scaled \
      --local-dir ComfyUI/models/diffusion_models/ \
      --include "*Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors"

    # Download text encoder (the leading * also matches files inside subfolders)
    huggingface-cli download Comfy-Org/Wan_2.2_ComfyUI_Repackaged \
      --local-dir ComfyUI/models/text_encoders/ \
      --include "*umt5_xxl_fp8_e4m3fn_scaled.safetensors"

    # Download VAE
    huggingface-cli download Wan-AI/Wan2.1-VAE \
      --local-dir ComfyUI/models/vae/ \
      --include "*.safetensors"

    # Download InfiniteTalk for lip-sync
    huggingface-cli download Kijai/WanVideo_comfy \
      --local-dir ComfyUI/models/diffusion_models/ \
      --include "*InfiniteTalk*.gguf"

    # --local-dir keeps each repository's folder layout, so move any file that
    # lands in a subfolder up into the model folder shown above.

Important Note: Ensure you have sufficient disk space before downloading. The complete model collection requires approximately 45-50GB for the FP8 setup or 25-30GB for the GGUF Q4 configuration. Download times vary based on your internet connection speed, with the full FP8 setup typically taking 2-4 hours on standard broadband connections.
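The disk-space figures follow from adding up the approximate file sizes in the model list. The sketch below repeats that arithmetic and compares the total with the free space on a drive; the 5GB safety margin and the function names are assumptions made for this example.

```python
import shutil

# Approximate sizes in GB, copied from the model list in this guide.
SHARED_GB = {
    "umt5_xxl_fp8_e4m3fn_scaled.safetensors": 9.0,
    "wan_2.1_vae.safetensors": 1.2,
    "Wan2_1-InfiniteTalk_Multi_Q8.gguf": 4.0,
    "WanAnimate_relight_lora_fp16.safetensors": 0.45,
    "uni3c_controlnet.safetensors": 2.5,  # optional
}
MAIN_MODEL_GB = {"fp8": 28.0, "gguf_q4": 8.0}


def required_gb(variant: str) -> float:
    """Total download size for one setup: main model plus the shared files."""
    return MAIN_MODEL_GB[variant] + sum(SHARED_GB.values())


def has_room(path: str, variant: str, margin_gb: float = 5.0) -> bool:
    """True if the drive holding `path` has space for the setup plus a margin."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb(variant) + margin_gb


if __name__ == "__main__":
    for variant in MAIN_MODEL_GB:
        print(variant, round(required_gb(variant), 2), "GB, fits:", has_room(".", variant))
```

The totals come to roughly 45GB and 25GB, which is the lower end of the ranges quoted above.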

Verifying Model Installation

After downloading all models, verify that files are correctly placed in their designated folders. Incorrect folder placement is one of the most common issues when setting up this workflow. Your directory structure should match the following layout:

    ComfyUI/
    ├── models/
    │   ├── diffusion_models/
    │   │   ├── Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors
    │   │   └── Wan2_1-InfiniteTalk_Multi_Q8.gguf
    │   ├── text_encoders/
    │   │   └── umt5_xxl_fp8_e4m3fn_scaled.safetensors
    │   ├── vae/
    │   │   └── wan_2.1_vae.safetensors
    │   ├── loras/
    │   │   └── WanAnimate_relight_lora_fp16.safetensors
    │   └── controlnet/
    │       └── uni3c_controlnet.safetensors (optional)

Installing Required ComfyUI Custom Nodes for WAN 2.2 Animate

The WAN 2.2 Animate and Infinite Talk workflow requires several custom nodes that extend ComfyUI’s functionality. These nodes handle model loading, preprocessing, pose detection, and video encoding specific to the WAN workflow. Installing these components is essential for the workflow to function properly.

Essential Custom Nodes Installation

- ComfyUI-KJNodes: This comprehensive node pack provides essential utilities for video processing, batching, and conditioning. It includes nodes for frame extraction, video loading, and temporal operations crucial for animation workflows.

      cd ComfyUI/custom_nodes/
      git clone https://github.com/kijai/ComfyUI-KJNodes.git
      cd ComfyUI-KJNodes
      pip install -r requirements.txt

- ComfyUI-AdvancedLivePortrait: Handles facial landmark detection and expression tracking, enabling precise face animation and lip-sync capabilities when combined with Infinite Talk.

      cd ComfyUI/custom_nodes/
      git clone https://github.com/PowerHouseMan/ComfyUI-AdvancedLivePortrait.git
      cd ComfyUI-AdvancedLivePortrait
      pip install -r requirements.txt

- ComfyUI-VideoHelperSuite: Provides advanced video input/output operations, including audio handling, frame rate control, and video codec optimization necessary for high-quality output rendering.

      cd ComfyUI/custom_nodes/
      git clone https://github.com/Kosinkadink/ComfyUI-VideoHelperSuite.git
      cd ComfyUI-VideoHelperSuite
      pip install -r requirements.txt

- ComfyUI-WanAnimate: The core custom node package specifically designed for WAN 2.2 Animate integration, containing specialized samplers and model loaders optimized for character animation workflows.

      cd ComfyUI/custom_nodes/
      git clone https://github.com/kijai/ComfyUI-WanAnimate.git
      cd ComfyUI-WanAnimate
      pip install -r requirements.txt

After installing all custom nodes, restart ComfyUI completely to ensure all new nodes are properly loaded and registered. You can verify successful installation by checking the node browser in ComfyUI; all WAN-related nodes should appear under their respective categories.

Troubleshooting Custom Node Installation

If you encounter issues during custom node installation, common solutions include clearing Python cache, reinstalling dependencies with specific version constraints, and ensuring your virtual environment is properly activated. The ComfyUI console log provides detailed error messages that can guide troubleshooting efforts.

Step-by-Step Workflow Setup for WAN 2.2 Animate with Infinite Talk

Loading the Pre-Configured Workflow JSON

The fastest way to get started with WAN 2.2 Animate and Infinite Talk in ComfyUI is by loading a pre-configured workflow JSON file. Download the official workflow configuration that includes all necessary nodes properly connected and configured:

Download Workflow: infinite_wan2_2_14B_s2v.json

This workflow file contains the complete node graph for WAN 2.2 Animate with Infinite Talk integration, including proper model loading, pose extraction, and lip-sync configuration. Simply drag and drop this JSON file into ComfyUI to load the entire workflow instantly.
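Before loading a workflow, it can help to see which node types it expects, since any type that is not installed shows up as a missing node. The sketch below counts node types in a workflow dictionary. It assumes the two common layouts: an editor export with a top-level `nodes` list whose entries carry a `type` field, and the API format, where each entry carries `class_type`. The sample data is invented for illustration.

```python
import json
from collections import Counter


def node_types(workflow: dict) -> Counter:
    """Count node types in a ComfyUI workflow (editor export or API format)."""
    if isinstance(workflow.get("nodes"), list):
        # Editor export: {"nodes": [{"id": 1, "type": "LoadImage", ...}, ...]}
        return Counter(node["type"] for node in workflow["nodes"])
    # API format: {"1": {"class_type": "LoadImage", "inputs": {...}}, ...}
    return Counter(
        node["class_type"]
        for node in workflow.values()
        if isinstance(node, dict) and "class_type" in node
    )


if __name__ == "__main__":
    # Invented sample standing in for a real workflow file.
    sample = json.loads(
        '{"nodes": [{"id": 1, "type": "LoadImage"},'
        ' {"id": 2, "type": "LoadImage"},'
        ' {"id": 3, "type": "VHS_VideoCombine"}]}'
    )
    for name, count in sorted(node_types(sample).items()):
        print(f"{count} x {name}")
```

To inspect a real file, replace the sample with `json.load(open(path, encoding="utf-8"))` and compare the printed names with the nodes available in your installation.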

Understanding the Workflow Architecture

The WAN 2.2 Animate workflow consists of several interconnected processing stages, each handling specific aspects of the animation pipeline. Understanding this architecture helps troubleshoot issues and customize the workflow for specific use cases.

- Input Stage: This section handles loading your source character image and driver video. The character image should be a clear, front-facing portrait with good lighting and resolution (recommended 512×512 to 1024×1024 pixels). The driver video contains the motion and expressions you want to transfer to your character.

- Preprocessing Stage: Multiple preprocessing nodes extract pose information using DWPose or MediaPipe, detect facial landmarks, and prepare temporal conditioning data. This stage ensures accurate motion tracking and consistent character identity throughout the animation.

- Model Loading Stage: The WAN 2.2 Animate diffusion model, UMT5 text encoder, and VAE are loaded with appropriate precision settings. For FP8 models, ensure the “scaled” option is enabled in the loader node. The Infinite Talk model loads separately for lip-sync processing.

- Generation Stage: The core diffusion sampling process where WAN 2.2 Animate generates video frames based on character identity, pose conditioning, and optional text prompts. This stage benefits most from GPU acceleration and proper VRAM management.

- Lip-Sync Integration: When using Infinite Talk, audio features are extracted and synchronized with facial movements. The system analyzes phonemes and timing to generate accurate mouth movements that match the audio track or driver video speech.

- Post-Processing & Output: Final frames undergo VAE decoding, optional color correction using the relight LoRA, and video encoding with audio synchronization. The VideoHelperSuite nodes handle output file generation with your preferred codec and quality settings.

Configuring Key Workflow Parameters

Optimizing the WAN 2.2 Animate and Infinite Talk workflow requires adjusting several critical parameters based on your hardware capabilities and desired output quality:

| Parameter | Recommended Value | Impact |
|---|---|---|
| Steps | 30-50 | Higher steps improve quality but increase generation time |
| CFG Scale | 6.0-8.0 | Controls prompt adherence; higher values increase stylization |
| Frame Count | 49-97 frames | Longer sequences require more VRAM and processing time |
| Resolution | 512×512 to 768×768 | Higher resolutions demand significantly more VRAM |
| Pose Strength | 0.7-1.0 | Controls how strictly pose guidance is followed |
| Identity Strength | 0.8-1.0 | Maintains character consistency across frames |
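The recommended ranges in the table can be written down as data and used to flag settings that fall outside them. This is only a sketch: the key names are hypothetical labels chosen for this example, not parameter names read by any ComfyUI node.

```python
# Recommended ranges from the table above; the key names are hypothetical.
RECOMMENDED = {
    "steps": (30, 50),
    "cfg": (6.0, 8.0),
    "frame_count": (49, 97),
    "resolution": (512, 768),  # side length of a square frame
    "pose_strength": (0.7, 1.0),
    "identity_strength": (0.8, 1.0),
}


def out_of_range(settings: dict) -> dict:
    """Return {name: (value, low, high)} for settings outside the recommended range."""
    problems = {}
    for name, value in settings.items():
        if name not in RECOMMENDED:
            continue  # unknown settings are ignored rather than rejected
        low, high = RECOMMENDED[name]
        if not low <= value <= high:
            problems[name] = (value, low, high)
    return problems


if __name__ == "__main__":
    print(out_of_range({"steps": 20, "cfg": 7.0, "frame_count": 121}))
```

Values outside a range are not necessarily wrong; the check simply points at the settings worth a second look when results disappoint.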

Example output from WAN 2.2 Animate with Infinite Talk workflow showing realistic character animation with lip-sync

Advanced Configuration and Optimization Techniques

Memory Optimization for Low-VRAM Systems

Running the WAN 2.2 Animate and Infinite Talk workflow on systems with limited VRAM requires strategic optimizations. The workflow can be adapted to run on GPUs with as little as 8GB VRAM through careful configuration and model selection.

First, switch to the GGUF Q4 quantized model instead of the FP8 version. This reduces the memory footprint by approximately 60-70% with minimal quality loss. Enable attention slicing in the WAN model loader node, which computes attention in smaller slices rather than all at once, trading speed for memory efficiency. Reduce batch sizes to 1 and process longer videos in chunks rather than all at once.

Low VRAM optimization settings in workflow:

    {
      "model_variant": "Wan2_2-Animate-14B_Q4_KS.gguf",
      "attention_mode": "sliced",
      "vae_tiling": true,
      "batch_size": 1,
      "max_frames_per_chunk": 25,
      "enable_cpu_offload": true
    }

Enable VAE tiling in the VAE decoder node, which processes video in smaller spatial tiles rather than entire frames. This dramatically reduces peak memory usage during decoding. Consider enabling CPU offload for less critical components like the text encoder, freeing GPU memory for the main diffusion process.
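Processing a long video in chunks comes down to splitting the frame range into windows of at most `max_frames_per_chunk` frames. The sketch below shows one way to compute those windows, with an optional overlap for blending neighbouring chunks; the function name and the overlap parameter are assumptions made for this example.

```python
def chunk_frames(total_frames: int, chunk_size: int = 25, overlap: int = 0) -> list:
    """Split [0, total_frames) into (start, end) windows of at most chunk_size frames.

    Consecutive windows share `overlap` frames; `end` is exclusive.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    chunks = []
    start = 0
    step = chunk_size - overlap
    while start < total_frames:
        end = min(start + chunk_size, total_frames)
        chunks.append((start, end))
        if end == total_frames:
            break  # the last window already reaches the final frame
        start += step
    return chunks


if __name__ == "__main__":
    print(chunk_frames(60, chunk_size=25))             # three windows, no overlap
    print(chunk_frames(60, chunk_size=25, overlap=5))  # windows share 5 frames
```

Smaller chunks lower peak memory per pass, while a few frames of overlap give the blending step material to hide the seams.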

Quality Enhancement with Relight LoRA

The WanAnimate Relight LoRA significantly improves color consistency and lighting coherence between the source character image and generated animation. This is particularly important when your character image and driver video have different lighting conditions or color temperatures.

Load the Relight LoRA in the LoRA loader node with a strength value between 0.6 and 0.9. Higher values apply stronger color correction but may over-process the results. The LoRA works by learning lighting transfer functions that match the character’s appearance to the environmental lighting present in the driver video, creating more natural-looking integrated results.

Infinite Talk Audio Synchronization Tips

Achieving perfect lip-sync with Infinite Talk requires attention to audio quality and timing. Use clear audio recordings with minimal background noise for best results. The model performs exceptionally well with speech in multiple languages, but pronunciation clarity directly impacts lip movement accuracy.

When using driver videos with existing speech, ensure the video’s audio track is properly extracted and synchronized. The Infinite Talk audio processor node should receive audio at 16kHz sample rate for optimal phoneme detection. If generating lip movements from separate audio files, verify timing alignment between audio start and video generation start to prevent desynchronization.
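A quick way to confirm the sample rate of an audio file before it reaches the workflow is to read its header with Python's standard `wave` module. This sketch only handles uncompressed PCM `.wav` files, and the helper names are made up for this example; other formats would need converting first.

```python
import sys
import wave

EXPECTED_RATE = 16_000  # the 16kHz rate this guide recommends for Infinite Talk


def wav_info(path: str) -> tuple:
    """Return (sample_rate_hz, duration_seconds) for a PCM WAV file."""
    with wave.open(str(path), "rb") as wav:
        rate = wav.getframerate()
        seconds = wav.getnframes() / rate
    return rate, seconds


def needs_resampling(path: str) -> bool:
    """True when the file's sample rate differs from the expected 16kHz."""
    return wav_info(path)[0] != EXPECTED_RATE


if __name__ == "__main__" and len(sys.argv) > 1:
    rate, seconds = wav_info(sys.argv[1])
    verdict = "resample to 16kHz" if rate != EXPECTED_RATE else "ok"
    print(f"{rate} Hz, {seconds:.2f} s: {verdict}")
```

Pass the path of a WAV file as the first command-line argument to get a one-line report.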

Pose Control and Expression Tuning

The pose conditioning system gives you fine-grained control over character movement. Adjust the pose conditioning strength to balance between following the driver video exactly versus allowing the model creative interpretation. Lower values (0.5-0.7) permit more natural variations in pose while maintaining overall motion direction, which can result in more organic-looking animations.

For facial expressions, the expression transfer strength parameter controls how much of the driver’s expressions are transferred to your character. Setting this to 1.0 creates exact expression matches, while values around 0.7-0.8 allow the character’s own facial structure to influence the final expressions, often producing more believable results.

Common Issues and Troubleshooting Solutions

Out of Memory (OOM) Errors

OOM errors are the most frequent issue when running the WAN 2.2 Animate and Infinite Talk workflow in ComfyUI. If you encounter CUDA out of memory errors, first verify you’re using the appropriate model variant for your GPU. Switch to GGUF Q4 models for systems under 16GB VRAM.

Enable all memory optimization features: attention slicing, VAE tiling, and CPU offload. Reduce the number of frames processed simultaneously by decreasing the context length parameter. Close other GPU-intensive applications running in the background. If problems persist, reduce output resolution—generating at 512×512 and upscaling afterward uses significantly less memory than direct 768×768 generation.

Poor Quality or Distorted Outputs

Quality issues often stem from mismatched input resolutions or improper preprocessing. Ensure your character image is properly cropped and centered, showing the face clearly without excessive surrounding context. Driver videos should have stable camera work—excessive motion or blur in the driver video will transfer to your animation.

Check that pose detection is working correctly by visualizing the pose overlay before generation. Misdetected poses lead to distorted body shapes and unnatural movements. Adjust the pose detection confidence threshold if poses are being missed or incorrectly identified. Increase the number of diffusion steps to 40-50 if output appears noisy or under-refined.

Lip-Sync Desynchronization

When lip movements don’t match audio, verify that audio preprocessing is functioning correctly. Check the audio sample rate—Infinite Talk expects 16kHz audio, and other sample rates may cause timing mismatches. Ensure frame rate consistency between your driver video and output settings; mismatched frame rates inevitably cause synchronization drift.

If using separate audio and video inputs, manually verify timing alignment in the workflow. The audio start point and video generation start must be precisely synchronized. Consider pre-processing your audio to remove silence at the beginning, ensuring speech starts immediately when video generation begins.
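The effect of a frame-rate mismatch is easy to quantify: the same number of frames lasts a different amount of time at each rate, and the difference is how far the lips end up from the audio by the final frame. The sketch below works through that arithmetic; the function names are made up for this example.

```python
import math


def sync_drift_seconds(frame_count: int, driver_fps: float, output_fps: float) -> float:
    """Playback time at the output rate minus playback time at the driver rate."""
    return frame_count / output_fps - frame_count / driver_fps


def frames_for_audio(audio_seconds: float, fps: float) -> int:
    """Number of frames needed to cover an audio clip at the given frame rate."""
    return math.ceil(audio_seconds * fps)


if __name__ == "__main__":
    drift = sync_drift_seconds(120, driver_fps=30, output_fps=24)
    print(f"120 frames shot at 30fps but played at 24fps end {drift:.2f} s late")
    print("frames needed for 4 s of audio at 24fps:", frames_for_audio(4.0, 24))
```

A result of zero means the two rates agree; anything else grows in proportion to clip length, which is why long clips make the problem more visible.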

Workflow Loading Failures

If the workflow JSON fails to load, first verify all required custom nodes are properly installed and ComfyUI has been restarted. Check the console for specific error messages indicating missing nodes. Use ComfyUI Manager’s “Fix Missing Nodes” feature to automatically identify and install missing dependencies.

Ensure model files are correctly named and placed in appropriate folders. The workflow references specific file names, and any mismatch prevents model loading. Update all custom nodes to their latest versions, as workflow compatibility often requires recent node updates.
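File names and locations can be checked mechanically rather than by eye. The sketch below looks for the files named in this guide under a ComfyUI root folder and reports any that are absent. It assumes the FP8 setup; swap in the GGUF file name if you use that variant. The function name is an assumption made for this example.

```python
from pathlib import Path

# Relative paths taken from the directory layout in this guide (FP8 setup).
EXPECTED_FILES = [
    "models/diffusion_models/Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors",
    "models/diffusion_models/Wan2_1-InfiniteTalk_Multi_Q8.gguf",
    "models/text_encoders/umt5_xxl_fp8_e4m3fn_scaled.safetensors",
    "models/vae/wan_2.1_vae.safetensors",
    "models/loras/WanAnimate_relight_lora_fp16.safetensors",
]


def missing_models(comfy_root: str, expected=EXPECTED_FILES) -> list:
    """Return the expected relative paths that do not exist under comfy_root."""
    root = Path(comfy_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_models("ComfyUI")
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  ", rel)
    else:
        print("All expected model files are in place.")
```

A file reported as missing is often present but misnamed or sitting in a subfolder, so compare the listing against what is actually on disk.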

Frequently Asked Questions About WAN 2.2 Animate with Infinite Talk

What is WAN 2.2 Animate with Infinite Talk in ComfyUI?

WAN 2.2 Animate with Infinite Talk is a powerful AI video generation workflow in ComfyUI that combines advanced character animation with sophisticated lip-sync capabilities. The system enables realistic facial expression transfer, body movement control, and unlimited-length talking animations from driver videos. WAN 2.2 Animate uses a 14-billion parameter Mixture-of-Experts model to maintain identity consistency while generating smooth, natural-looking character animations. When integrated with Infinite Talk, it adds precise audio-driven lip synchronization, making it ideal for creating virtual presenters, animated storytelling content, and character-driven videos. The workflow processes source character images and driver videos through multiple AI stages including pose detection, facial landmark tracking, and diffusion-based video generation.

How do I install WAN 2.2 Animate models in ComfyUI?

Installing WAN 2.2 Animate models requires downloading several files from Hugging Face and placing them in specific ComfyUI folders. Download the WAN 2.2 Animate 14B model (either FP8 or GGUF variant based on your VRAM) and place it in ComfyUI/models/diffusion_models/. You’ll need the UMT5 XXL text encoder in ComfyUI/models/text_encoders/, the WAN 2.1 VAE in ComfyUI/models/vae/, and the Infinite Talk model in ComfyUI/models/diffusion_models/. Additionally, install required custom nodes: ComfyUI-KJNodes, ComfyUI-AdvancedLivePortrait, ComfyUI-VideoHelperSuite, and ComfyUI-WanAnimate. After placing all models and installing custom nodes, restart ComfyUI completely. Verify installation by checking that WAN-related nodes appear in the node browser. The complete setup requires 45-50GB disk space for FP8 models or 25-30GB for GGUF Q4 versions.

What are the VRAM requirements for WAN 2.2 Animate with Infinite Talk?

VRAM requirements for the WAN 2.2 Animate and Infinite Talk workflow vary based on model variant and configuration. The FP8 scaled model requires approximately 16-24GB VRAM for optimal performance, making it suitable for RTX 4090, RTX 4080, or RTX 3090 GPUs. For mid-range systems with 12-16GB VRAM like the RTX 3080 Ti, you can use the FP8 model with memory optimizations enabled or switch to GGUF Q8. Budget systems with 8-12GB VRAM (RTX 3060, RTX 4060 Ti) should use the GGUF Q4 quantized version with attention slicing and VAE tiling enabled. Memory requirements also depend on output resolution and frame count: generating 512×512 videos uses significantly less VRAM than 768×768. Enabling CPU offload and processing videos in chunks can further reduce VRAM requirements at the cost of generation speed.

How long does it take to generate a video with WAN 2.2 Animate?

Generation time for WAN 2.2 Animate videos depends on multiple factors including GPU performance, model variant, video length, resolution, and number of diffusion steps. On a high-end RTX 4090 using the FP8 model, generating a 49-frame video (approximately 2 seconds at 24fps) at 512×512 resolution with 30 steps typically takes 3-5 minutes. The same configuration on an RTX 3090 might take 5-8 minutes. Using GGUF Q4 models on mid-range GPUs extends generation time to 10-15 minutes for similar outputs. Longer videos scale roughly linearly—a 97-frame sequence takes approximately twice as long as 49 frames. Higher resolutions (768×768) can increase generation time by 50-100%. Enabling memory optimizations like attention slicing and CPU offload reduces VRAM usage but increases generation time by 20-40%. First-time generation includes model loading overhead, while subsequent generations in the same session are faster.
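The scaling rules in this answer can be combined into a back-of-the-envelope estimate. The sketch below uses only the figures quoted above (3-5 minutes for 49 frames on an RTX 4090, roughly linear scaling with frame count, 50-100% extra at 768×768, 20-40% extra with memory optimizations). Treat the output as a rough range rather than a benchmark; the function name and its parameters are assumptions made for this example.

```python
def estimate_minutes(
    frames: int,
    base_range: tuple = (3.0, 5.0),  # minutes for 49 frames at 512x512, 30 steps
    high_res: bool = False,          # 768x768 instead of 512x512
    memory_optimized: bool = False,  # attention slicing / CPU offload enabled
) -> tuple:
    """Return a (low, high) estimate in minutes using the guide's scaling rules."""
    low, high = base_range
    scale = frames / 49  # roughly linear in frame count
    low, high = low * scale, high * scale
    if high_res:
        low, high = low * 1.5, high * 2.0
    if memory_optimized:
        low, high = low * 1.2, high * 1.4
    return low, high


if __name__ == "__main__":
    print("98 frames:", estimate_minutes(98))
    print("49 frames at 768x768:", estimate_minutes(49, high_res=True))
```

For other GPUs, pass a different `base_range`, for example `(5.0, 8.0)` for the RTX 3090 figures mentioned above.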

Can WAN 2.2 Animate work with any character image?

WAN 2.2 Animate works best with clear, front-facing portrait images where the character’s face is clearly visible and well-lit. Ideal source images are 512×512 to 1024×1024 pixels, centered, with the face occupying approximately 60-70% of the frame. The model handles various art styles including realistic photos, digital art, anime, and stylized illustrations. However, performance varies based on image quality—higher resolution source images with clear facial features produce better results. Extreme angles, heavy shadows, or obscured faces may cause identity inconsistencies during animation. The model performs remarkably well with animated characters and stylized art, not just realistic photographs. For best results, ensure good contrast, avoid excessive blur, and use images where facial landmarks are clearly distinguishable. Multiple ethnicities, ages, and genders are all supported effectively by the model’s diverse training data.

What video formats work as driver videos for motion transfer?

WAN 2.2 Animate accepts standard video formats including MP4, AVI, MOV, and WebM as driver videos for motion transfer. The ComfyUI VideoHelperSuite automatically handles format conversion during loading. For optimal results, driver videos should have clear, stable footage with minimal camera shake or motion blur. Resolution doesn’t need to match your output—the workflow automatically scales inputs. Frame rates between 24-30fps work best, though other rates are supported. The driver video should show the person or character clearly throughout, with good lighting and visible facial features. Avoid heavily compressed or low-quality videos as compression artifacts can interfere with pose detection. Videos with excessive fast motion may cause tracking issues. Audio quality in the driver video is important when using Infinite Talk for lip-sync—clear speech with minimal background noise produces the most accurate mouth movements and timing synchronization.

How does the Infinite Talk lip-sync feature work?

Infinite Talk analyzes audio waveforms to extract phoneme information and temporal speech patterns, then generates corresponding facial movements synchronized to the audio. The system processes audio at 16kHz sample rate, identifying individual phonemes (basic speech sounds) and their timing. These phoneme features are converted into facial animation parameters that control mouth shape, lip position, jaw movement, and subtle facial expressions that accompany speech. The technology integrates seamlessly with WAN 2.2 Animate’s motion transfer pipeline, combining audio-driven mouth movements with expression and pose data from driver videos. Unlike simpler lip-sync systems that only animate mouths, Infinite Talk generates natural head movements, eyebrow raises, and micro-expressions that complement speech patterns. The system handles multiple languages effectively and adapts to different speaking styles. It can process audio from separate audio files or extract speech from driver videos, maintaining perfect synchronization throughout unlimited-length animations without drift or desync issues common in traditional lip-sync approaches.

What’s the difference between FP8 and GGUF Q4 models?

FP8 (8-bit floating-point) and GGUF Q4 (4-bit quantized) represent different model compression strategies with distinct trade-offs. FP8 models maintain higher precision by using 8-bit floating-point numbers for weights and activations, preserving more detail from the original model. These deliver the highest quality outputs with better detail retention, smoother motion, and more accurate identity preservation, but require 16-24GB VRAM. GGUF Q4 models use 4-bit quantization, aggressively compressing the model to approximately 30% of the FP8 size. This enables running on 8-12GB VRAM GPUs with minimal quality loss; most users report differences are negligible for typical use cases. GGUF models load faster and process slightly quicker on memory-constrained systems. For professional work requiring maximum quality, use FP8. For general use, content creation, or learning, GGUF Q4 provides excellent value. Both versions support the full WAN 2.2 Animate and Infinite Talk feature set without functional limitations.

Can I use WAN 2.2 Animate for commercial projects?

The commercial usage rights for WAN 2.2 Animate depend on the specific model licenses from the creators. Always review the license terms on the Hugging Face model pages before using generated content commercially. Many AI models have specific terms regarding commercial use, attribution requirements, and content restrictions. Additionally, consider that while the AI model may be licensed for commercial use, you must have rights to the source images and driver videos you input—using copyrighted images or videos without permission creates legal issues regardless of the AI tool’s license. For professional projects, consider using custom-created character images and driver footage you have full rights to. Some creators offer commercially-licensed versions of models or provide explicit commercial use permissions. When in doubt, consult with legal professionals familiar with AI-generated content and intellectual property law. The ComfyUI software itself is open-source, but model licenses vary independently.

How do I improve the quality of my WAN 2.2 Animate outputs?

Improving WAN 2.2 Animate output quality involves optimizing multiple workflow aspects. Start with high-quality source materials—use clear, well-lit character images at 1024×1024 resolution and stable, high-quality driver videos. Increase diffusion steps to 40-50 for more refined results, though this increases generation time. Adjust CFG scale between 6.0-8.0 to balance detail and prompt adherence. Enable the Relight LoRA at 0.7-0.9 strength for better color consistency and lighting integration. Ensure pose detection is accurate by visualizing pose overlays before generation—adjust detection thresholds if needed. Use appropriate identity and expression strength settings (0.8-1.0 for identity, 0.7-0.9 for expressions) to maintain character consistency while allowing natural variations. For lip-sync quality, provide clean audio with clear speech and minimal background noise. Generate at higher resolutions if your VRAM allows, or generate at 512×512 and upscale using AI video upscalers as a separate step. Process longer videos in overlapping chunks and blend transitions for seamless extended animations.

Real-World Applications and Use Cases

Content Creation and Digital Marketing

The wan 2.2 animate with infinite talk full setup in comfyui revolutionizes content creation workflows for digital marketers, YouTubers, and social media influencers. Instead of recording new video content for every message, creators can generate animated character videos that deliver their scripts with consistent quality and branding. This technology enables rapid production of explainer videos, product demonstrations, and educational content without requiring video filming equipment or professional studios.

Marketing teams can use WAN 2.2 Animate to create localized content in multiple languages by pairing the same character animation with a different audio track for each target market. Because Infinite Talk drives lip movement from the audio itself, synchronization follows whichever language track is supplied, making international marketing campaigns more feasible and cost-effective. Character-based brand ambassadors can be created and deployed consistently across campaigns without scheduling conflicts or availability limitations.

Education and E-Learning Platforms

Educational institutions and e-learning platforms utilize WAN 2.2 Animate to create engaging virtual instructors. These animated characters deliver course content with natural expressions and gestures, maintaining student engagement more effectively than static presentations. The technology allows educators to update course materials by simply changing scripts and audio, regenerating videos with the same familiar instructor character without requiring re-recording sessions.

Language learning applications particularly benefit from this technology, creating native speaker models that demonstrate pronunciation with accurate lip movements and facial expressions. Students can observe proper mouth shapes and articulation patterns, enhancing phonetic learning outcomes. The ability to generate unlimited practice materials with consistent quality makes educational content scalable without proportionally increasing production costs.

Entertainment and Creative Industries

Independent animators and small studios use WAN 2.2 Animate to accelerate animation production pipelines. The technology serves as a rapid prototyping tool for character animation concepts, allowing directors to visualize scenes and performances before committing to full production. Voice actors can record performances that are immediately transformed into animated characters, enabling quick iteration cycles and creative experimentation.

Content creators develop virtual influencers and recurring characters for streaming platforms, web series, and social media channels. The consistency of AI-generated animation ensures characters remain recognizable across episodes while adapting to different scenarios and scripts. This democratizes animation production, enabling creators with limited budgets to produce professional-quality character-driven content that previously required large animation teams.

Performance Benchmarks and Optimization Results

Comparative Performance Across GPU Configurations

Understanding real-world performance metrics helps users set realistic expectations for the wan 2.2 animate with infinite talk full setup in comfyui. Extensive testing across various GPU configurations reveals significant performance variations based on hardware capabilities and model choices.

| GPU Model | VRAM | Model Type | 49 Frames (512×512) | 97 Frames (768×768) |
|---|---|---|---|---|
| RTX 4090 | 24GB | FP8 Scaled | 3-4 minutes | 10-12 minutes |
| RTX 4080 | 16GB | FP8 Scaled | 4-5 minutes | 13-15 minutes |
| RTX 3090 | 24GB | FP8 Scaled | 5-7 minutes | 15-18 minutes |
| RTX 3080 Ti | 12GB | GGUF Q8 | 7-9 minutes | 20-25 minutes |
| RTX 4060 Ti | 16GB | GGUF Q4 | 10-12 minutes | N/A (VRAM limit) |
| RTX 3060 | 12GB | GGUF Q4 | 12-15 minutes | N/A (VRAM limit) |

These benchmarks assume optimal settings with 30 diffusion steps. Times increase proportionally with higher step counts. Memory optimization features like attention slicing add approximately 20-30% to generation times but enable operation on lower-VRAM hardware that would otherwise be unable to run the workflow.
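As a rough planning aid, the scaling described above can be written as a small calculation. This is a sketch under the stated assumptions only: time is treated as strictly proportional to step count from the 30-step baseline, and attention slicing adds 20-30%. The function name is hypothetical, and fixed costs such as model loading are ignored, so treat the result as an estimate rather than a measurement.

```python
def scale_time_range(base_range, steps, base_steps=30, slicing_overhead=None):
    """Scale a (low, high) benchmark time in minutes to a different step count.

    slicing_overhead is an optional (low, high) fractional overhead, for
    example (0.20, 0.30) for the 20-30% cost of attention slicing.
    """
    low, high = base_range
    factor = steps / base_steps  # proportional scaling with step count
    low, high = low * factor, high * factor
    if slicing_overhead is not None:
        low *= 1 + slicing_overhead[0]
        high *= 1 + slicing_overhead[1]
    return low, high


# RTX 4090 row, 49 frames at 512×512: 3-4 minutes at 30 steps, scaled to 45 steps.
print(scale_time_range((3, 4), steps=45))  # (4.5, 6.0)

# The same job with attention slicing enabled.
low, high = scale_time_range((3, 4), steps=45, slicing_overhead=(0.20, 0.30))
print(f"{low:.1f}-{high:.1f} minutes")  # 5.4-7.8 minutes
```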

Quality Comparison: FP8 vs GGUF Quantization

Visual quality differences between FP8 and GGUF Q4 models are surprisingly minimal in most practical scenarios. Side-by-side comparisons reveal that GGUF Q4 maintains excellent detail retention, smooth motion, and accurate identity preservation. Differences become noticeable primarily in fine texture details, subtle color gradations, and complex motion scenarios with rapid movements.

For typical use cases—character talking animations, portrait videos, and moderate motion sequences—GGUF Q4 delivers results indistinguishable to most viewers. The FP8 models excel in scenarios requiring maximum fidelity: high-resolution outputs intended for large displays, professional productions with extreme quality standards, and complex scenes with intricate textures or lighting effects. The 70% VRAM reduction of GGUF Q4 makes it the pragmatic choice for most users, reserving FP8 for specialized high-end applications.

Future Developments and Community Resources

Upcoming Features and Model Improvements

The WAN 2.2 Animate ecosystem continues evolving rapidly with community contributions and official model updates. Upcoming developments include enhanced multi-character scene support, improved camera motion control through advanced ControlNet integration, and extended context length enabling generation of longer continuous sequences without chunking.

The development community actively works on optimization techniques reducing VRAM requirements further while maintaining quality. Expect improved GGUF quantization methods achieving even better size-to-quality ratios. Integration with newer ComfyUI features like dynamic batching and improved VRAM management will enhance the overall wan 2.2 animate with infinite talk full setup in comfyui experience with faster generation times and greater stability.

Community Resources and Learning Materials

The growing WAN 2.2 Animate community provides valuable resources for users at all skill levels. GitHub repositories contain example workflows, optimization scripts, and troubleshooting guides. Community forums like the ComfyUI Discord server offer real-time support where experienced users help newcomers resolve installation issues and optimize workflows for specific use cases.

Video tutorials on platforms like YouTube demonstrate advanced techniques including multi-character animations, environmental integration, and creative applications of the technology. For developers interested in extending ComfyUI capabilities or integrating WAN 2.2 Animate into broader applications, MERN Stack Dev provides comprehensive resources on building web applications that interface with ComfyUI backends, enabling browser-based video generation services.
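For readers curious what "interfacing with a ComfyUI backend" looks like in practice, here is a minimal sketch that queues a workflow through ComfyUI's HTTP `/prompt` endpoint using only the Python standard library. The server address is an assumption (a local instance on the default port), the helper names are hypothetical, and the workflow dictionary must be one you exported from ComfyUI in API format. No WAN 2.2 node names are assumed here.

```python
import json
import urllib.request
import uuid

# Assumption: ComfyUI is running locally on its default port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_prompt_request(workflow, base_url=COMFYUI_URL):
    """Build the POST request that queues an API-format workflow."""
    payload = {"prompt": workflow, "client_id": str(uuid.uuid4())}
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow, base_url=COMFYUI_URL):
    """Send the workflow to the server and return its parsed JSON reply."""
    request = build_prompt_request(workflow, base_url)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

A web backend would load the exported workflow JSON, replace input values such as the character image, driver video, and audio file names, and then call `queue_workflow(workflow)`. Building the request separately from sending it makes the payload easy to inspect or test without a running server.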

Conclusion: Mastering WAN 2.2 Animate with Infinite Talk in ComfyUI

The wan 2.2 animate with infinite talk full setup in comfyui represents a transformative advancement in accessible AI-powered character animation technology. By combining WAN 2.2’s sophisticated motion transfer capabilities with Infinite Talk’s precise lip synchronization, creators now possess professional-grade animation tools that operate entirely within the open-source ComfyUI environment. This comprehensive guide has walked you through every aspect of the setup process, from system requirements and model downloads to workflow configuration and optimization techniques.

Whether you’re a content creator seeking to streamline video production, an educator developing engaging learning materials, or a developer building innovative applications, mastering this workflow opens remarkable creative possibilities. The technology’s accessibility (it runs on consumer-grade GPUs with appropriate optimizations) democratizes animation production that previously required expensive software licenses and specialized skills. This article aims to provide practical implementation guidance that goes beyond basic documentation.

As you begin working with WAN 2.2 Animate and Infinite Talk, remember that experimentation leads to mastery. Test different parameter combinations, explore various source images and driver videos, and observe how adjustments affect output quality. The workflow’s flexibility accommodates diverse creative visions while maintaining consistent technical quality. Regular updates from the development community continuously expand capabilities and improve performance, ensuring the technology remains cutting-edge.

Success with this workflow extends beyond technical configuration—it requires understanding the artistic principles that make animations compelling. Pay attention to source material quality, driver video selection, and the subtle nuances of expression and motion that create believability. The AI handles technical complexity, but your creative direction determines whether outputs feel mechanical or genuinely engaging.

Ready to Explore More AI and Development Resources?

Discover comprehensive tutorials, cutting-edge AI workflows, and full-stack development guides that help you build amazing projects. From ComfyUI advanced techniques to MERN stack applications, find everything you need to level up your skills.

Explore More on MERN Stack Dev

The intersection of AI animation technology and web development creates exciting opportunities for innovative applications. As you become proficient with wan 2.2 animate with infinite talk full setup in comfyui, consider how these capabilities might integrate into larger projects: web-based video generation services, automated content creation platforms, or interactive storytelling experiences. The foundational knowledge from this guide positions you to explore these advanced applications with confidence.

If you’re searching on ChatGPT or Gemini for comprehensive information about wan 2.2 animate with infinite talk full setup in comfyui, bookmark this guide as your definitive reference. The detailed instructions, troubleshooting solutions, and optimization techniques provided here address the real challenges users encounter during setup and operation. As the technology evolves, these core principles remain applicable, enabling you to adapt to new versions and features as they emerge.

Join the vibrant community of creators, developers, and AI enthusiasts pushing the boundaries of what’s possible with character animation technology. Share your creations, contribute to community resources, and help others navigate their own learning journeys. The democratization of professional animation tools like WAN 2.2 Animate represents not just technological progress, but a fundamental shift in who can participate in creative content production. Your experiments today contribute to defining how these tools will be used tomorrow.