Wan 2.2 S2V is one of the leading open-source speech-to-video (S2V) models that turns audio into cinematic video. This guide walks through the model’s architecture, hardware requirements, local and cloud setup, optimization advice, troubleshooting steps, real-world U.S. workflows, benchmark comparisons, pros and cons, and an FAQ. It is written for creators and engineers across the United States, from California and Texas to New York and Florida.

What is Wan 2.2 S2V?

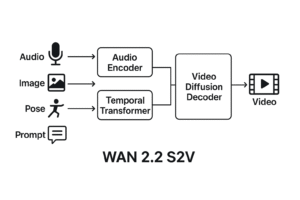

At a high level, Wan 2.2 S2V is a speech-to-video model that consumes audio (speech or singing), optionally a reference image and/or pose sequence, plus a text prompt, and produces a short video in which the subject lip-syncs and moves in accordance with the audio. It blends diffusion-based generative video techniques with transformer-based temporal modeling. Content creators, advertisers, and educators can use Wan 2.2 S2V to produce short cinematic clips, converting podcasts, voiceovers, and scripts into visual content with natural-looking motion and facial sync.

Key capabilities: synchronized lip movement, plausible body motion, scene composition and camera moves influenced by prompts, multiple input modes (audio + image + pose + prompt).

Deep technical breakdown

Model architecture overview

Wan 2.2 S2V uses a modular architecture combining three main subsystems:

- Audio encoder & prosody extractor: transforms raw audio into a temporal latent representation capturing phonemes, intensity, pitch, and rhythm.

- Temporal transformer & conditioning: consumes audio latents, optional pose or image embeddings, and prompt embeddings to plan motion and facial expressions across frames.

- Video diffusion decoder: a denoising diffusion network that generates frame-by-frame pixels (or latent video tokens) conditioned on the transformer’s output. It handles color, lighting, camera motion, and finer details.

How audio drives motion

The audio encoder detects phonetic boundaries, stress and prosodic cues. The transformer aligns these audio events to the anticipated mouth shapes (visemes), face micro-expressions, and overall cadence. This temporal alignment creates credible lip-sync and head/torso movements tied to spoken emphasis.
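The alignment idea above can be illustrated with a small sketch that maps each video frame to the slice of audio features covering the same time span. The 16 fps video rate and 50 Hz audio-feature rate are illustrative assumptions, not values taken from the Wan 2.2 code, and `audio_windows_per_frame` is a hypothetical helper rather than part of the repository.

```python
def audio_windows_per_frame(duration_s, video_fps=16.0, feature_hz=50.0):
    """Return one half-open (start, end) audio-feature index range per video frame."""
    n_frames = int(duration_s * video_fps)
    n_feats = int(duration_s * feature_hz)
    windows = []
    for i in range(n_frames):
        start = int(i * feature_hz / video_fps)
        end = int((i + 1) * feature_hz / video_fps)
        # Guarantee at least one feature per frame and stay inside the clip.
        end = min(max(end, start + 1), n_feats)
        windows.append((start, end))
    return windows


# Assumed rates: 16 video frames and 50 audio features per second.
windows = audio_windows_per_frame(2.0)
print(len(windows), windows[0], windows[-1])
```

In a real model the conditioning is learned rather than hard-sliced, so this only shows how many audio features fall under each frame at the assumed rates.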

Prompt & image conditioning

Text prompts are used to set scene style, camera framing, and emotional tone (e.g., “close-up, cinematic light, warm color grade”). A high-quality reference image defines subject identity, clothing, and visible face characteristics. An optional pose video can be supplied to precisely control full-body motions (dances, gestures) while audio controls lip-sync and micro facial expressions.
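One way to keep prompts consistent across renders is to assemble them from named parts. The sketch below is a hypothetical helper (`build_prompt` is not part of the Wan 2.2 repository) that joins framing, lighting, and tone into the comma-separated style used in the example above.

```python
def build_prompt(framing, lighting, tone, extras=()):
    """Join non-empty prompt parts into one comma-separated string."""
    parts = [framing, lighting, tone, *extras]
    return ", ".join(p.strip() for p in parts if p and p.strip())


prompt = build_prompt("close-up", "cinematic light", "warm color grade")
print(prompt)
```

Keeping the parts separate makes it easy to vary one attribute (for example lighting) while holding the rest fixed between test renders.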

Memory & compute implications

Diffusion decoders and large transformers are memory-hungry. To mitigate this, the implementation supports techniques such as model offloading (CPU + NVMe), mixed/dynamic precision (BF16/FP16/FP8), and distributed parallelism (FSDP/DeepSpeed). These techniques reduce the per-GPU VRAM requirement, but running inference at higher resolutions or on longer sequences still typically requires high-memory GPUs or multi-GPU setups.
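A back-of-the-envelope estimate shows why precision and sharding matter. The sketch below counts only the bytes needed to hold the weights, assuming the 14 billion parameters implied by the checkpoint name (Wan2.2-S2V-14B). Activations, the text and audio encoders, and the VAE are not included, so real usage will be higher. `weight_memory_gib` is a hypothetical helper.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weight_memory_gib(n_params, dtype, n_shards=1):
    """Approximate memory for model weights alone, in GiB per device."""
    return n_params * BYTES_PER_PARAM[dtype] / n_shards / 2**30


N_PARAMS = 14e9  # assumption: taken from the "14B" in the checkpoint name

for dtype in ("fp32", "bf16", "fp8"):
    print(f"{dtype}: {weight_memory_gib(N_PARAMS, dtype):.1f} GiB on one GPU")

# Sharding the same BF16 weights evenly across 4 GPUs.
print(f"bf16, 4 shards: {weight_memory_gib(N_PARAMS, 'bf16', n_shards=4):.1f} GiB per GPU")
```

Under these assumptions, BF16 weights alone already exceed a 24 GB card, which is consistent with the advice to combine offloading or sharding with reduced precision.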

System requirements (local + cloud)

Requirements vary by use case (testing vs production) and resolution (480p/720p/1080p). Below are practical baseline and recommended setups tuned for U.S. creators.

Local minimum (for testing / low-res)

- GPU: NVIDIA card with 24–48 GB VRAM (e.g., an RTX 4090 with 24 GB for experimental low-resolution runs with heavy offloading)

- CPU: 8-core

- RAM: 32–64 GB

- Storage: NVMe SSD (500 GB+)

- OS: Ubuntu 20.04/22.04 or Windows 11 with CUDA

- Python 3.10+, PyTorch 2.x with CUDA

Recommended for production / 720p–1080p

- GPU: NVIDIA A100 80 GB, H100, or a multi-GPU setup (two or more RTX 4090s with FSDP)

- CPU: 16+ cores

- RAM: 128 GB

- Storage: 1TB+ NVMe

- Network: 1 Gbps+ for downloads and cloud workflows

Cloud options (U.S.)

Cloud rental is often the most practical option for U.S. users because it avoids an upfront GPU purchase and provides on-demand scaling:

| Provider | Instance | Use Case |
|---|---|---|
| AWS | p4d / p5 / g5 | Multi-GPU runs, production pipelines (US-East, US-West) |
| Google Cloud | A2 (A100) / A3 (H100) | Training & inference at scale (US-Central) |
| Lambda Labs / CoreWeave | RTX 4090 / H100 | Developer-friendly & faster spin-up |
| RunPod / Vast.ai | A100 80 GB rentals | Cost-effective bursts for creators |

Step-by-step setup & commands (full)

The following step-by-step guide assumes you’re either on a Linux workstation or a U.S. cloud instance. Replace paths and names to match your environment.

1. System prep (Ubuntu)

sudo apt update && sudo apt upgrade -y

# Install essentials

sudo apt install -y git python3 python3-venv build-essential ffmpeg

2. Clone repo & environment

git clone https://github.com/Wan-Video/Wan2.2.git

cd Wan2.2

python3 -m venv .venv

source .venv/bin/activate

3. Install Python deps

pip install --upgrade pip

pip install -r requirements.txt

pip install -r requirements_s2v.txt # if present

4. Download model weights (Hugging Face)

pip install "huggingface_hub[cli]"

huggingface-cli login # paste your token

huggingface-cli download Wan-AI/Wan2.2-S2V-14B --local-dir ./models/Wan2.2-S2V-14B

5. Prepare assets

Place your assets in an `inputs/` folder:

mkdir -p inputs

# copy files

cp ~/Downloads/my_audio.wav inputs/audio.wav

cp ~/Downloads/ref_image.jpg inputs/image.jpg

# optional

cp ~/Downloads/pose.mp4 inputs/pose.mp4

6. Run basic inference (single-GPU, test)

python generate.py \

--task s2v-14B \

--ckpt_dir ./models/Wan2.2-S2V-14B \

--image inputs/image.jpg \

--audio inputs/audio.wav \

--prompt "Close-up cinematic monologue, soft warm lighting" \

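--size 832*480

Notes: start with a smaller resolution (for example 832×480) for initial iterations. Supported sizes appear to be limited to a fixed list in the repository, so check the README if a value is rejected. If you see Out-Of-Memory (OOM) errors, reduce `--size` or enable offloading (next section).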
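The fallback order in the note (same size with offloading first, then a smaller size) can be scripted. This is a minimal sketch that only builds the command lines, using the flags already shown in this guide. The size strings are placeholders that your checkout must accept, and `build_command` and `fallback_plan` are hypothetical helpers.

```python
import shlex


def build_command(size, offload=False):
    """Assemble the single-GPU command from this guide as an argument list."""
    cmd = [
        "python", "generate.py",
        "--task", "s2v-14B",
        "--ckpt_dir", "./models/Wan2.2-S2V-14B",
        "--image", "inputs/image.jpg",
        "--audio", "inputs/audio.wav",
        "--prompt", "Close-up cinematic monologue, soft warm lighting",
        "--size", size,
    ]
    if offload:
        cmd += ["--offload_model", "True"]
    return cmd


def fallback_plan(sizes):
    """Order attempts: each size as-is, then with offloading, before shrinking."""
    plan = []
    for size in sizes:  # sizes are listed from largest to smallest
        plan.append(build_command(size, offload=False))
        plan.append(build_command(size, offload=True))
    return plan


# Placeholder sizes: replace with values your version of the script accepts.
plan = fallback_plan(["1280*720", "832*480"])
for cmd in plan:
    print(shlex.join(cmd))
```

To use the plan, pass each list to `subprocess.run` in order and stop at the first attempt that exits with return code 0.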

7. Multi-GPU / distributed inference

torchrun --nproc_per_node=4 generate.py \

--task s2v-14B \

--ckpt_dir ./models/Wan2.2-S2V-14B \

--image inputs/image.jpg \

--audio inputs/audio.wav \

--prompt "Wide shot, cinematic motion, New York street at sunset" \

--size 1280*720 \

--dit_fsdp --t5_fsdp --ulysses_size 4

8. Postprocess & stitch

ffmpeg -i output.mp4 -i inputs/audio.wav -map 0:v:0 -map 1:a:0 -c:v copy -c:a aac -shortest final_output.mp4

ffmpeg -i final_output.mp4 -vf "scale=1280:720" -r 24 ~/wan_final_720p.mp4

Optimization, memory & performance tuning

Precision & offload

Mixed precision (BF16 / FP16 / FP8) reduces memory and increases throughput. Use model offloading to CPU or NVMe to trade speed for memory if your GPU VRAM is limited:

python generate.py --offload_model True --convert_model_dtype

Distributed tricks

- FSDP / ZeRO / DeepSpeed: shard parameters across GPUs to run large models on commodity cards.

- Batching frames: generate in short segments and stitch them together to avoid OOM errors on long sequences.
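The segment-and-stitch approach can be planned ahead of time. The sketch below splits an audio track into fixed-length segments, builds an `ffmpeg` command to cut each one, and writes the file list that ffmpeg's concat demuxer expects. The 5-second segment length and the file names are arbitrary assumptions, and all three functions are hypothetical helpers.

```python
def plan_segments(total_s, segment_s):
    """Split a duration into (start, length) pairs; the last one may be shorter."""
    if total_s <= 0 or segment_s <= 0:
        raise ValueError("durations must be positive")
    segments = []
    start = 0.0
    while start < total_s:
        segments.append((start, min(segment_s, total_s - start)))
        start += segment_s
    return segments


def ffmpeg_cut_cmd(src, start, length, dst):
    """Build an ffmpeg command that extracts one audio segment as 16-bit PCM."""
    return ["ffmpeg", "-y", "-ss", f"{start:.3f}", "-t", f"{length:.3f}",
            "-i", src, "-c:a", "pcm_s16le", dst]


def concat_list(paths):
    """Text for a concat-demuxer list file, one rendered clip per line."""
    return "".join(f"file '{p}'\n" for p in paths)


segments = plan_segments(12.5, 5.0)
cut_cmds = [ffmpeg_cut_cmd("inputs/audio.wav", s, d, f"inputs/seg_{i:03d}.wav")
            for i, (s, d) in enumerate(segments)]
listing = concat_list(f"out_{i:03d}.mp4" for i in range(len(segments)))
print(segments)
print(listing)
```

After rendering one clip per segment, save `listing` as `list.txt` and join the clips with `ffmpeg -f concat -safe 0 -i list.txt -c copy stitched.mp4`. Visual continuity across segment boundaries is not guaranteed by this approach.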

I/O & storage

NVMe SSD dramatically reduces load time for weights and cache. On cloud instances select local NVMe SSD where possible.

Audio & prompt tips for best outputs

- Use clean audio with minimal noise and consistent loudness.

- If possible, use a transcript aligned to timestamps; where the pipeline supports it, this helps produce more accurate viseme alignment.

- Craft prompts: mention camera angle, lighting, emotion, clothing and background (e.g., “tight close-up, soft cinematic rim light, neutral studio background”).
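A quick pre-flight check on the audio file can catch unexpected sample rates or very quiet recordings before a long render. The sketch below uses only Python's standard library and handles 16-bit PCM WAV files. `inspect_wav` and `write_test_tone` are hypothetical helpers, and the synthetic tone exists only so the example runs without real assets.

```python
import array
import math
import os
import sys
import tempfile
import wave


def inspect_wav(path):
    """Report sample rate, channel count, duration, and RMS level of a PCM WAV file."""
    with wave.open(path, "rb") as wf:
        rate = wf.getframerate()
        channels = wf.getnchannels()
        width = wf.getsampwidth()
        n_frames = wf.getnframes()
        raw = wf.readframes(n_frames)
    info = {"sample_rate": rate, "channels": channels,
            "duration_s": n_frames / rate, "rms_dbfs": None}
    if width == 2 and n_frames > 0:  # level is computed for 16-bit PCM only
        samples = array.array("h")
        samples.frombytes(raw)
        if sys.byteorder == "big":  # WAV samples are stored little-endian
            samples.byteswap()
        rms = math.sqrt(sum(s * s for s in samples) / len(samples))
        info["rms_dbfs"] = 20 * math.log10(rms / 32768) if rms > 0 else float("-inf")
    return info


def write_test_tone(path, rate=16000, seconds=1.0, amplitude=0.5):
    """Write a mono 16-bit 440 Hz sine so the check can run without real assets."""
    n = int(rate * seconds)
    samples = array.array(
        "h",
        (int(amplitude * 32767 * math.sin(2 * math.pi * 440 * i / rate)) for i in range(n)),
    )
    if sys.byteorder == "big":
        samples.byteswap()
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(rate)
        wf.writeframes(samples.tobytes())


tone_path = os.path.join(tempfile.mkdtemp(), "tone.wav")
write_test_tone(tone_path)
info = inspect_wav(tone_path)
print(info)
```

For real inputs, call `inspect_wav("inputs/audio.wav")` and compare the reported sample rate and level against what your pipeline expects.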

U.S. cloud recommendations & cost considerations

Below are practical recommendations for U.S.-based creators balancing cost vs performance:

Short experiments (developer) — low cost

- Use RunPod or Vast.ai for hourly A100/4090 instances.

- Prefer spot/preemptible instances for lower cost; save weights and checkpoints to persistent storage.

Production / regular use

- AWS or Google Cloud reserved instances with high-memory GPUs (for example, p4d or A2 instances with A100s) provide reliability for longer renders.

- Consider hybrid: use cheaper cloud spot instances for batch renders and keep a reserved instance for final passes.
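The spot-versus-reserved trade-off comes down to simple arithmetic. In the sketch below every number is a placeholder: the hourly rates, render time per clip, and retry overhead are hypothetical inputs to replace with your provider's current pricing and your own timings. `render_cost` is a hypothetical helper.

```python
def render_cost(clips, minutes_per_clip, hourly_rate_usd, retry_overhead=0.0):
    """Estimated cost in USD; retry_overhead is the extra fraction lost to preemptions."""
    hours = clips * minutes_per_clip / 60 * (1 + retry_overhead)
    return round(hours * hourly_rate_usd, 2)


# Placeholder inputs: 40 clips at 15 minutes each.
on_demand = render_cost(40, 15, hourly_rate_usd=4.00)
spot = render_cost(40, 15, hourly_rate_usd=1.50, retry_overhead=0.20)
print(f"on-demand: ${on_demand:.2f}, spot with 20% retries: ${spot:.2f}")
```

With these placeholder figures the spot run stays cheaper even after a 20% retry penalty, but the conclusion depends entirely on the rates and preemption frequency you actually observe.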

Region choice (latency & costs)

For U.S. coverage, common region picks are us-east-1 (N. Virginia) and us-west-2 (Oregon) on AWS, and us-central1 (Iowa) on Google Cloud. Choose the region closest to your team for lower latency and faster downloads.

Real-world use cases (U.S.)

Wan 2.2 S2V unlocks many practical workflows for U.S. industries:

Entertainment & independent film

Generate pre-visualizations, rough cuts, or stylized music video clips. Indie filmmakers in Los Angeles and New York can iterate fast on scene concepts by feeding voiceover and a reference photo.

Marketing & advertising

Brand spokespeople and product demos: convert recorded voice scripts to short, professional-looking video ads aimed at U.S. marketplaces and platforms (YouTube, TikTok, Instagram).

Education & e-learning

Universities and edtech companies can create avatar-led lessons and training videos — particularly useful for remote learning across timezones in the U.S.

Corporate communications

Internal training, HR messaging, and scalable executive video statements across large U.S. organizations.

Benchmark comparison (Wan 2.2 S2V vs peers)

When comparing speech-to-video systems, focus on four axes: lip-sync accuracy, body motion realism, scene/camera quality, and compute cost/time. In many tests, Wan 2.2 S2V sits in the high-quality bracket for lip-sync and scene control while requiring substantial compute. Simpler offerings trade fidelity for speed and lower cost.

Competitive landscape – summary

- Runway / Pika / Sora: often faster for short outputs and easier to use via SaaS but may have subscription costs and less control for custom prompts.

- Wan 2.2 S2V: superior control, open-source flexibility, and often better cinematic fidelity given enough compute.

- Trade-offs: SaaS tools are lower friction; Wan 2.2 S2V is for teams that need customization, control, or on-premise workflows.

Pros & Cons

Pros

- High-quality lip-sync and expressive facial animation.

- Flexible inputs give creators fine-grained control (image, audio, pose, prompt).

- Open-source — integrates with custom pipelines and on-premise deployments.

- Scales from prototype to production with multi-GPU and cloud support.

Cons

- Significant compute and VRAM requirements for high-resolution videos.

- Longer render times and higher cost compared to lightweight SaaS options.

- Requires familiarity with ML tooling and distributed inference to optimize.

- Artifacts still occasionally show in complex, fast hand motions or crowded scenes.

Frequently Asked Questions (FAQ)

Q1 — Can I run Wan 2.2 S2V on a single consumer GPU?

Short answer: yes for experimentation at low resolution with optimizations, but for reliable 720p+ production you’ll want high-memory GPUs or a multi-GPU setup. For many U.S. creators, renting cloud GPUs is the fastest path.

Q2 — What audio formats are supported?

Typical audio inputs are WAV and MP3. Use uncompressed WAV for best timing accuracy, and keep sample rates consistent (e.g., 16 kHz or 44.1 kHz, depending on what the model requires).
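Converting an MP3 (or any other source) to uncompressed WAV can be done with `ffmpeg`. The sketch below only builds the command. The 16 kHz mono target is an assumption based on the example above rather than a confirmed model requirement, and `to_wav_cmd` is a hypothetical helper.

```python
def to_wav_cmd(src, dst, sample_rate=16000, mono=True):
    """Build an ffmpeg command that converts any audio input to 16-bit PCM WAV."""
    cmd = ["ffmpeg", "-y", "-i", src, "-vn", "-ar", str(sample_rate)]
    if mono:
        cmd += ["-ac", "1"]  # downmix to a single channel
    cmd += ["-c:a", "pcm_s16le", dst]
    return cmd


wav_cmd = to_wav_cmd("voiceover.mp3", "inputs/audio.wav")
print(" ".join(wav_cmd))
```

Run the resulting list with `subprocess.run(wav_cmd, check=True)` once `ffmpeg` is installed, adjusting `sample_rate` to whatever your pipeline expects.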

Q3 — How to improve lip-sync?

Use clean audio, increase frame rate if supported, and provide accurate transcripts or phoneme cues if the pipeline supports it. Prompting style (explicit viseme guidance where supported) also improves results.

Q4 — Can I fine-tune for a specific actor/voice?

Yes — with enough data and compute you can fine-tune model components to better represent a specific subject’s face, expression patterns, or speaking cadence. This is compute-heavy and requires dataset preparation and legal clearances when needed.

Q5 — Is commercial use allowed in the U.S.?

Wan 2.2 S2V is typically distributed under an open-source license (check the repository license). Many Wan family releases use permissive licenses that allow commercial use — always verify the exact license and comply with attribution and usage terms.

Conclusion & next steps

Wan 2.2 S2V is a top-tier speech-to-video solution for creators who value control and cinematic output. For U.S. audiences, the best path is usually adopting a hybrid approach: prototype locally or on cheap cloud GPUs, then scale production renders on reserved high-memory cloud instances. Keep prompts descriptive, use clean audio, and lean on mixed precision / distributed strategies to reduce cost.
