Application performance matters, and caching is one of the most effective tools a developer has for improving it. This guide covers caching in software development: the strategies, techniques, and best practices that can make your application faster and more efficient.

Introduction

Caching is a critical component in modern software architecture, playing a vital role in optimizing performance across various types of applications. By storing frequently accessed data in a fast-access storage layer, caching reduces the need for repeated expensive computations or database queries, resulting in faster response times and improved user experience.

In this article, we’ll explore different types of caching, key strategies, advanced techniques, and best practices that can help you leverage the full potential of caching in your software projects. Whether you’re working on web applications, mobile apps, or large-scale distributed systems, understanding and implementing effective caching strategies can make a significant difference in your application’s performance.

1. Understanding the Basics of Caching

What is Caching?

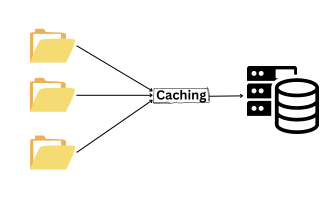

Caching is the process of storing copies of frequently accessed data in a high-speed storage layer, allowing subsequent requests for that data to be served faster. In essence, caching creates a temporary data store that acts as a buffer between your application and the slower, more permanent data storage.

How Caching Works in Software Systems

When a system receives a request for data, it first checks if that data is available in the cache. If it is (a cache hit), the system can quickly return the cached data without having to perform more time-consuming operations. If the data isn’t in the cache (a cache miss), the system retrieves it from the original source, stores a copy in the cache for future use, and then returns it to the requester.
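
The hit and miss flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production cache: `SimpleCache` and `slow_lookup` are hypothetical names, and a plain dictionary stands in for the fast-access storage layer.

```python
from typing import Callable, Dict


class SimpleCache:
    """Hypothetical in-process cache that counts hits and misses."""

    def __init__(self, load_from_source: Callable[[str], str]) -> None:
        self._load = load_from_source
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self._store:
            # Cache hit: return the stored copy without touching the source.
            self.hits += 1
            return self._store[key]
        # Cache miss: fetch from the source, keep a copy, then return it.
        self.misses += 1
        value = self._load(key)
        self._store[key] = value
        return value


def slow_lookup(key: str) -> str:
    # Stand-in for a database query or remote call (assumption).
    return key.upper()


cache = SimpleCache(slow_lookup)
first = cache.get("user:1")   # miss: loaded from the source
second = cache.get("user:1")  # hit: served from the cache
```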

The Role of Caching in Performance Improvement

Caching plays a crucial role in performance improvement by:

- Reducing latency

- Decreasing network traffic

- Lowering the load on backend systems

- Improving application responsiveness

As software architect Martin Fowler notes:

“A common way to improve the performance of a system is to add caching, thereby improving response times and reducing load.”

2. Types of Caching

Understanding different types of caching is crucial for implementing the right strategy for your application. Let’s explore some of the most common types:

In-memory Caching

In-memory caching stores data directly in the application’s memory space, providing extremely fast access times.

Benefits:

- Lowest possible latency

- Simplicity of implementation

Use cases:

- Storing session data

- Caching database query results

- Storing application configuration

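Popular in-memory stores include Memcached and Redis. Both keep data in RAM but run as separate server processes, so each access still involves a network or socket round trip. A cache held inside the application process itself (for example, a plain hash map or a library such as Caffeine for Java) avoids that hop at the cost of not being shared between instances.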

Distributed Caching

Distributed caching extends the concept of in-memory caching across multiple nodes, allowing for larger cache sizes and improved fault tolerance.

How it works in large-scale applications:

- Data is distributed across multiple cache servers

- Consistent hashing is often used to determine data placement

- Provides scalability and high availability
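
To make the consistent hashing point concrete, here is a minimal sketch of a hash ring. It is an illustration under stated assumptions rather than how any particular product places data: `HashRing`, the node names, and the choice of 100 virtual points per node are all hypothetical.

```python
import bisect
import hashlib
from typing import List, Tuple


def _hash(value: str) -> int:
    # Map a string to a large integer position on the ring.
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Hypothetical consistent hash ring with virtual nodes."""

    def __init__(self, nodes: List[str], replicas: int = 100) -> None:
        ring: List[Tuple[int, str]] = []
        for node in nodes:
            # Several points per node spread the keys more evenly.
            for i in range(replicas):
                ring.append((_hash(f"{node}#{i}"), node))
        ring.sort()
        self._ring = ring
        self._points = [point for point, _ in ring]

    def node_for(self, key: str) -> str:
        # The owner is the first ring point clockwise from the key's hash.
        index = bisect.bisect_right(self._points, _hash(key)) % len(self._ring)
        return self._ring[index][1]


ring = HashRing(["cache-a", "cache-b", "cache-c"])
owner = ring.node_for("user:42")
```

The useful property is that adding a node only moves the keys that now belong to the new node; every other key keeps its previous owner, so most of the cache stays warm.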

Common tools and technologies:

- Redis Cluster

- Apache Ignite

- Hazelcast

Browser and Client-Side Caching

Browser caching involves storing web resources (HTML, CSS, JavaScript, images) locally in the user’s browser.

Tips for implementing client-side caching effectively:

- Set appropriate cache-control headers

- Use ETags for validating cache freshness

- Implement service workers for offline caching in web applications

Server-Side Caching

Server-side caching occurs on the web server or application server, reducing the load on backend systems.

Techniques and tools for server-side caching:

- Page caching (e.g., Varnish)

- Object caching (e.g., Memcached)

- ORM-level caching (e.g., Hibernate second-level cache)

3. Key Caching Strategies

Choosing the right caching strategy is crucial for optimizing your application’s performance. Let’s examine some key strategies:

Cache-aside

In the cache-aside strategy, the application is responsible for reading and writing from both the cache and the primary data store.

How it works:

- Application checks the cache for data

- If not found, it reads from the database and updates the cache

- Subsequent reads are served from the cache

Advantages:

- Simple to implement

- Works well with read-heavy workloads

Disadvantages:

- Initial read penalty

- Potential for stale data if not managed properly
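
A minimal cache-aside sketch follows. The dictionaries `database` and `cache` are stand-ins for a real data store and cache, and the function names are hypothetical. Invalidating on write is one common way to limit stale data, shown here as an assumption rather than the only option.

```python
from typing import Dict, Optional

# Stand-ins for the primary data store and the cache (assumptions).
database: Dict[str, str] = {"user:1": "Ada"}
cache: Dict[str, str] = {}


def read_user(key: str) -> Optional[str]:
    # 1. The application checks the cache first.
    value = cache.get(key)
    if value is not None:
        return value
    # 2. On a miss it reads the database and populates the cache itself.
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value


def write_user(key: str, value: str) -> None:
    # Write to the database, then drop the cached copy so the next
    # read reloads fresh data instead of serving a stale value.
    database[key] = value
    cache.pop(key, None)
```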

Read-through and Write-through Caching

These strategies delegate the responsibility of reading from and writing to the underlying data store to the caching layer.

Read-through:

- Cache automatically loads data from the database when a cache miss occurs

Write-through:

- Data is written to the cache and the database synchronously as part of the same operation, so a write completes only after both are updated

When to use them:

- In scenarios where data consistency is crucial

- For applications with predictable data access patterns

Implementation tips:

- Use with a distributed cache for better scalability

- Consider combining with write-behind for improved write performance
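
The difference from cache-aside is that the caller only talks to the cache. A rough sketch, assuming a dictionary as the backing store and a hypothetical `ReadWriteThroughCache` class:

```python
from typing import Dict


class ReadWriteThroughCache:
    """Hypothetical cache that owns all access to the backing store."""

    def __init__(self, store: Dict[str, str]) -> None:
        self._store = store            # dict standing in for the database
        self._cache: Dict[str, str] = {}

    def get(self, key: str) -> str:
        # Read-through: on a miss the cache loads the value itself.
        # A key missing from the store raises KeyError.
        if key not in self._cache:
            self._cache[key] = self._store[key]
        return self._cache[key]

    def put(self, key: str, value: str) -> None:
        # Write-through: update the store and the cache in one call,
        # so the two never disagree after a successful write.
        self._store[key] = value
        self._cache[key] = value
```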

Write-back Caching

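Write-back caching, also known as write-behind, writes to the cache first and defers the database update, typically flushing changes asynchronously in batches. This improves write performance because the caller does not wait for the database.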

Benefits:

- Reduced write latency

- Improved write throughput

Drawbacks:

- Risk of data loss in case of system failure

- Increased complexity in ensuring data consistency

Practical examples:

- Logging systems

- Real-time analytics platforms
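
The sketch below shows the core idea under simplifying assumptions: a dictionary stands in for the database, `WriteBackCache` is a hypothetical name, and `flush()` is called explicitly where a real system would usually run it on a timer or background worker. Anything still marked dirty when the process dies is lost, which is the data-loss risk noted above.

```python
from typing import Dict, Set


class WriteBackCache:
    """Hypothetical write-behind cache with an explicit flush step."""

    def __init__(self, store: Dict[str, str]) -> None:
        self._store = store            # dict standing in for the database
        self._cache: Dict[str, str] = {}
        self._dirty: Set[str] = set()  # keys written but not yet persisted

    def put(self, key: str, value: str) -> None:
        # Only the cache is updated here; the store is untouched.
        self._cache[key] = value
        self._dirty.add(key)

    def get(self, key: str) -> str:
        if key not in self._cache:
            self._cache[key] = self._store[key]
        return self._cache[key]

    def flush(self) -> int:
        # Persist every pending write and report how many were written.
        for key in self._dirty:
            self._store[key] = self._cache[key]
        count = len(self._dirty)
        self._dirty.clear()
        return count
```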

4. Cache Invalidation and Eviction Policies

Effective cache management involves not only storing data but also ensuring its freshness and managing limited cache resources.

Understanding Cache Invalidation

Cache invalidation is the process of removing or updating cached data when it becomes stale or irrelevant.

Why cache invalidation is critical:

- Ensures data consistency

- Prevents serving outdated information to users

Methods for invalidating cache:

- Time-based expiration

- Event-driven invalidation

- Version tagging
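
As an illustration of the first two methods, the sketch below combines time-based expiration with an explicit `invalidate` call that an event handler could trigger. `TTLCache` is a hypothetical class, and the injectable `clock` parameter is an assumption added so the behaviour can be checked without waiting.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Hypothetical cache whose entries expire after a fixed lifetime."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        # Store the value together with its expiry time.
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Time-based expiration: drop the stale entry lazily on read.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Event-driven invalidation: call this when the source data changes.
        self._entries.pop(key, None)
```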

Cache Eviction Policies

Cache eviction policies determine which items to remove when the cache reaches its capacity limit.

Common eviction policies:

- Least Recently Used (LRU):

  - Removes the least recently accessed items first

  - Ideal for scenarios with temporal locality

- First In, First Out (FIFO):

  - Removes the oldest items first

  - Simple to implement but may not always be optimal

- Least Frequently Used (LFU):

  - Removes items that are accessed least frequently

  - Good for scenarios where access frequency is more important than recency
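
Of the three policies, LRU is the easiest to sketch with the Python standard library. The `LRUCache` class below is a hypothetical, minimal version built on `collections.OrderedDict`, which keeps keys in the order they were last touched.

```python
from collections import OrderedDict
from typing import Any, Optional


class LRUCache:
    """Hypothetical fixed-size cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._items:
            return None
        # Reading a key makes it the most recently used.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: str, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            # Evict from the front, which holds the least recently used key.
            self._items.popitem(last=False)
```

For caching function results, Python's built-in `functools.lru_cache` decorator provides the same policy without a custom class.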

As cache expert Daniel Lemire states:

“Choosing the right cache eviction policy can have a significant impact on your cache’s hit rate and overall system performance.”

5. Advanced Caching Techniques

To further optimize your caching strategy, consider these advanced techniques:

CDN Caching

Content Delivery Networks (CDNs) use caching to deliver content from servers geographically closer to users.

Best practices for CDN caching:

- Set appropriate cache-control headers

- Use cache tags for selective purging

- Implement edge computing for dynamic content caching

Caching in REST APIs

Implementing caching in API design can significantly reduce server load and improve response times.

Tools and libraries for API caching:

- HTTP caching headers

- OData caching

- GraphQL caching with Apollo Server

Implementation tips:

- Use ETags for cache validation

- Implement cache-control headers

- Consider using a caching reverse proxy like Varnish
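
The ETag and cache-control tips can be sketched without any web framework. In the example below, `make_etag` and `respond` are hypothetical helpers, the 60-second `max-age` is an arbitrary assumption, and only a single exact `If-None-Match` value is handled. A real server would also need to deal with lists of tags and weak validators.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # Derive a validator from the response body; ETags are quoted strings.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(
    body: bytes, if_none_match: Optional[str]
) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a cacheable GET response."""
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match == etag:
        # The client's copy is still valid: send 304 with no body.
        return 304, headers, b""
    return 200, headers, body


status, headers, payload = respond(b'{"id": 1}', None)
revalidated = respond(b'{"id": 1}', headers["ETag"])
```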

Caching in Microservices

Caching in microservices architectures presents unique challenges due to the distributed nature of these systems.

Challenges and strategies:

- Maintain data consistency across services

- Handle cache invalidation in a distributed environment

- Optimize for both performance and resilience

Common tools for caching in microservices:

- Redis

- Apache Ignite

- Hazelcast

6. Cache Performance Optimization

Optimizing cache performance is crucial for maximizing the benefits of your caching strategy.

Measuring Cache Performance

Key metrics to track:

- Cache hit ratio

- Cache miss ratio

- Latency

- Throughput
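
The hit ratio is the number of hits divided by the total number of lookups (hits plus misses), and the miss ratio is one minus that value. As a small illustration, Python's `functools.lru_cache` exposes these counters through `cache_info()`; the `square` function and the sequence of calls are made up for the example.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def square(n: int) -> int:
    # Stand-in for an expensive computation (assumption).
    return n * n


# Three distinct arguments (three misses) and three repeats (three hits).
for n in [1, 2, 1, 1, 3, 2]:
    square(n)

info = square.cache_info()
hit_ratio = info.hits / (info.hits + info.misses)
miss_ratio = 1 - hit_ratio
```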

Tools for monitoring cache performance:

- New Relic

- Datadog

- Prometheus with Grafana

Optimizing Cache Configuration

Tips for tuning cache settings:

- Adjust cache size based on workload

- Fine-tune eviction policies

- Optimize cache key design

Handling Cache Misses

Strategies to reduce cache misses:

- Implement prefetching for predictable access patterns

- Use multi-level caching

- Implement cache warming strategies
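
Multi-level caching and cache warming can be sketched together. In this hypothetical `TwoLevelCache`, a per-instance dictionary plays the role of the fast local level, a shared dictionary stands in for a remote cache, and `warm()` simply preloads a list of keys before traffic arrives.

```python
from typing import Callable, Dict, Iterable


class TwoLevelCache:
    """Hypothetical local (L1) cache in front of a shared (L2) cache."""

    def __init__(self, l2: Dict[str, str], loader: Callable[[str], str]) -> None:
        self._l1: Dict[str, str] = {}   # private to this instance
        self._l2 = l2                   # dict standing in for a shared cache
        self._loader = loader           # slow source of truth

    def get(self, key: str) -> str:
        if key in self._l1:
            return self._l1[key]
        if key in self._l2:
            # L2 hit: promote the value to L1 for the next lookup.
            self._l1[key] = self._l2[key]
            return self._l1[key]
        # Miss at both levels: load once and fill both.
        value = self._loader(key)
        self._l2[key] = value
        self._l1[key] = value
        return value

    def warm(self, keys: Iterable[str]) -> None:
        # Cache warming: preload keys that are expected to be requested.
        for key in keys:
            self.get(key)
```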

Managing stale data in cache:

- Use time-to-live (TTL) settings

- Implement background refresh mechanisms

- Use versioning or timestamps for cache entries

7. Cache Management and Best Practices

Effective cache management is essential for maintaining optimal performance and data consistency.

Best Practices for Cache Management

- Set appropriate cache expiration times:

  - Balance between data freshness and cache hit rate

  - Use different TTLs for different types of data

- Regularly monitor and update cache settings:

  - Analyze cache performance metrics

  - Adjust cache size and eviction policies as needed

- Implement proper error handling:

  - Gracefully handle cache failures

  - Implement fallback mechanisms
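
For the error-handling point, one common pattern is to treat the cache as optional: if it fails, log the problem and read from the source instead. The sketch below assumes a hypothetical `CacheUnavailable` exception and caller-supplied `cache_get` and `load_from_source` functions. A real client library would raise its own error types.

```python
import logging
from typing import Callable, Optional

logger = logging.getLogger(__name__)


class CacheUnavailable(Exception):
    """Hypothetical error raised when the cache cannot be reached."""


def get_with_fallback(
    key: str,
    cache_get: Callable[[str], Optional[str]],
    load_from_source: Callable[[str], str],
) -> str:
    try:
        cached = cache_get(key)
    except CacheUnavailable:
        # A cache outage should slow requests down, not fail them.
        logger.warning("cache unavailable, falling back to source for %s", key)
        return load_from_source(key)
    if cached is not None:
        return cached
    return load_from_source(key)
```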

Common Pitfalls in Caching

- Avoiding over-caching:

  - Don’t cache data that changes frequently

  - Be cautious with caching personal or sensitive information

- Ensuring data consistency:

  - Implement proper cache invalidation strategies

  - Use versioning or timestamps to detect stale data

- Managing cache size:

  - Monitor cache memory usage

  - Implement appropriate eviction policies

Tools for Cache Management

Popular cache management tools include:

- Redis Commander

- Memcached Admin

- Ehcache Management Center

These tools provide features like:

- Real-time monitoring of cache statistics

- Manual cache manipulation

- Configuration management

8. Real-World Examples and Case Studies

Let’s examine two real-world examples of successful caching implementations:

Case Study 1: E-commerce Platform Performance Optimization

A major e-commerce platform implemented a multi-layer caching strategy to handle high traffic during peak shopping seasons.

Implemented solutions:

- CDN caching for static assets

- Redis for session data and product information

- Varnish for full-page caching

Results:

- 70% reduction in database load

- 50% improvement in average page load time

- 30% increase in conversion rate

Case Study 2: Caching in Cloud-based Applications

A cloud-based SaaS provider implemented distributed caching to improve the performance of their microservices architecture.

Implemented solutions:

- Redis Cluster for distributed caching

- Cache-aside strategy for database query results

- Event-driven cache invalidation

Results:

- 60% reduction in API response times

- 40% decrease in database costs

- Improved scalability during traffic spikes

FAQ Section

Q: What is the primary benefit of caching in software development?

A: Caching significantly reduces data retrieval time, leading to faster application performance and reduced server load. It helps in serving frequently accessed data quickly, minimizing the need for expensive computations or database queries.

Q: How do you decide which caching strategy to use?

A: The choice depends on factors like data access patterns, application architecture, and performance requirements. Consider the following:

- For read-heavy workloads, cache-aside might be suitable

- If data consistency is crucial, consider read-through and write-through

- For write-heavy workloads with tolerance for slight data inconsistency, write-back caching could be beneficial

Q: What are common challenges with implementing caching?

A: Common challenges include:

- Cache invalidation: Ensuring cached data is up-to-date

- Data consistency: Maintaining consistency between cache and primary data store

- Cache size management: Balancing memory usage with performance benefits

- Distributed caching complexity: Handling data distribution and consistency in distributed systems

Q: Can caching be used in microservices architecture?

A: Yes, caching can be very beneficial in microservices architecture, but it requires careful planning. Considerations include:

- Choosing between local caches for each service or a shared distributed cache

- Implementing effective cache invalidation strategies across services

- Ensuring data consistency in a distributed environment

- Using caching to reduce inter-service communication

Conclusion

Caching is a powerful technique that can significantly enhance the performance and scalability of software applications. By understanding different caching strategies, implementing advanced techniques, and following best practices, developers can optimize their applications for speed and efficiency.

Remember that effective caching is not a one-size-fits-all solution. It requires careful consideration of your specific use case, continuous monitoring, and optimization. As your application evolves, so should your caching strategy.

We encourage you to implement these caching strategies in your projects and share your experiences. Every application is unique, and your insights could be valuable to the development community.
